Jul 11, 2025

How AI in Recruiting is Fueling the Rise of Fake Job Seekers and Undermining Remote Hiring

The rapid advancement of AI technology has brought remarkable innovations to many fields — recruiting included. However, as AI in recruiting becomes more sophisticated, it has also opened the door for new challenges that threaten the integrity of the hiring process. One of the most alarming developments is the increasing prevalence of fake job seekers who use AI-powered deepfake technology to impersonate others during remote interviews.

This article dives into the growing problem of AI-generated fake candidates, the security risks they pose to companies, and the national security implications tied to their activities. We will also explore how remote work trends have inadvertently fueled this issue and what organizations can do to adapt and protect themselves.

The Rise of Deepfake Job Seekers in the Era of Remote Work

The shift to remote work, accelerated by the COVID-19 pandemic, revolutionized the hiring landscape. Suddenly, companies were forced to conduct virtual interviews and onboard employees remotely, opening up access to a global talent pool. While this expanded opportunities for many, it also created a perfect breeding ground for fraudulent job seekers using deepfake technology.

Deepfake technology allows individuals to alter or entirely fabricate video and audio content, creating convincing yet fake personas. According to cybersecurity experts, a single still image and a few seconds of audio are enough to deepfake oneself, which makes the technique cheap and accessible to bad actors.

Consequently, these fake candidates can appear as legitimate applicants from anywhere in the world — including sanctioned nations — while hiding their true identities. They often submit forged credentials, fake resumes, or counterfeit LinkedIn profiles to gain access to coveted remote roles.

Research from Gartner predicts that by 2028, one in four job candidates worldwide will be fake, and current data already shows alarming rates. Across multiple job postings, approximately 16.8% of applicants have been identified as fraudulent, meaning roughly one in every six candidates applying to your company could be fake.
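As a quick sanity check on the 16.8% figure, the sketch below converts a fraud rate into its "one in N" form and estimates how many applicants in a pool would be fraudulent at that rate. The function names and the pool size of 500 are illustrative assumptions, not figures from the research cited here.

```python
def one_in_n(rate: float) -> float:
    """Return N such that the given rate equals 'one in N'."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    return 1 / rate


def expected_fraudulent(applicants: int, rate: float) -> int:
    """Estimate the number of fraudulent applicants in a pool (rounded)."""
    if applicants < 0:
        raise ValueError("applicants must be non-negative")
    return round(applicants * rate)


FRAUD_RATE = 0.168  # share of applicants identified as fraudulent (from the article)
POOL_SIZE = 500     # hypothetical number of applicants, chosen for illustration

print(f"{FRAUD_RATE:.1%} is about one in {one_in_n(FRAUD_RATE):.1f}")
print(f"Expected fraudulent applicants in a pool of {POOL_SIZE}: "
      f"{expected_fraudulent(POOL_SIZE, FRAUD_RATE)}")
```

At this rate, "one in six" is a slight rounding: the exact ratio is a little under six applicants per fraudulent one.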

Why Remote Hiring Amplifies the Risk

- Virtual interviews are easier to fake: Unlike in-person interviews, video calls allow candidates to use AI filters or deepfake software to mask their true identities.

- Lower barriers to entry: Remote roles do not require physical presence, enabling candidates from restricted regions or with visa challenges to apply using fake personas.

- Cost and convenience: Companies prefer virtual interviews for scheduling ease and cost savings, but this convenience comes with increased vulnerability.

Fake candidates often ask right away whether the position is remote. This is a potential red flag, since fraudulent applicants cannot show up to an office regularly. This pattern has made remote hiring a prime target for infiltration by deepfake job seekers.

Corporate Security Risks Posed by Fake Employees

Hiring a fake employee is not just an HR headache — it’s a major cybersecurity threat. Once inside an organization, these imposters can access sensitive data, steal proprietary information, and introduce vulnerabilities through malicious or poorly written code.

According to a survey by Resume Genius, about 17% of U.S. hiring managers have encountered candidates using deepfake technology during interviews. This statistic highlights how widespread the problem has already become.

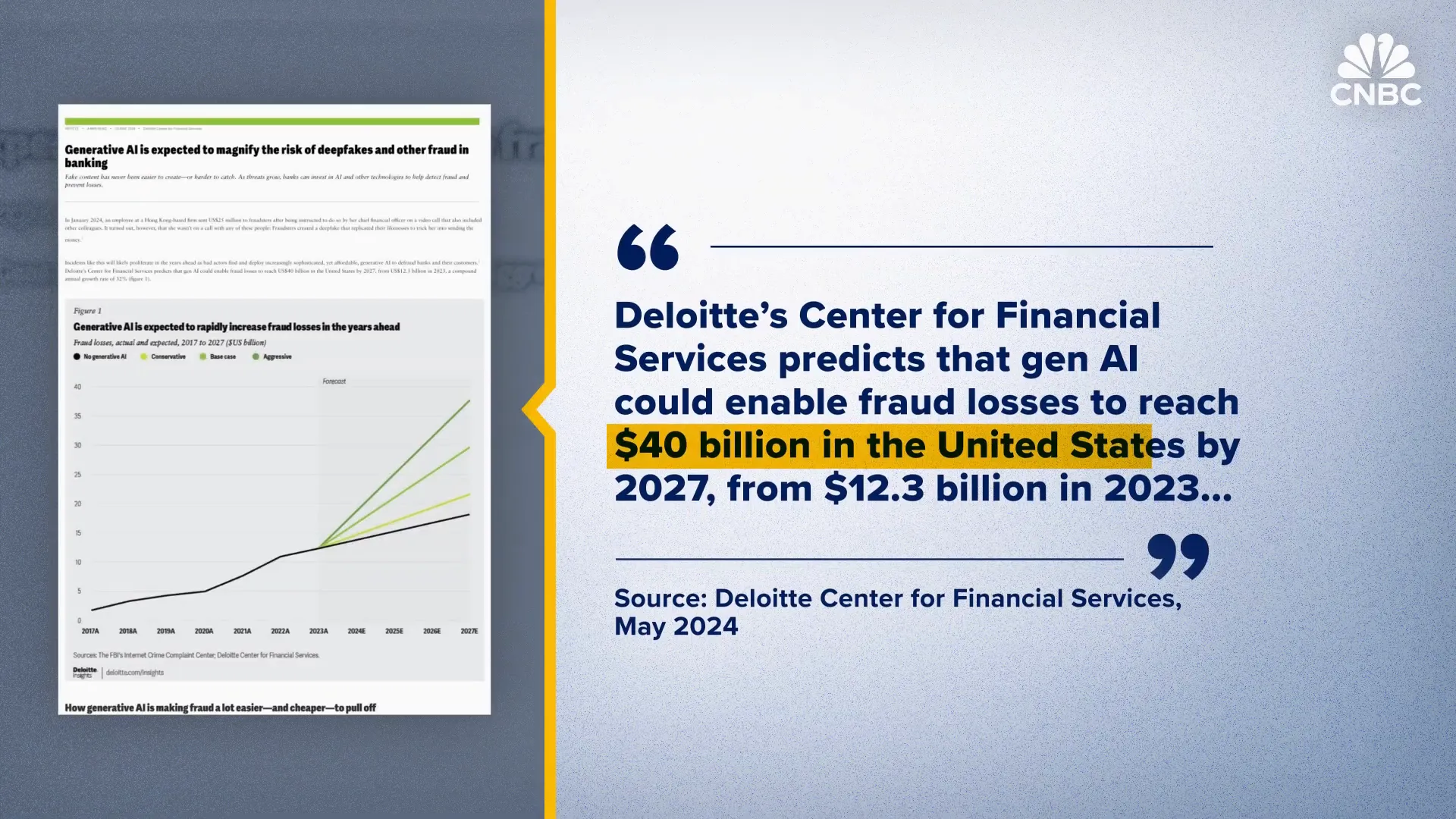

Deepfake scams have cost companies millions worldwide, and the financial impact is projected to escalate dramatically. AI-generated fraud, including deepfakes, could cause losses of up to $40 billion in the U.S. financial sector by 2027, up from $12.3 billion in 2023.
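To put that projection in perspective, the following sketch computes the compound annual growth rate implied by moving from $12.3 billion in 2023 to $40 billion in 2027. It assumes smooth compounding between the two endpoints, which is a simplification, and the function name is illustrative.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1 / years) - 1


LOSSES_2023 = 12.3  # billions of USD (from the article)
LOSSES_2027 = 40.0  # billions of USD, projected upper bound (from the article)

growth = implied_annual_growth(LOSSES_2023, LOSSES_2027, 2027 - 2023)
print(f"Implied growth: {growth:.1%} per year")
```

Under these assumptions the losses would need to grow by roughly a third every year, more than tripling over the four-year span.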

Many of these fraudulent candidates are motivated simply by financial gain. Some seek just a paycheck or two before disappearing, while others remain on the payroll long-term, funneling money back to criminal networks or sanctioned regimes.

How Fake Employees Exploit Corporate Systems

- Gain unauthorized access to company servers and sensitive data.

- Steal or manipulate confidential information.

- Collaborate with insiders or external actors to infiltrate further systems.

- Introduce malicious code or vulnerabilities that open doors to cyberattacks.

The consequences of such breaches can be devastating, ranging from intellectual property theft to reputational damage and regulatory penalties.

North Korea and the National Security Dimension

The issue of fake job seekers takes on an even more serious tone when linked to sanctioned nations like North Korea. In 2024, the U.S. Justice Department revealed that over 300 American companies were unknowingly employing imposters connected to North Korea for remote IT roles. These workers used stolen American identities and sophisticated methods to conceal their locations, funneling at least $6.8 million back to the North Korean regime.

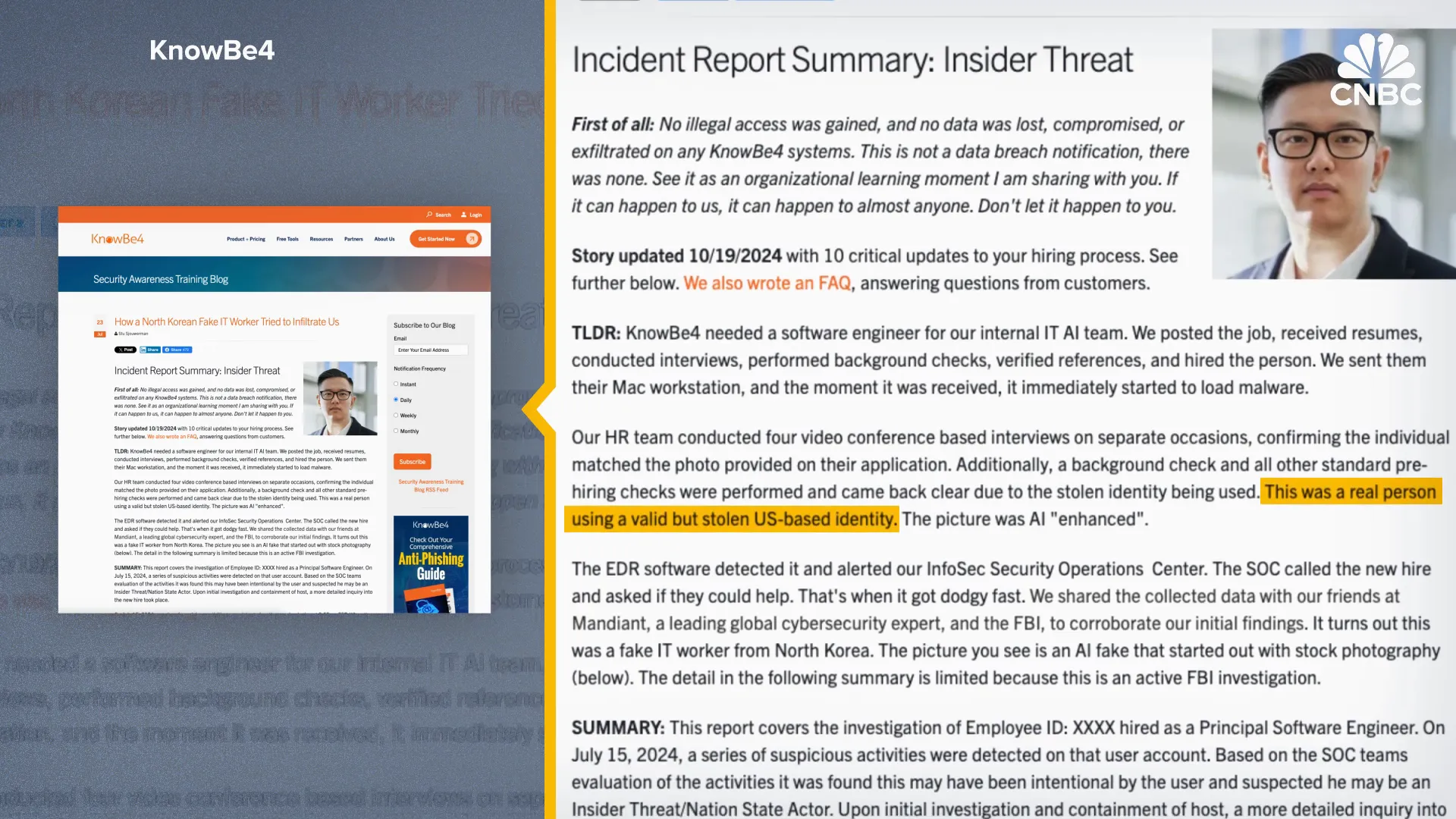

Even cybersecurity firms have fallen victim. One notable incident involved KnowBe4 unknowingly hiring a North Korean IT worker with a stolen U.S. identity. Although the initial interviews and credentials appeared legitimate, the new employee attempted to install a password-stealing Trojan on a company laptop shortly after onboarding. Thanks to quick IT security intervention, the threat was neutralized within minutes.

Voice authentication security company Pindrop Security also uncovered deepfake candidates from regions near North Korea, including a case dubbed “Ivan X,” who falsely claimed to be from the U.S. or Europe but was traced back to Russia’s Khabarovsk region near the North Korean border.

Of all candidates Pindrop reviews, one in 343 is linked to North Korea, and among those, one in four uses deepfake technology during live interviews. This pattern underscores the serious national security risks posed by these fake employees.
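The two ratios combine by simple multiplication. The sketch below works this out with exact fractions, assuming the "one in four" figure applies only to the North Korea-linked subset, as stated above. The variable names are illustrative.

```python
from fractions import Fraction

# Ratios reported in the article for candidates Pindrop reviews.
NK_LINKED = Fraction(1, 343)        # share of all candidates linked to North Korea
DEEPFAKE_GIVEN_NK = Fraction(1, 4)  # share of that subset using deepfakes live

# Share of ALL candidates who are both North Korea-linked and using deepfakes.
nk_deepfake = NK_LINKED * DEEPFAKE_GIVEN_NK

print(f"North Korea-linked deepfake candidates: one in {nk_deepfake.denominator}")
print(f"Per 100,000 candidates reviewed: about {float(nk_deepfake) * 100_000:.0f}")
```

This covers only the North Korea-linked cases. It says nothing about the overall deepfake rate, which would also include candidates with no such link.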

Why This Matters to National Security

- Illicit funding: Salaries paid to these fake employees fund activities of sanctioned regimes, inadvertently supporting illicit operations.

- Adversarial AI attacks: Fake employees can poison internal algorithms, causing malicious or biased outputs that disrupt organizational and societal functions.

- Social disruption: Manipulated AI outputs can exacerbate racial or societal divides, posing broader security challenges.

These factors elevate the problem beyond corporate risk into a pressing national security concern.

What’s Next: Adapting Hiring Practices to Combat Deepfake Candidates

As the number of fake job seekers continues to grow, companies must rethink their remote hiring strategies to safeguard their workforce and data. Some potential responses include:

- Return to in-person interviews: Requiring a face-to-face interview before extending a remote job offer can help verify candidate authenticity, though this approach increases costs and logistical complexity.

- Enhanced verification measures: Implementing multi-factor identity verification, biometric authentication, and AI tools designed to detect deepfakes can strengthen candidate screening.

- Bias and fairness considerations: Employers need to balance security with fairness to avoid discrimination or undue preference for local candidates simply to avoid remote verification challenges.

- Ongoing monitoring: Continuous security monitoring of new hires’ activities can detect suspicious behavior early and prevent damage.

While these measures may increase recruitment costs and slow hiring processes, they are critical to maintaining trust and security in the evolving job market.
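One way to picture how these measures might fit together is as a gate that a candidate must pass before an offer goes out. The sketch below is purely illustrative: the class, field names, and the threshold of two identity factors are hypothetical assumptions, not an established standard or any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class CandidateChecks:
    """Hypothetical record of verification steps completed for one candidate."""
    identity_factors_passed: int    # e.g. ID document, biometric match, reference check
    deepfake_screen_passed: bool    # result of whatever detection tool is in use
    in_person_interview_done: bool  # optional step, costly for remote roles


def ready_for_offer(checks: CandidateChecks, min_factors: int = 2) -> bool:
    """Require multi-factor identity verification plus evidence of a real person.

    Either a passed deepfake screen or an in-person interview counts as that
    evidence, so remote candidates are not forced to travel.
    """
    has_enough_factors = checks.identity_factors_passed >= min_factors
    has_real_person_evidence = (
        checks.deepfake_screen_passed or checks.in_person_interview_done
    )
    return has_enough_factors and has_real_person_evidence


remote_candidate = CandidateChecks(
    identity_factors_passed=2,
    deepfake_screen_passed=True,
    in_person_interview_done=False,
)
print(ready_for_offer(remote_candidate))
```

Accepting either a deepfake screen or an in-person interview is a deliberate choice in this sketch: it keeps a path open for genuine remote candidates who cannot easily attend an office, in line with the fairness concern raised above.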

The Broader Impact on Genuine Job Seekers

One of the most troubling consequences of the rise in fake candidates is the impact on legitimate applicants. When employers become wary of deepfake applicants, genuine candidates may face longer hiring cycles, increased scrutiny, or even rejection due to mistaken suspicions.

This “sand in the gears” effect not only frustrates honest job seekers but also hampers companies’ ability to efficiently fill roles, potentially leading to fewer hires and lost opportunities on both sides.

Ultimately, the challenge is to develop hiring practices that minimize fraud without creating unnecessary barriers for real candidates.

Conclusion

The integration of AI in recruiting offers incredible benefits but also presents new risks, especially as deepfake technology is weaponized by fake job seekers. The rise of remote work has inadvertently enabled these fraudulent actors to infiltrate the labor market, posing serious cybersecurity, financial, and national security threats.

Organizations must respond proactively by updating their hiring protocols, investing in verification technologies, and balancing the need for security with fairness and efficiency. Failure to do so risks not only corporate data and finances but also the broader stability of social and national systems.

As AI continues to reshape the world of work, vigilance and innovation in recruitment practices will be essential to ensure that the future of hiring is both secure and equitable.