Anthropic's 1M Token Leap and What It Means for AI in Recruiting and Beyond

I'm the creator behind The AI Daily Brief: Artificial Intelligence News. In this post I’ll walk you through the biggest developments I covered in a recent briefing, including Anthropic's release of a one million token context window for Claude Sonnet 4, and explain why these advances matter not only to developers but to anyone thinking about AI in recruiting, enterprise adoption, and long-lived agentic workflows.

If you care about AI in recruiting, you're tracking how models handle long documents, retain context, and integrate into business systems. These are the exact areas where the recent announcements from Anthropic, OpenAI, Google, and other players are shifting the competitive landscape.

Why a million-token context window matters

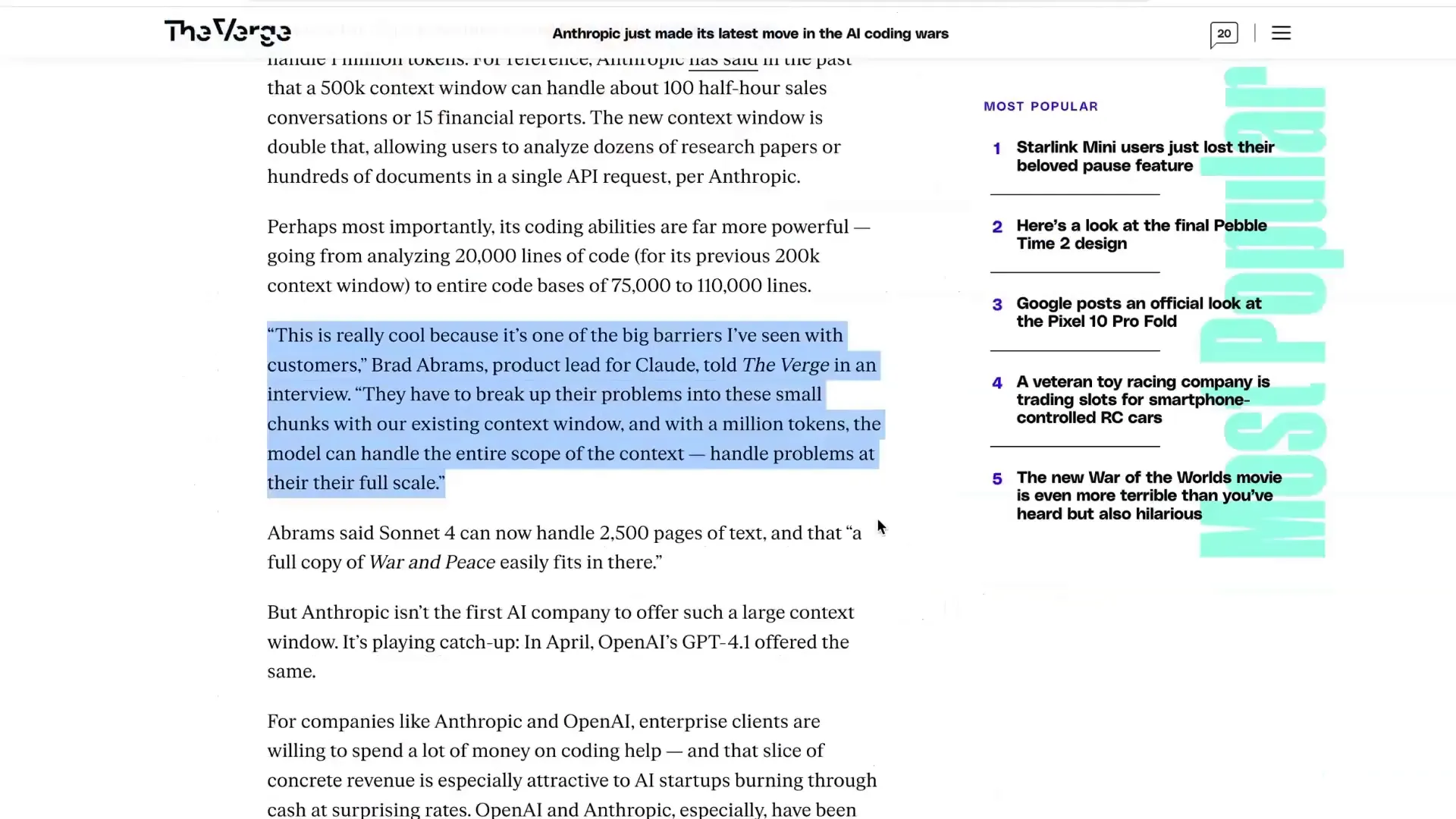

Anthropic recently announced that Claude Sonnet 4 can now process up to one million tokens in a single request. In practical terms, that is roughly 75,000 lines of code, or a very large collection of documents, ingested at once without slicing the data into smaller pieces.

For engineers and product teams, that’s a game changer. Previously, large codebases and long legal documents had to be chunked, indexed, and stitched back together for analysis. The million-token window reduces friction, simplifies pipelines, and enables the model to reason over entire bodies of work in one shot. That has direct implications for how organizations might evaluate candidate submissions, parse long resumes, and assess complex portfolios when they use AI in recruiting workflows.

Needle-in-a-haystack performance

Anthropic claims strong performance on internal “needle in a haystack” tests, which check whether a model can retrieve a specific fact buried somewhere in a long context, and reports 100% success in searching the entire context window. Claude’s product lead, Brad Abrams, emphasized that a larger context lets customers avoid breaking problems into smaller chunks and instead "handle problems at their full scale."

"They have to break up their problems into these small chunks with our existing context window, and with a million tokens, the model can handle the entire scope of the context."
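To make the idea of a needle-in-a-haystack test concrete, here is a minimal sketch of how such a check could be structured. It is not Anthropic's internal benchmark. The filler text, the passphrase, and the `ask_model` callable are all hypothetical, and `stub_model` is a string-search stand-in so the example runs without any API.

```python
from typing import Callable

# All four constants are made up for illustration.
FILLER = "The quarterly report was filed on time and contained no surprises."
NEEDLE = "The secret passphrase is 'blue-heron-42'."
QUESTION = "What is the secret passphrase?"
EXPECTED = "blue-heron-42"


def build_haystack(n_sentences: int, depth: float) -> str:
    """Place NEEDLE at a relative depth (0.0 = start, 1.0 = end) among filler."""
    sentences = [FILLER] * n_sentences
    position = round(depth * n_sentences)
    sentences.insert(position, NEEDLE)
    return " ".join(sentences)


def run_needle_test(
    ask_model: Callable[[str, str], str],
    n_sentences: int = 1000,
    depths: tuple = (0.0, 0.25, 0.5, 0.75, 1.0),
) -> float:
    """Return the fraction of depths at which the answer contains EXPECTED."""
    hits = 0
    for depth in depths:
        context = build_haystack(n_sentences, depth)
        answer = ask_model(context, QUESTION)
        hits += int(EXPECTED in answer)
    return hits / len(depths)


def stub_model(context: str, question: str) -> str:
    """Stand-in for a real model call: finds the passphrase by string search."""
    marker = "passphrase is '"
    start = context.find(marker)
    if start == -1:
        return "I could not find it."
    start += len(marker)
    return context[start:context.index("'", start)]


if __name__ == "__main__":
    print(f"retrieval rate: {run_needle_test(stub_model):.0%}")
```

To evaluate a real model, you would replace `stub_model` with a function that sends the context and question to your provider and returns the reply text, then scale `n_sentences` toward the window size you care about.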

That scale is valuable for complex agentic tasks — persistent, autonomous processes that run in the background and coordinate multiple steps over time. Imagine an AI-driven recruiting assistant that can read an entire applicant's portfolio, past communications, and test submissions in one pass and then reason about fit, compensation history, references, and culture match. That kind of long-horizon capability nudges AI in recruiting toward far richer, more accurate decision support.

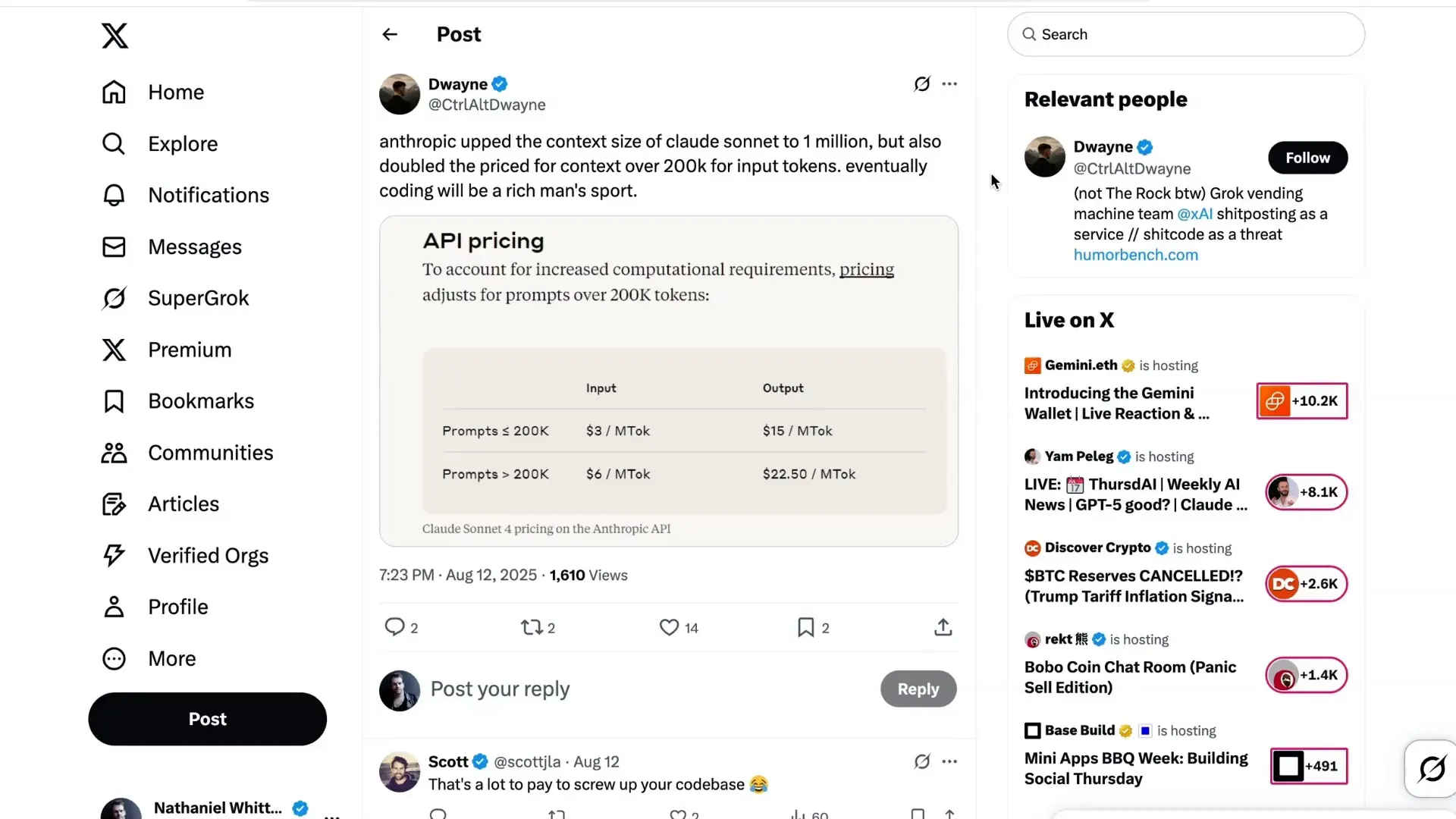

Pricing, access, and the enterprise play

Right now, Anthropic's million-token feature is offered to select high-paying API customers with custom rate limits. Pricing is a live debate: for inputs beyond about 200,000 tokens, Anthropic charges more, doubling prices for some tiers, which raises questions about long-term cost dynamics. Customers will have to weigh the performance gains against the recurring expense.
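A little arithmetic shows how the 200,000-token threshold changes the bill. The sketch below is a back-of-the-envelope estimator, not a pricing reference. The base rate of 3.00 dollars per million input tokens is a placeholder, the 2x multiplier mirrors the "doubled" figure above, and the assumption that the higher rate applies to the whole request once the threshold is crossed should be checked against your provider's current pricing page.

```python
def estimate_input_cost(
    input_tokens: int,
    base_rate_per_mtok: float = 3.00,      # placeholder rate, dollars per 1M tokens
    long_context_multiplier: float = 2.0,  # "doubled" above the threshold
    long_context_threshold: int = 200_000,
) -> float:
    """Estimate the input cost in dollars for a single request.

    Assumption: once a request exceeds the threshold, the higher rate
    applies to every input token in that request.
    """
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    rate = base_rate_per_mtok
    if input_tokens > long_context_threshold:
        rate *= long_context_multiplier
    return input_tokens * rate / 1_000_000


if __name__ == "__main__":
    for tokens in (150_000, 200_000, 800_000):
        print(f"{tokens:>9,} tokens -> ${estimate_input_cost(tokens):.2f}")
```

Multiplying the per-request figure by expected monthly volume gives a quick sense of whether single-pass review is affordable for a given workflow.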

Cost matters for adoption of AI in recruiting because recruiting workflows often run at scale. Whether it’s screening thousands of applicants or processing gigabytes of candidate work samples, repeated consumption of large context windows could add up. Enterprises will need to balance the benefits of a single-pass, high-fidelity review against per-request costs and build strategies for efficient usage.

- Short-term: pay for high-quality, single-shot analyses where accuracy and time-savings justify cost.

- Long-term: build hybrid pipelines that pre-filter or summarize content before invoking full-window requests.
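The hybrid approach in the list above can be sketched as a small routing function. This is one possible design under stated assumptions, not a recommended architecture: the four-characters-per-token estimate is a rough heuristic, the keyword pre-filter is a stand-in for whatever retrieval or summarization step you would actually use, and the names `plan_request` and `Plan` are hypothetical.

```python
from dataclasses import dataclass
from typing import List

CHARS_PER_TOKEN = 4  # rough heuristic; use a real tokenizer for billing decisions


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return len(text) // CHARS_PER_TOKEN + 1


@dataclass
class Plan:
    strategy: str            # "single_pass", "prefiltered", "full_window", "summarize_first"
    documents: List[str]     # documents to send (or to summarize first)
    estimated_tokens: int


def plan_request(
    documents: List[str],
    keywords: List[str],
    cheap_limit: int = 200_000,     # below this, assume standard pricing
    hard_limit: int = 1_000_000,    # maximum context window
) -> Plan:
    """Pick the cheapest strategy that still covers the relevant material."""
    total = sum(estimate_tokens(d) for d in documents)
    if total <= cheap_limit:
        return Plan("single_pass", list(documents), total)

    # Pre-filter: keep only documents that mention at least one keyword.
    lowered = [k.lower() for k in keywords]
    kept = [d for d in documents if any(k in d.lower() for k in lowered)]
    kept_total = sum(estimate_tokens(d) for d in kept)
    if kept and kept_total <= cheap_limit:
        return Plan("prefiltered", kept, kept_total)

    # Everything is needed and it still fits: pay for the large window.
    if total <= hard_limit:
        return Plan("full_window", list(documents), total)

    # Too large even for the full window: summarize before sending.
    return Plan("summarize_first", kept or list(documents), kept_total or total)
```

For example, a recruiter screening for Python experience might pass `keywords=["python"]` so that only the relevant work samples reach the model, falling back to the full window only when the filter cannot narrow things down.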

Government pricing and strategic access

Anthropic matched OpenAI's gesture of providing models to government entities for a nominal fee, offering access for just one dollar, and extended the offer to all three branches of government, including the judiciary and Congress. This move has procurement implications and a potential "soft power" dimension: when government staff become familiar with a tool, that familiarity can shape future policy and procurement decisions.

For firms thinking about AI in recruiting, government adoption matters indirectly: it influences regulatory expectations, sets precedents for privacy and data governance, and can accelerate standards that enterprises will have to meet when deploying hiring tools.

Acquihire: Humanloop and the tooling gap

Anthropic has also acquihired the team from Humanloop, a startup focused on prompt management, evaluation (evals), and observability for enterprise LLM deployments. While the company said it didn’t purchase Humanloop’s IP, bringing the team in signals that Anthropic wants to build more of the enterprise-grade tooling that companies need to run reliable, safe AI systems.

Enterprise adoption of AI in recruiting depends on robust tooling for:

- Prompt versioning and governance

- Automated evaluations and metrics aligned to HR outcomes

- Observability and auditing for bias, fairness, and legal compliance

Evaluation tooling is currently a major gap. By bringing in expertise from Humanloop, Anthropic is positioning itself to offer not just models but also the surrounding infrastructure that enterprises, including HR and recruiting teams, require to operate at scale.
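As one example of what "metrics aligned to HR outcomes" might look like in practice, the sketch below computes selection rates per group and the adverse impact ratio that underlies the commonly cited four-fifths rule of thumb from US employee-selection guidance. It is a minimal illustration, not a compliance tool: the group labels and outcomes are made up, the function names are hypothetical, and a ratio below 0.8 is a signal to investigate rather than a legal finding.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of applicants selected within each group.

    `outcomes` is an iterable of (group_label, was_selected) pairs.
    """
    applicants: Counter = Counter()
    selected: Counter = Counter()
    for group, was_selected in outcomes:
        applicants[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / applicants[group] for group in applicants}


def adverse_impact_ratios(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes provided")
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Return groups whose ratio falls below the four-fifths threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Made-up screening outcomes for two hypothetical groups.
    sample = (
        [("group_a", True)] * 5 + [("group_a", False)] * 5
        + [("group_b", True)] * 3 + [("group_b", False)] * 7
    )
    ratios = adverse_impact_ratios(sample)
    print(ratios, flag_groups(ratios))
```

Running a check like this on every prompt or model change, and logging the result, is one way to connect prompt versioning to auditable fairness metrics.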

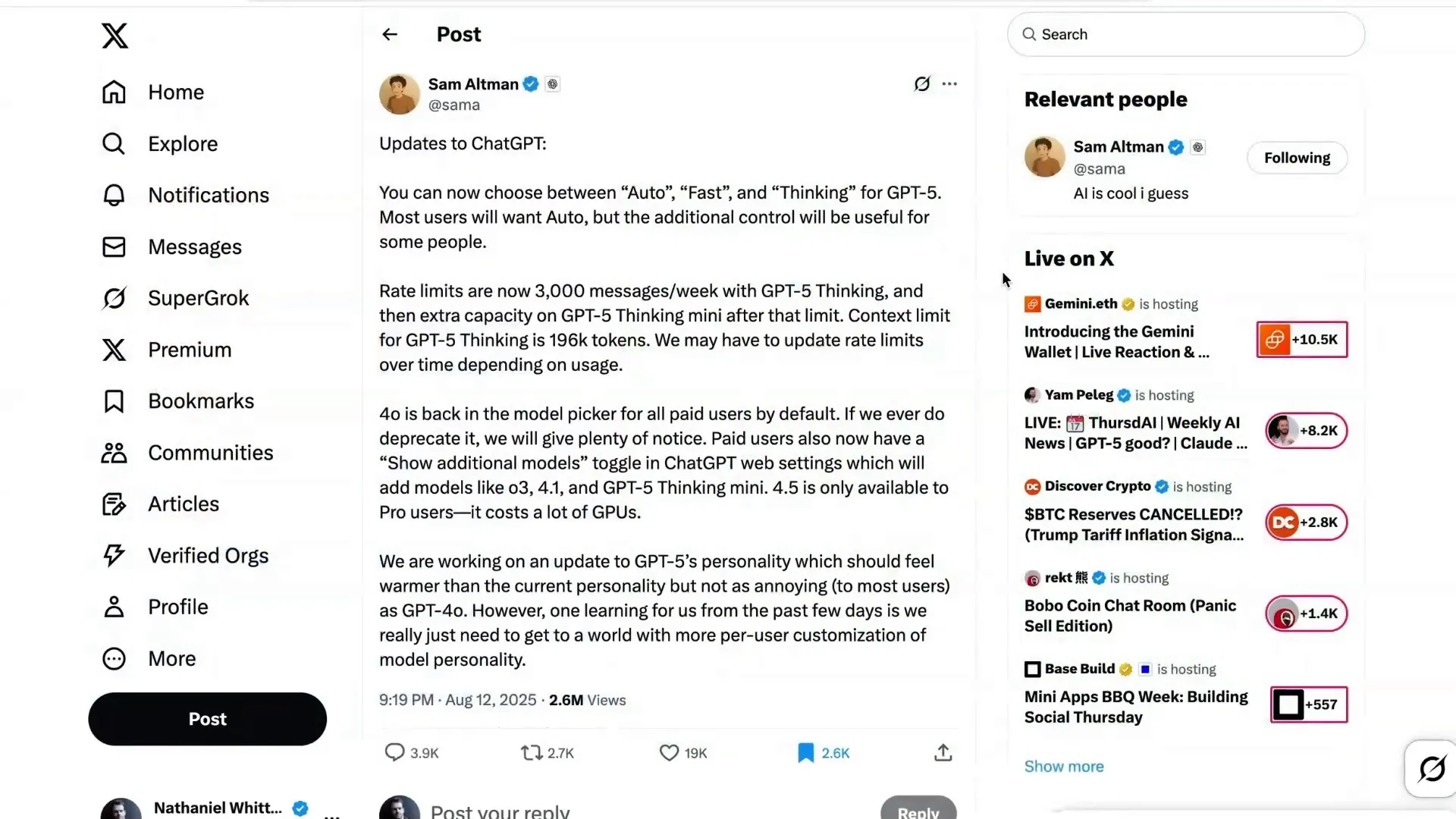

OpenAI’s product reversals and model selector comeback

OpenAI has been adjusting course in the wake of GPT-5’s rollout. After intense user feedback, it reinstated older model choices like GPT-4o and restored a version of the model selector. Sam Altman announced that users can now choose between modes such as auto, fast, thinking, mini, and pro — giving end users more control over tradeoffs between speed and deliberation.

"You can now choose between auto, fast, and thinking for GPT-5. Most people will want auto, but the additional control will be useful for some people."

This episode underscores the tension between simplifying product experiences for general users and preserving configurability for power users. In the context of AI in recruiting, power users — recruiters and hiring managers — often require predictable behavior and control. Removing options can break workflows, whereas exposing choices (with better UX) can let organizations tune models for fairness, sensitivity to tone, or conservatism in candidate evaluation.

Memory and personalization: table stakes

Another significant front is memory. Google rolled out automatic memory for Gemini so it can remember user preferences and prior conversations; OpenAI and Anthropic have made similar UX moves. Persistent memory is becoming essential — it forms a moat because it enables continuity across sessions and reduces repetitive setup.

For AI in recruiting, memory unlocks practical features:

- Candidate histories that persist across interviews and touchpoints

- Context-aware recommendations that recall prior assessments or actions

- Improved candidate experience through personalized follow-ups

I've personally found persistent memory saves immense time during multi-session workflows. If a recruiting assistant recalls previously uploaded portfolios, coding challenge outcomes, or interview notes, the recruiter doesn't have to reintroduce that context every time — and that compounds efficiency.
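A minimal sketch of what candidate memory could look like on the application side is shown below. It assumes the recruiting tool, not the model vendor, owns the stored context, and that nothing is stored without consent. The class and field names (`CandidateMemory`, `MemoryEntry`, `context_for`) are hypothetical and do not correspond to any vendor's memory feature.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Set


@dataclass
class MemoryEntry:
    kind: str          # e.g. "portfolio", "coding_challenge", "interview_note"
    content: str
    recorded_at: datetime


@dataclass
class CandidateMemory:
    candidate_id: str
    consent_given: bool = False
    entries: List[MemoryEntry] = field(default_factory=list)

    def remember(self, kind: str, content: str) -> None:
        """Store an entry, but only if the candidate has consented."""
        if not self.consent_given:
            raise PermissionError("candidate has not consented to stored context")
        self.entries.append(MemoryEntry(kind, content, datetime.now(timezone.utc)))

    def context_for(self, allowed_kinds: Set[str]) -> str:
        """Build prompt context from only the kinds of entry a task needs."""
        lines = [
            f"[{entry.kind}] {entry.content}"
            for entry in self.entries
            if entry.kind in allowed_kinds
        ]
        return "\n".join(lines)

    def forget(self) -> None:
        """Delete everything stored for this candidate."""
        self.entries.clear()
```

Passing only the `allowed_kinds` a given task needs keeps each model call scoped to relevant context, which is a simple form of data minimization.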

xAI departures and the safety conversation

Finally, leadership shifts sometimes reveal strategic emphasis. xAI’s co-founder Igor Babuschkin left to start Babuschkin Ventures, focusing on AI safety research and startups in agentic systems. He wrote about the importance of studying safety as models become more agentic and operate over longer horizons.

Safety is an explicit concern for AI in recruiting: tools that recommend or screen candidates must be auditable, fair, and compliant. When influential researchers pivot to safety-focused ventures, it can accelerate the development of methods, tooling, and governance frameworks that recruiting teams will need when they scale AI-assisted hiring.

Practical implications and recommendations for teams

If you are responsible for adopting AI in recruiting, here’s how to think about the recent waves of announcements:

- Map use cases to context needs. For deep portfolio analysis or multi-document review, larger context windows simplify architecture. But for high-volume screening, smaller, cheaper calls with summaries may be more efficient.

- Invest in evaluation tooling. Make sure you can measure fairness, bias, and performance against hiring KPIs. The Humanloop acquihire shows vendors are aware this is missing and are starting to close the gap.

- Plan for memory and continuity. Design candidate records so that the model has relevant context while honoring data minimization and consent.

- Watch pricing and optimize for scale. Usage that frequently leverages million-token contexts can be expensive; mix and match model calls intelligently.

- Keep humans in the loop. For decisions with legal or high-stakes consequences, preserve human review and clear audit trails.

Conclusion: bigger context, smarter workflows, and the path for AI in recruiting

Anthropic’s million-token context window is a clear signal that model capabilities continue to evolve rapidly, and so do the adjacent needs — pricing, tooling, memory, and governance. These developments move us closer to AI systems that can reason across long documents and multi-step tasks, which in turn unlocks richer possibilities for AI in recruiting.

But technology alone isn’t enough. The enterprise adoption curve will be driven by how well vendors provide observability, evaluation frameworks, cost-effective usage patterns, and memory that respects privacy and consent. For teams building recruiting workflows, that means prioritizing robust tooling and thoughtful integration — not just chasing the biggest context window.

As always, keep an eye on updates from the major vendors and evaluate solutions against the dual priorities of performance and responsible deployment. If you're exploring AI in recruiting, now is the time to prototype with a plan for measurement and human oversight — leveraging these new capabilities where they deliver clear, justifiable value.