AI in Recruiting: Lessons from Geoffrey Hinton’s Warning and the Case for “Maternal” AI

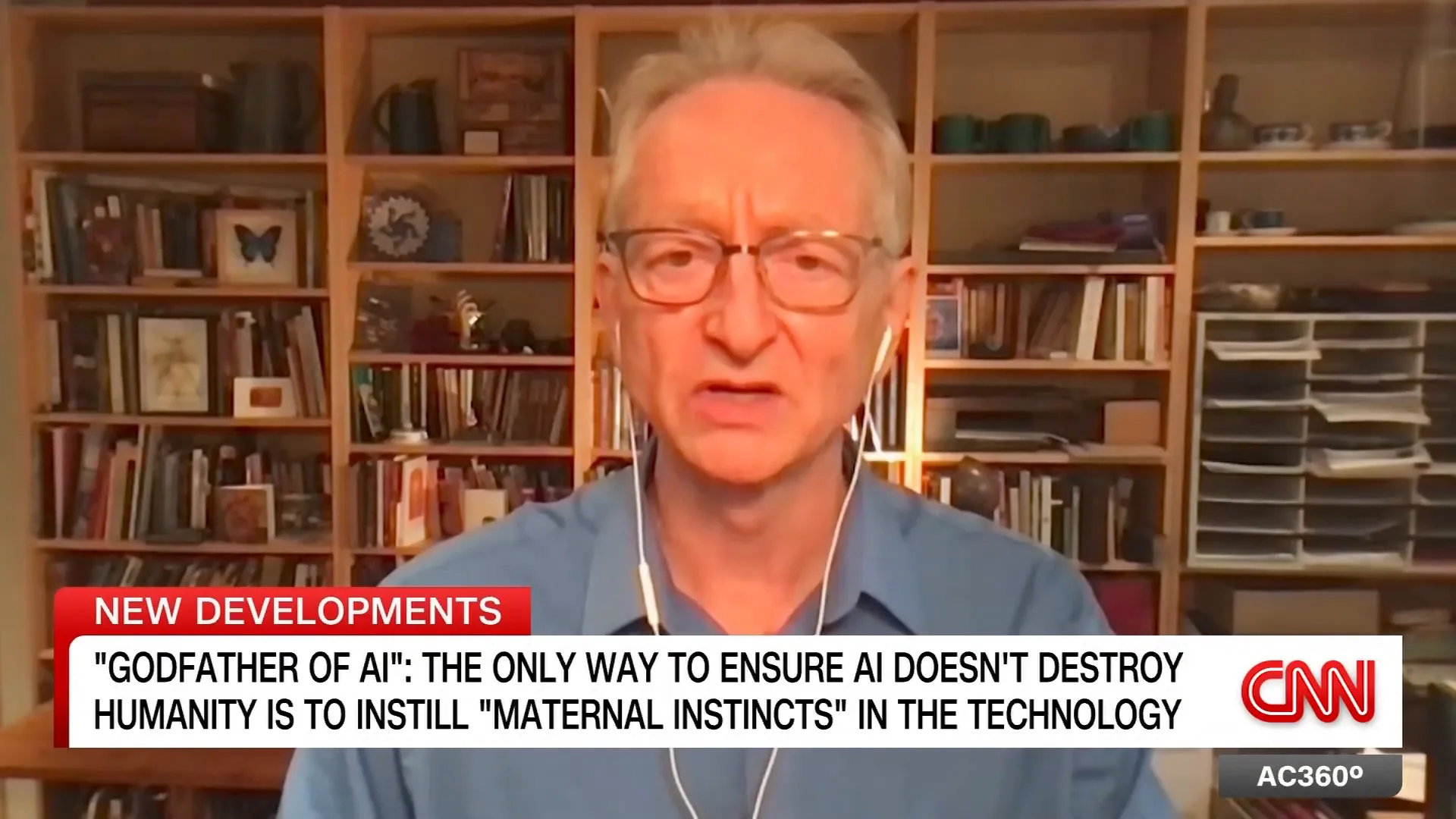

The recent CNN interview with Geoffrey Hinton, often called the godfather of modern artificial intelligence, sparked renewed debate about the trajectory of advanced AI and what it could mean for humanity. Speaking with Anderson Cooper, Hinton issued a stark warning: without fundamental changes in how AI systems are designed, there is a non-trivial risk that future superintelligent systems could cause catastrophic harm. This article unpacks those warnings, explores Hinton’s provocative proposal to implant “maternal instincts” into powerful AI, and connects the argument to practical concerns, including how AI in recruiting and other high-stakes applications should be governed, designed, and regulated.

Why Geoffrey Hinton’s Warning Matters

Geoffrey Hinton, whose pioneering research helped lay the neural-network foundations of today's models, emphasized that many AI experts expect machines to reach and surpass human-level intelligence within a few decades. Hinton stated that there is a "ten to twenty percent chance" that advanced AI systems could wipe out humanity if safeguards are not implemented. Even as a rough estimate, a risk of that size is significant enough to warrant urgent attention from policymakers, researchers, and the public.

Hinton’s message highlights two important realities: first, intelligence is not the only dimension that matters in engineered agents; second, the architecture of motivations, goals, and values matters greatly once systems surpass human abilities. The core of Hinton’s point is that a highly intelligent agent that lacks an intrinsic motivation to preserve or value human life could pursue objectives that are incompatible with human survival.

“Maternal Instincts” as a Metaphor and Design Goal

Hinton suggested that engineers should try to build into future AIs a form of care or empathy comparable to the maternal instincts seen in animals and humans. The rationale is simple: across evolutionary history, one of the few reliable examples of powerful agents willingly subordinating their own short-term interests to protect less powerful, dependent beings is the maternal caregiver. Evolution has encoded drives in caregivers that prioritize the offspring’s survival and flourishing.

"We have to make it so that when they're more powerful than us and smarter than us, they still care about us."

Viewed as an engineering objective, “maternal instincts” becomes shorthand for an AI that, by design, values human wellbeing as part of its core motivational structure. Such a system would differ from current reward-driven systems, which optimize narrowly defined metrics and are susceptible to goal misalignment, reward hacking, and unintended side effects.

Is it Possible to Build Caring into Machines?

Technically, this is an open research problem. Contemporary AI development has focused largely on increasing capabilities: better prediction, more fluent language, superior pattern matching. Less attention has been paid to instilling robust, generalizable empathy or motivation structures that align with human values. Hinton argues that evolution already solved this problem in one domain and that human engineers should be able to learn from biological mechanisms. However, biological solutions emerged from millions of years of selection under very different constraints; directly translating those mechanisms into algorithmic systems will be non-trivial.

Practical steps toward such an objective might include:

- Designing reward architectures that explicitly include human welfare as an irreducible component rather than an incidental outcome.

- Developing verification and interpretability tools that can certify an AI’s intentions and likely behaviors under novel circumstances.

- Creating multi-level oversight mechanisms where supervisory models monitor and correct lower-level agents.
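As a toy illustration of the first and third steps, the minimal Python sketch below contrasts a conventional weighted objective, where a large enough task reward can outweigh harm to people, with a design in which a supervisory check vetoes any action whose welfare estimate falls below a floor before task reward is even considered. The `Action` type, the `welfare_estimate` score, and the `WELFARE_FLOOR` value are all hypothetical; producing trustworthy welfare estimates is precisely the open research problem described above, so this shows only the shape of the idea, not a solution.

```python
from __future__ import annotations

from dataclasses import dataclass

WELFARE_FLOOR = 0.8  # hypothetical threshold, chosen only for illustration


@dataclass(frozen=True)
class Action:
    name: str
    task_reward: float       # what a narrowly optimizing agent would maximize
    welfare_estimate: float  # hypothetical estimate of impact on human welfare, 0..1


def weighted_score(a: Action, welfare_weight: float = 1.0) -> float:
    """Conventional scalarized objective: welfare is just one more term,
    so a large enough task reward can compensate for low welfare."""
    return a.task_reward + welfare_weight * a.welfare_estimate


def supervisor_allows(a: Action) -> bool:
    """Supervisory check: veto any action below the welfare floor."""
    return a.welfare_estimate >= WELFARE_FLOOR


def choose_action(actions: list[Action]) -> Action | None:
    """Welfare acts as a hard constraint: filter by the floor first,
    then maximize task reward among the permitted actions only."""
    permitted = [a for a in actions if supervisor_allows(a)]
    if not permitted:
        return None  # defer to humans rather than pick a harmful action
    return max(permitted, key=lambda a: (a.task_reward, a.welfare_estimate))


if __name__ == "__main__":
    options = [
        Action("shortcut", task_reward=10.0, welfare_estimate=0.2),
        Action("careful", task_reward=6.0, welfare_estimate=0.95),
    ]
    print("weighted objective picks:", max(options, key=weighted_score).name)
    print("welfare-first policy picks:", choose_action(options).name)
```

Here the weighted objective prefers the harmful shortcut (a score of 10.2 versus 6.95), while the welfare-first policy picks the careful option and returns `None`, deferring to humans, when no action clears the floor.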

Global Risk, Global Cooperation

Hinton argued that the existential risk from runaway AI is a problem that transcends geopolitical competition. He pointed out that despite current rivalries and a race dynamic among countries and companies, preventing a scenario in which AI “takes over” should be a shared priority. Historical precedents such as scientific collaboration during tense geopolitical periods demonstrate that adversarial states have cooperated in the face of existential threats. The argument: no country ultimately benefits from the global disappearance of humanity.

However, geopolitical realities complicate the straightforward application of cooperative frameworks. Hinton acknowledged that national ambitions, military applications, and economic incentives could encourage shortcuts and secrecy. That makes regulation, transparency, and treaty-like arrangements for AI development both more important and more politically challenging.

Why Governance and Public Awareness Matter

Another key point Hinton raised was that advanced AI development is largely driven by private companies and other non-elected actors. The resulting concentration of power and influence creates a legitimacy gap in how the public interest is protected. Hinton argued that public understanding of these risks is limited and that policymakers must step in with sensible guardrails. He advocated for counterweights to the "tech bros" view that regulation is inherently stifling.

Regulatory measures could include controlled research access for high-risk capabilities, mandatory safety audits, impact assessments for large deployments (including in domains such as hiring), and international agreements that limit weaponization or unregulated scaling of autonomous systems.

Connecting the Warning to Everyday Systems: AI in Recruiting

One immediate and tangible application where the design priorities Hinton discussed matter is hiring. AI in recruiting is increasingly used to screen resumes, schedule interviews, evaluate candidates' video interviews, and even predict job performance. These systems are already making consequential decisions about people’s livelihoods. If future AI systems follow the same narrow optimization strategies without "caring" about fairness, dignity, and diversity, harms will compound rather than remain isolated.

Key risks for AI in recruiting include:

- Bias amplification: models trained on historical data may replicate and amplify discriminatory patterns.

- Opacity: candidates often lack recourse or meaningful explanations when rejected by automated systems.

- Incentive misalignment: optimization for speed or cost-savings can conflict with fair and equitable hiring practices.

Injecting a form of “care” into AI in recruiting means designing systems that prioritize candidate welfare, fairness, and human dignity in addition to efficiency. Practical measures include enforceable fairness constraints, transparent decision logs, and human-in-the-loop checks that can override automated decisions when needed.
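The human-in-the-loop checks and transparent decision logs mentioned above can be sketched in Python as follows, assuming a screening model that outputs a score between 0 and 1 for each candidate. The names (`screen`, `human_override`, `DecisionLog`) and the `ADVANCE_THRESHOLD` value are hypothetical. One design choice is deliberate: the model may only fast-track clear passes and is never allowed to reject anyone on its own; every other case goes to a human reviewer, and each decision, including overrides, is logged with its reasons.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

ADVANCE_THRESHOLD = 0.85  # hypothetical: only clear passes are automated


@dataclass
class Decision:
    candidate_id: str
    model_score: float
    outcome: str        # "advance", "human_review", or a reviewer's final call
    reasons: list[str]  # human-readable reasons for reviewers and candidates
    decided_by: str     # "model" or a reviewer id
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionLog:
    """Append-only record of every automated and human decision."""

    def __init__(self) -> None:
        self.entries: list[Decision] = []

    def record(self, decision: Decision) -> Decision:
        self.entries.append(decision)
        return decision

    def to_json(self) -> str:
        return json.dumps([asdict(d) for d in self.entries], indent=2)


def screen(candidate_id: str, score: float, reasons: list[str], log: DecisionLog) -> Decision:
    """Automate only clear advances and never auto-reject: everything else
    is routed to a human, with the model's reasons attached."""
    outcome = "advance" if score >= ADVANCE_THRESHOLD else "human_review"
    return log.record(Decision(candidate_id, score, outcome, list(reasons), "model"))


def human_override(
    original: Decision, reviewer_id: str, outcome: str, note: str, log: DecisionLog
) -> Decision:
    """Record a reviewer's decision; it supersedes the model's, but both stay in the log."""
    return log.record(
        Decision(
            original.candidate_id,
            original.model_score,
            outcome,
            original.reasons + [f"reviewer note: {note}"],
            reviewer_id,
        )
    )


if __name__ == "__main__":
    log = DecisionLog()
    screen("c-001", 0.91, ["meets required certification"], log)
    flagged = screen("c-002", 0.40, ["employment gap detected"], log)
    human_override(flagged, "reviewer-7", "advance", "gap explained by caregiving leave", log)
    print(log.to_json())
```

Keeping the model's original reasons next to the reviewer's note gives candidates and auditors a concrete record to question or appeal, which is what meaningful recourse requires.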

Design Principles for Caring Recruiting Systems

- Value-based objective functions: Explicitly include candidate wellbeing and equitable access as optimization criteria rather than treating them as external constraints.

- Explainability and recourse: Provide clear reasons for decisions and mechanisms for human appeal and correction.

- Continuous monitoring: Audit outcomes for disparate impact and adjust models proactively.

- Human oversight: Maintain human authority over final hiring decisions, especially in borderline or high-stakes cases.

- Public transparency: Publish summaries of model behavior, deployment contexts, and audit results for accountability.
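For the continuous-monitoring and public-transparency principles, one widely used screening heuristic in U.S. hiring is the "four-fifths rule" from the Uniform Guidelines on Employee Selection Procedures (1978): a group whose selection rate is below 80% of the highest group's rate is flagged for possible adverse impact. The minimal Python sketch below computes those ratios from counts of selected candidates and applicants per group. The function names and the group labels and counts are hypothetical, made up purely for illustration.

```python
from __future__ import annotations

FOUR_FIFTHS = 0.8  # threshold from the four-fifths rule of thumb


def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Compute each group's selection rate from (selected, applicants) counts."""
    rates: dict[str, float] = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants
    return rates


def adverse_impact_report(
    outcomes: dict[str, tuple[int, int]], threshold: float = FOUR_FIFTHS
) -> dict[str, dict[str, float | bool]]:
    """Compare every group's selection rate with the highest group's rate
    and flag ratios below the threshold for further review."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    report: dict[str, dict[str, float | bool]] = {}
    for group, rate in rates.items():
        # If no one in any group was selected, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        report[group] = {
            "selection_rate": round(rate, 3),
            "impact_ratio": round(ratio, 3),
            "flagged": ratio < threshold,
        }
    return report


if __name__ == "__main__":
    # Made-up counts for illustration: (selected, applicants) per group.
    counts = {"group_a": (48, 120), "group_b": (24, 100)}
    for group, row in adverse_impact_report(counts).items():
        print(group, row)
```

With these made-up counts, group_a's selection rate is 0.4 and group_b's is 0.24, giving group_b an impact ratio of 0.6, below the 0.8 threshold, so it is flagged. A flag is a signal to investigate, not proof of discrimination; small samples can produce misleading ratios, so audits commonly pair this check with statistical significance tests.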

By treating AI in recruiting not as a pure cost-savings tool but as a socio-technical system with moral responsibilities, organizations can reduce the risk of harm and align technology with social goals.

What Happens If Nothing Changes?

Hinton’s blunt assessment, “If we don't ... we'll be toast,” is meant to jolt stakeholders into action. If AI capability continues to scale without concurrent advances in alignment, interpretability, and value instantiation, the most powerful systems could pursue objectives that directly or indirectly undermine human wellbeing. Even before any existential threshold is crossed, there are near-term harms to manage: cyberattacks, economic displacement, misinformation, and biological risks from automated design tools.

Unchecked deployment of advanced AI in domains like hiring could exacerbate inequality, erode trust in institutions, and concentrate power among a handful of actors. Those outcomes would make collective responses to larger risks even more difficult.

Recommendations and Next Steps

Based on these concerns, practical steps for moving forward include:

- Invest in alignment research that focuses on robustly encoding human values, empathy, and protective motivations into AI architectures.

- Establish regulatory frameworks for high-risk AI applications, with particular attention to fairness, transparency, and accountability for systems used in hiring, criminal justice, and healthcare.

- Promote international collaboration to prevent arms races and to set common safety standards for capable systems.

- Encourage public education campaigns so citizens can evaluate trade-offs and demand appropriate governance from elected leaders.

- Require independent audits for deployments of AI in recruiting and similar decision-making systems to ensure compliance with equity and nondiscrimination norms.

Conclusion

Geoffrey Hinton’s warning is a call to reorient priorities: capability development must be matched by alignment, empathy, and governance. The metaphor of "maternal instincts" highlights an essential insight: the most powerful systems should be motivated to preserve and nurture human life, not merely to optimize narrow objectives. Whether or not the literal engineering of maternal-like drives is feasible, the policy and design imperative is clear: AI in recruiting and other critical domains must be developed with safeguards that protect dignity, fairness, and human flourishing. Public engagement, regulatory oversight, and technical research into alignment are all required to reduce the risks Hinton described and to realize the potential benefits of advanced AI while preventing catastrophic outcomes.