Is GPT-OSS Actually Any Good? Exploring the Reality of OpenAI's New Open Source Model and Its Implications for AI in Recruiting

The release of OpenAI's new open source model, GPT-OSS, has stirred a whirlwind of excitement and scrutiny across the AI community. From breathless social media posts to detailed independent analyses, the model's debut has triggered a lively conversation about its real-world performance and potential applications. As someone deeply engaged in the AI space, I want to take you on a deep dive into what people are really saying about GPT-OSS—especially as it relates to practical fields like coding, STEM, and yes, even AI in recruiting.

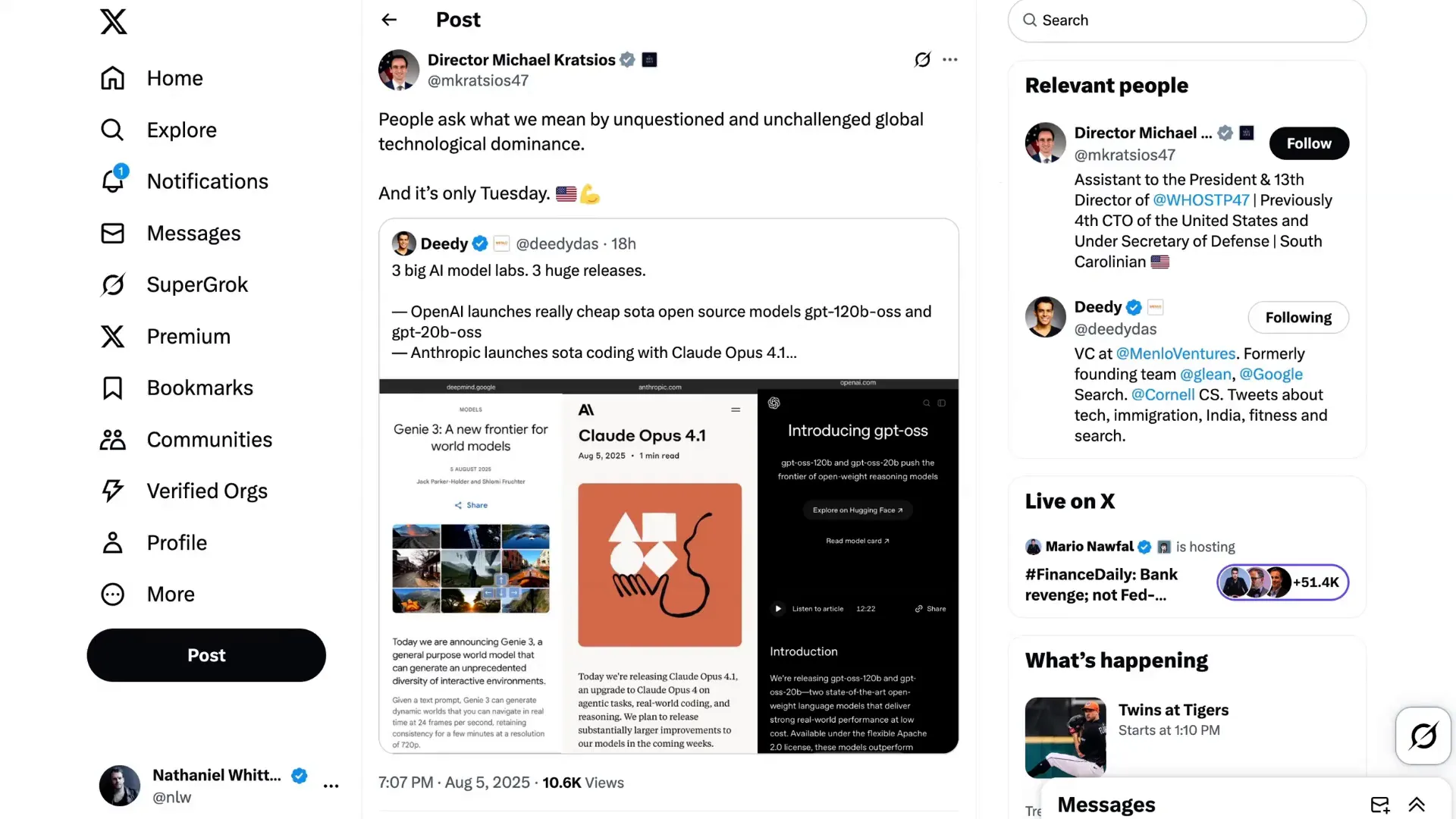

In this article, we’ll unpack the early reactions, dissect the model’s strengths and limitations, and consider how it stacks up against other open source models, including those coming out of China. Along the way, we’ll also touch on complementary breakthroughs like Google's Genie 3 world simulation model, which is reshaping expectations for interactive AI environments. By the end, you’ll have a clear-eyed perspective on GPT-OSS and where it fits in the evolving AI ecosystem.

The Initial Buzz: Speed and Efficiency Shine Bright

When GPT-OSS was first announced, the AI community was abuzz with enthusiasm. Dharmesh Shah, founder of HubSpot, was one of many who excitedly shared how they were running the model seamlessly on MacBook Pros—a testament to its accessibility and efficiency.

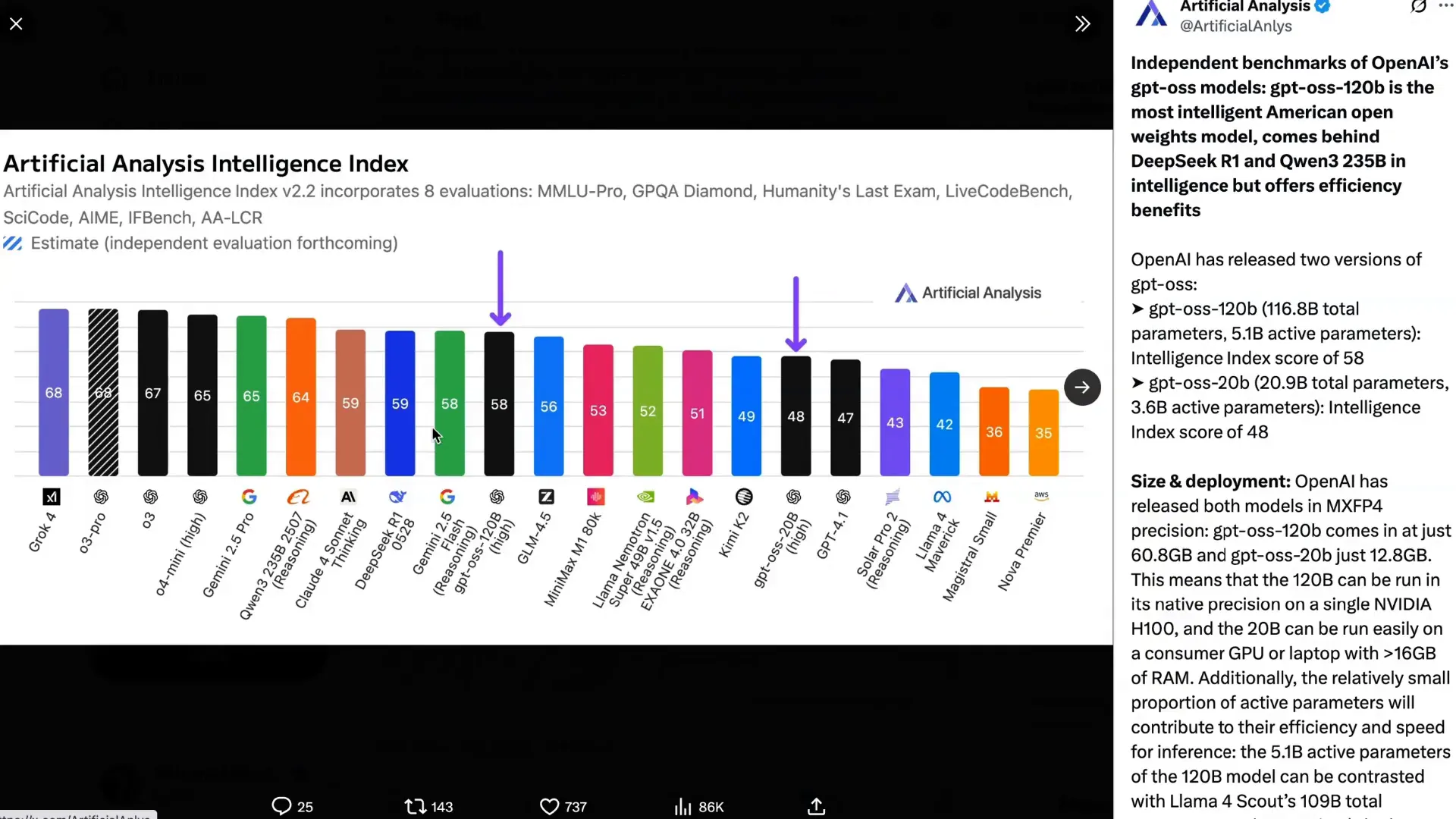

OpenAI's own early benchmarks suggested that GPT-OSS delivered impressive intelligence and speed at a lower cost than many existing models. Independent benchmarks, such as those from Artificial Analysis, placed GPT-OSS 120B as the smartest American open weights model available, albeit trailing some Chinese models like DeepSeek R1 and Qwen 3 235B in raw intelligence scores.

To put numbers on it, GPT-OSS 120B scored 58 on the Artificial Analysis Intelligence Index, just a point shy of R1 (59) and behind Qwen 3 (64) and o3 (67). While this gap is notable, the efficiency benefits (faster inference times and lower operational costs) make it attractive for many practical applications.
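For readers who want to see the size of those gaps at a glance, here is a minimal sketch that computes the point gap and the relative gap for the open weights models quoted above. The scores are the ones cited in this article; the function name `gap_report` is just an illustrative choice, and the proprietary model is left out to keep the comparison among open weights releases.

```python
# Intelligence Index scores as quoted in this article (open weights models only).
scores = {
    "GPT-OSS 120B": 58,
    "R1": 59,
    "Qwen 3": 64,
}


def gap_report(scores, baseline_name):
    """Return {model: (point_gap, percent_gap)} relative to the baseline model.

    percent_gap is the gap expressed as a share of the other model's score,
    rounded to one decimal place.
    """
    base = scores[baseline_name]
    report = {}
    for name, score in scores.items():
        if name == baseline_name:
            continue
        points = score - base
        report[name] = (points, round(100 * points / score, 1))
    return report


for model, (points, percent) in gap_report(scores, "GPT-OSS 120B").items():
    print(f"{model}: {points} points ahead ({percent}% of its score)")
```

On these figures the gap to R1 is small, while the gap to Qwen 3 is closer to a tenth of that model's score.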

Beyond the Surface: Uneven Performance and Safety Constraints

However, as the initial excitement settled, more nuanced and sometimes critical voices began to emerge. Emad Mostaque, formerly of Stability AI, questioned whether GPT-OSS was “entirely mid trained,” noting that while it performed well for its size, it sometimes behaved oddly or “fried.”

Other users echoed this sentiment, describing GPT-OSS as a "very jagged" and "uneven" model. Some suggested it felt like it was trained heavily on synthetic data, with safety mechanisms maxed out, leading to responses that seemed overly cautious or bland. This has raised questions about the trade-offs OpenAI made between safety, usability, and general intelligence.

One recurring theme was the model’s difficulty with tool calling—a critical feature for agent builders who prioritize security, privacy, and controllability. Without robust tool integration, GPT-OSS’s utility for complex, multi-step tasks could be hindered, limiting its appeal for certain developers.
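To make "tool calling" concrete, here is a minimal sketch of what an agent framework typically has to do with a model's output: parse a structured request, check it against the tools it knows about, and run it. Everything here is hypothetical and for illustration only, including the JSON shape, the `lookup_candidate` tool, and the `dispatch_tool_call` helper; it is not GPT-OSS's actual tool format. The point is that every failure branch below is a place where an uneven model can derail a multi-step task.

```python
import json


# Hypothetical tool: a real agent would query an applicant tracking system here.
def lookup_candidate(candidate_id: str) -> dict:
    return {"id": candidate_id, "status": "screening"}


# Registry of tools the agent is allowed to call (illustrative only).
TOOLS = {"lookup_candidate": lookup_candidate}


def dispatch_tool_call(raw_model_output: str) -> dict:
    """Parse a model's JSON tool call and run it, returning a result or an error."""
    try:
        call = json.loads(raw_model_output)
    except json.JSONDecodeError:
        return {"error": "model output was not valid JSON"}
    if not isinstance(call, dict):
        return {"error": "tool call must be a JSON object"}

    name = call.get("name")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in TOOLS:
        return {"error": f"unknown tool: {name!r}"}
    if not isinstance(args, dict):
        return {"error": "arguments must be a JSON object"}

    try:
        return {"result": TOOLS[name](**args)}
    except TypeError as exc:
        # Raised when the model supplies missing or unexpected argument names.
        return {"error": f"bad arguments: {exc}"}
```

A well-formed call such as `{"name": "lookup_candidate", "arguments": {"candidate_id": "c-42"}}` returns a result, while malformed JSON, an unknown tool name, or wrong argument names each come back as an error the agent has to recover from.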

Focused Strengths: Coding, Math, and STEM, But at a Cost

Several analysts have observed that GPT-OSS appears optimized for specific domains, namely coding, mathematics, and STEM fields, while sacrificing broader language capabilities and creative tasks. For instance, Björn Plüster noted the model's “very blatant” incapacity to produce linguistically correct German, highlighting weaknesses in multilingual support and general output quality.

Phil111 on Hugging Face went further, describing GPT-OSS as “unbelievably ignorant” compared to models like Gemma 3 27B and Mistral Small 24B. He suggested that OpenAI deliberately stripped broad knowledge from the model to restrict it to niche use cases, thereby protecting its paid ChatGPT service from competition.

This strategic narrowing might make sense from a business perspective, especially considering that the primary users of GPT-OSS are likely those with significant data security and privacy needs—developers and organizations wanting to bypass third-party hosted models. These users are less interested in poetry or general knowledge queries and more focused on reliable coding and technical problem-solving.

How Does GPT-OSS Compare to Chinese Open Source Models?

One of the most hotly debated topics is how GPT-OSS stacks up against the wave of powerful open source models emerging from Chinese AI labs. Before GPT-OSS’s release, many experts, including Martin Casado from a16z, pointed out that numerous US startups were building on Chinese open source models. They called for a national priority to boost domestic AI development.

Simon Willison, a respected AI commentator, noted that Chinese models from Qwen, Moonshot, and Z.ai had “positively smoked” Western competitors in July. However, with GPT-OSS’s debut, he tentatively suggested that OpenAI might now be offering the best available open weights model worldwide, though he was still waiting for more credible benchmark results.

By contrast, Ram Jawed and others reported that GPT-OSS underperformed Chinese models in one-shot tasks, especially in coding. DAX highlighted that on coding benchmarks, GPT-OSS was not yet close to the performance of recent Chinese releases.

Hassanal Gib bluntly stated there is currently no Western open source model that can beat or even tie the best Chinese models, emphasizing the competitive gap. Ethan Mollick also raised concerns about whether OpenAI has the incentive to keep updating the model and maintain the lead, suggesting the current advantage might be a one-off.

Optimism Amid Challenges: The Beauty of Open Source AI

Despite the critiques, many voices remain optimistic about what GPT-OSS represents for the open source AI ecosystem. Nathan Lambert described the launch as a “phenomenal step for the open ecosystem,” especially for Western countries and their allies.

He acknowledged the many “weird little failures” typical of open models and cautioned that it may take weeks before the community fully extracts the model’s potential. The “pain and beauty of open source,” as Matrix Memories put it, lies in confronting edge cases and imperfections head-on—something proprietary models often mask.

Nathan also pointed out the broader geopolitical context, noting how China is doubling down on open model releases as a strategic move. OpenAI’s return to open source could catalyze a turning point for adoption and impact, but the sustainability of this momentum remains uncertain.

Speed, Cost, and Efficiency: Hidden Advantages

One of the less discussed but crucial aspects of GPT-OSS is its speed and efficiency. Early testing indicates the model runs very fast and at a fraction of the cost of many competitors. While current users might prioritize raw performance regardless of cost, this advantage could become critical as workloads grow more complex and token consumption skyrockets.

This efficiency could make GPT-OSS particularly attractive in fields like AI in recruiting, where rapid, cost-effective processing of large volumes of candidate data is essential. The balance between performance and operational expense is a key factor that recruiters and developers alike will consider in adopting AI tools.
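As a rough illustration of why per-token cost matters at recruiting scale, here is a back-of-the-envelope sketch. All numbers are hypothetical placeholders chosen to keep the arithmetic easy (the resume count, the tokens per resume, and both prices); they are not published prices for GPT-OSS or any other model, so substitute your own figures.

```python
def estimate_cost(num_documents, tokens_per_document, price_per_million_tokens):
    """Estimate the cost in dollars of processing a batch of documents.

    price_per_million_tokens is a flat blended price; real pricing usually
    distinguishes input and output tokens, which this sketch ignores.
    """
    total_tokens = num_documents * tokens_per_document
    return total_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical workload: 50,000 resumes at roughly 2,000 tokens each.
resumes = 50_000
tokens_each = 2_000

# Hypothetical prices per million tokens for a cheaper and a pricier model.
cheap = estimate_cost(resumes, tokens_each, 0.50)
pricey = estimate_cost(resumes, tokens_each, 5.00)

print(f"Cheaper model: ${cheap:,.2f}")
print(f"Pricier model: ${pricey:,.2f}")
```

Under these assumptions a tenfold difference in price per token carries straight through to the total bill, and the gap widens further if each candidate is processed in several passes.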

Beyond GPT-OSS: The Rise of Google’s Genie 3 World Model

GPT-OSS wasn’t the only headline AI release recently. Google’s new Genie 3 world simulation model also dropped, and the reception has been overwhelmingly positive. A Twitter poll I conducted showed a nearly even split in excitement between GPT-OSS and Genie 3, which is notable given OpenAI’s usual hype machine.

Genie 3 enables real-time playable simulations generated from simple text prompts, impressing experts from DeepMind, a16z, and beyond. It’s being hailed as a breakthrough in interactive AI environments, with many calling it a potential “AGI moment” for AI-generated video and world simulation.

This technology opens up new frontiers, moving AI from merely automating existing human tasks to creating entirely novel experiences—an exciting shift that could eventually influence fields like gaming, training simulations, and even AI in recruiting by enabling immersive candidate assessments or virtual job tryouts.

The Road Ahead: Opus 4.1 and GPT-5 on the Horizon

Lastly, we can’t ignore the developments from Anthropic, which released Opus 4.1 amid this flurry of announcements. While some feel it was a rushed release, others praise its improved design sense, especially for coding applications.

Pricing and token limits remain sticking points, leaving questions about its accessibility for daily use. However, the bigger picture is that this week’s announcements are just the appetizer for what’s coming next—namely GPT-5, which is anticipated imminently and may redefine the competitive landscape again.

For now, GPT-OSS represents a significant, though imperfect, milestone in the open source AI journey. It’s a model that invites community engagement, iterative improvement, and thoughtful application, particularly in specialized domains like coding, STEM, and AI in recruiting.

Conclusion: A Step Forward, With Eyes Wide Open

GPT-OSS is a fascinating blend of promise and pragmatism. It’s fast, efficient, and optimized for specific technical tasks, making it an appealing tool for developers and organizations with stringent privacy and security demands. Yet, it is far from a perfect or universal solution, exhibiting limitations in multilingual support, general knowledge, creative tasks, and tool integration.

Its release marks OpenAI’s renewed commitment to the open source ecosystem, a move that could shift the balance in the global AI race—especially as Chinese models continue to evolve rapidly. However, the sustainability of this lead depends on ongoing updates, community engagement, and clear incentives.

For professionals interested in AI in recruiting, GPT-OSS’s strengths in coding and reasoning might translate into smarter, more secure candidate screening tools and workflow automation. But its current limitations also underscore the importance of carefully evaluating AI tools for the specific demands of recruiting, where nuance, creativity, and broad knowledge often matter.

As the AI landscape accelerates—with models like Genie 3 changing how we simulate and interact with digital environments—the future holds exciting possibilities. Staying informed, critical, and open to experimentation will be key to harnessing these advances effectively.

What are your thoughts on GPT-OSS and its role in the open source AI movement? Have you experimented with it in AI in recruiting or other fields? Share your experiences and insights in the comments below. Let’s keep the conversation going.