Jul 9, 2025

The AI Boom’s Multi-Billion Dollar Blind Spot: What It Means for AI in Recruiting and Beyond

Artificial intelligence has been heralded as the next great leap in technology, promising smarter, more intuitive systems capable of reasoning and solving complex problems. This surge in AI innovation, especially in reasoning models, has ignited massive investments and industry-wide excitement. But beneath the hype, troubling signs are emerging that challenge the fundamental assumptions about AI’s intelligence and its trajectory toward superintelligence. This article explores the growing skepticism around AI reasoning models, their limitations, and what this means for sectors relying on AI, including the field of AI in recruiting.

Introduction: Betting Big on Smarter AI

We are at the dawn of the reasoning AI era. The promise is clear: smarter models with sharper intuition that can think, reason, and solve problems step by step, much like humans do. Industry leaders envision a future where superintelligence, a system smarter than any human, is within reach, driven by a century-long trend of technological advancement.

To fuel this vision, companies are pouring billions into AI development, pushing computational demands to unprecedented levels. It is estimated that the computation required for these reasoning models is now a hundred times greater than what was necessary just a short time ago. The AI market is booming, with around 600 active real-world use cases today, a number expected to double or even triple within the next year.

However, amid this excitement, new research is casting doubt on whether these reasoning models are truly delivering on their promise or if they are an overhyped illusion. Are these AI systems genuinely getting smarter, or are they merely performing pattern matching and memorization at scale?

Is Reasoning AI Overhyped? The Illusion of Thinking

Reasoning AI is considered the next frontier beyond traditional chatbots that simply predict words. These models are designed to “show their work,” breaking problems into logical steps, planning actions, and reflecting on answers before finalizing them. This approach mimics human thinking, where reflection and multi-step reasoning improve outcomes.

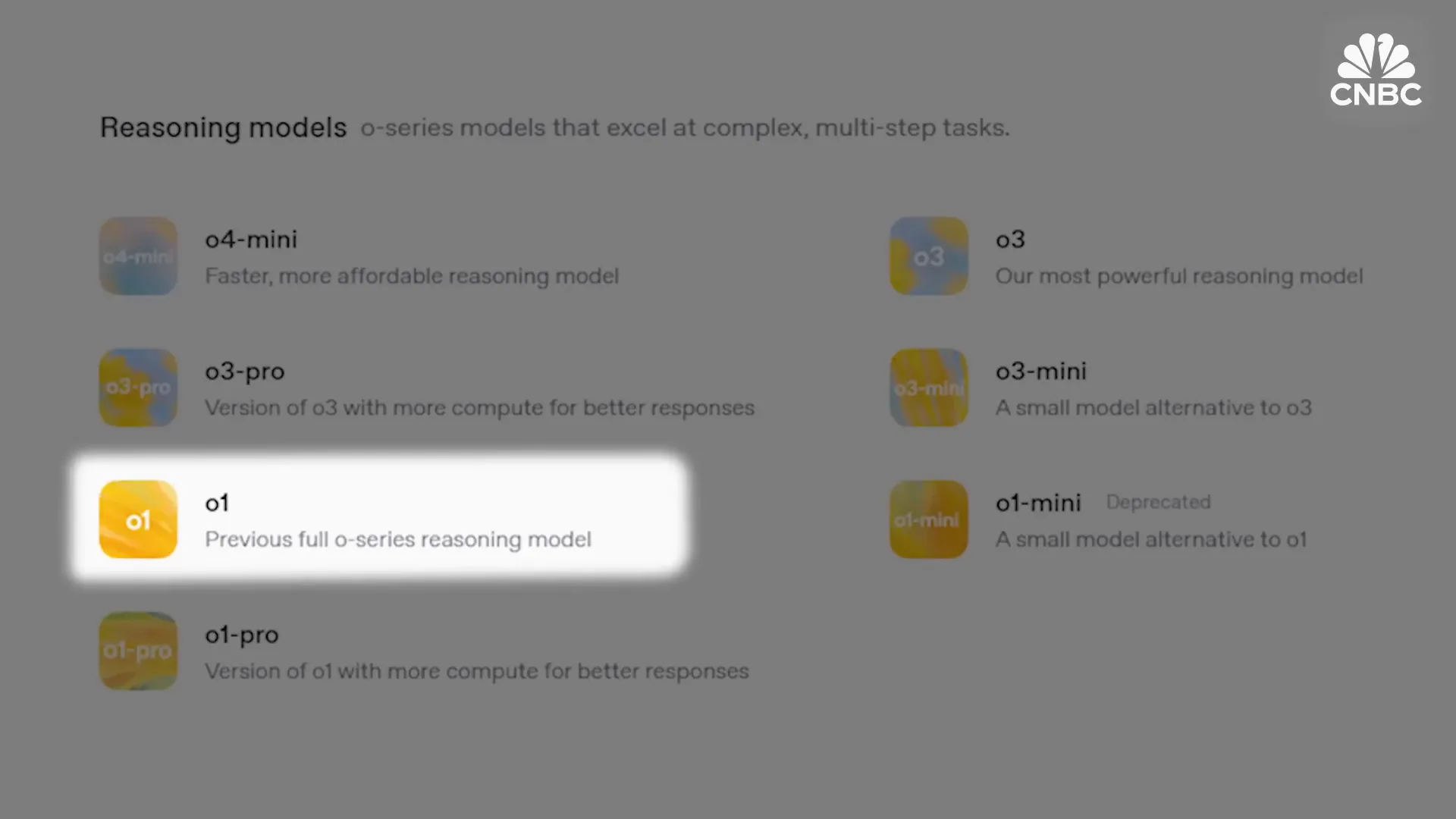

For example, models like GPT-4o and its iterations attempt to get answers right on the first try without revising, whereas reasoning models like o1-preview incorporate reflection, thinking through multiple potential solutions before delivering a final response. This “chain of thought” technique has become a popular trend among major AI developers, including OpenAI, Anthropic, Google, and DeepSeek, with each new model promising enhanced reasoning capabilities.

Yet a series of recent studies, including a high-profile paper from Apple titled “The Illusion of Thinking,” has raised red flags. The research shows that once reasoning tasks get complex enough, these models fail dramatically, calling into question their true reasoning abilities.

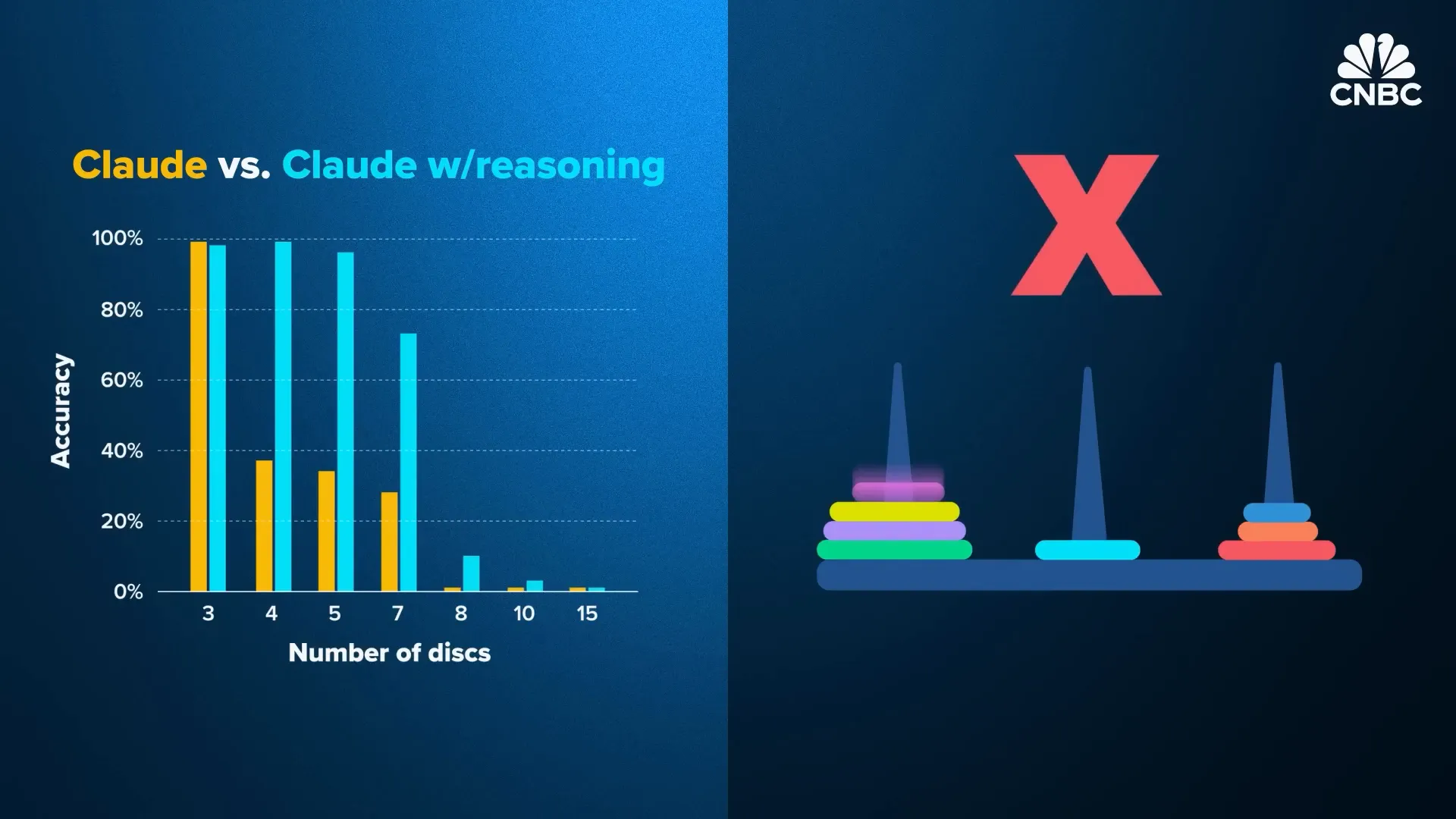

Apple’s team tested AI reasoning with the classic Tower of Hanoi puzzle, in which a stack of discs must be moved from one rod to another, one disc at a time, without ever placing a larger disc on a smaller one. While AI models performed adequately with simpler versions (three discs), performance plummeted to zero accuracy with seven discs or more, a collapse seen across models from Anthropic, DeepSeek, and OpenAI.
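
To see why the disc count matters so much, here is a minimal Python sketch of the standard recursive solution. It is an illustration only, not code from Apple's paper, and the names `hanoi_moves` and `is_valid_solution` are made up for this example. The shortest solution for n discs takes 2^n - 1 moves, so three discs need 7 moves while seven discs need 127, and a model has to produce every one of them without a single illegal step.

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Return the shortest list of (disc, from_rod, to_rod) moves for n discs."""
    if n == 0:
        return []
    # Move n-1 discs out of the way, move the largest disc, then restack the rest.
    return (
        hanoi_moves(n - 1, source, spare, target)
        + [(n, source, target)]
        + hanoi_moves(n - 1, spare, target, source)
    )


def is_valid_solution(n, moves):
    """Replay the moves and check every rule of the puzzle."""
    rods = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for disc, src, dst in moves:
        # The disc being moved must be on top of its source rod.
        if not rods[src] or rods[src][-1] != disc:
            return False
        # A larger disc may never be placed on a smaller one.
        if rods[dst] and rods[dst][-1] < disc:
            return False
        rods[dst].append(rods[src].pop())
    # Solved when the full stack sits on rod C, largest disc at the bottom.
    return rods["C"] == list(range(n, 0, -1))


for n in (3, 7, 10):
    moves = hanoi_moves(n)
    print(n, len(moves), is_valid_solution(n, moves))
# prints:
# 3 7 True
# 7 127 True
# 10 1023 True
```

A checker like `is_valid_solution` is also roughly how such a benchmark can be scored: one wrong move anywhere in the sequence counts as a failure, which is part of why accuracy can fall so sharply as puzzles grow.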

Similar failures occurred in other logic puzzles such as checker jumping and river crossing problems. This suggests that what looks like intelligence might just be memorization of patterns encountered during training rather than genuine problem-solving skills.

The Challenge of Generalization

A key issue highlighted by Apple and other AI researchers is the lack of generalization. These models excel on familiar problems they have seen before but falter when faced with new, complex, or untested scenarios. This “jagged intelligence,” as Salesforce terms it, exposes a significant gap between current AI capabilities and real-world enterprise needs.

Anthropic, a leading AI developer, echoed these concerns in its paper “Reasoning Models Don’t Always Say What They Think,” while China’s LEAP research lab noted that current training methods fail to elicit truly novel reasoning abilities. In essence, AI today can ace benchmarks and specific tasks but struggles with everyday common-sense reasoning that humans perform effortlessly.

This limitation means that AI models must be retrained repeatedly for each new problem type, undermining the vision of a versatile, adaptable reasoning AI. Instead, we may be entering an era of specialized AI models designed for narrow tasks but incapable of the flexible intelligence needed for broader applications.

The AI Trade at Risk: What This Means for Investors and Businesses

The AI industry’s foundational belief is that scale leads to intelligence. The more data a model is fed and the larger its architecture, the smarter it becomes—a principle known as the “scaling law.” This empirical regularity has driven massive investment in AI compute infrastructure, benefiting chipmakers like Nvidia and cloud providers.
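
Published scaling-law studies usually express this regularity as a power law linking a model's test loss to the compute, data, or parameters used to train it. The following is a simplified sketch of that idea. The symbols a and α and the value α = 0.05 are assumptions chosen purely for illustration and do not come from this article or any specific model.

```latex
% L = test loss, C = training compute, a > 0 and \alpha > 0 are fitted constants
L(C) \approx a \, C^{-\alpha}

% Effect of a tenfold increase in compute:
\frac{L(10C)}{L(C)} = 10^{-\alpha}

% With the hypothetical value \alpha = 0.05:
10^{-0.05} \approx 0.89
```

Under these assumed numbers, each tenfold increase in compute trims the loss by only about 11 percent. That is why staying on the curve requires exponentially growing budgets, and why any sign that the curve is flattening alarms investors.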

However, when models stop improving despite increased scale, the industry hits a wall. Such a moment occurred around November 2024, sparking an existential crisis marked by debates over stalled progress and geopolitical competition, particularly with China catching up. This downturn affected public AI stocks, with Nvidia entering correction territory.

While some industry leaders, like OpenAI’s Sam Altman and Nvidia’s Jensen Huang, remain optimistic about scaling laws continuing, others acknowledge uncertainty. The expectation was that reasoning models would be the breakthrough to escape this plateau, justifying renewed spending and keeping the AI trade vibrant.

But if reasoning models fail to scale effectively, this could undermine the entire infrastructure boom and force investors to question the return on their AI investments. The massive computational demands predicted for reasoning AI may not translate into proportional advances in intelligence.

Corporate America’s High Stakes on AI Reasoning

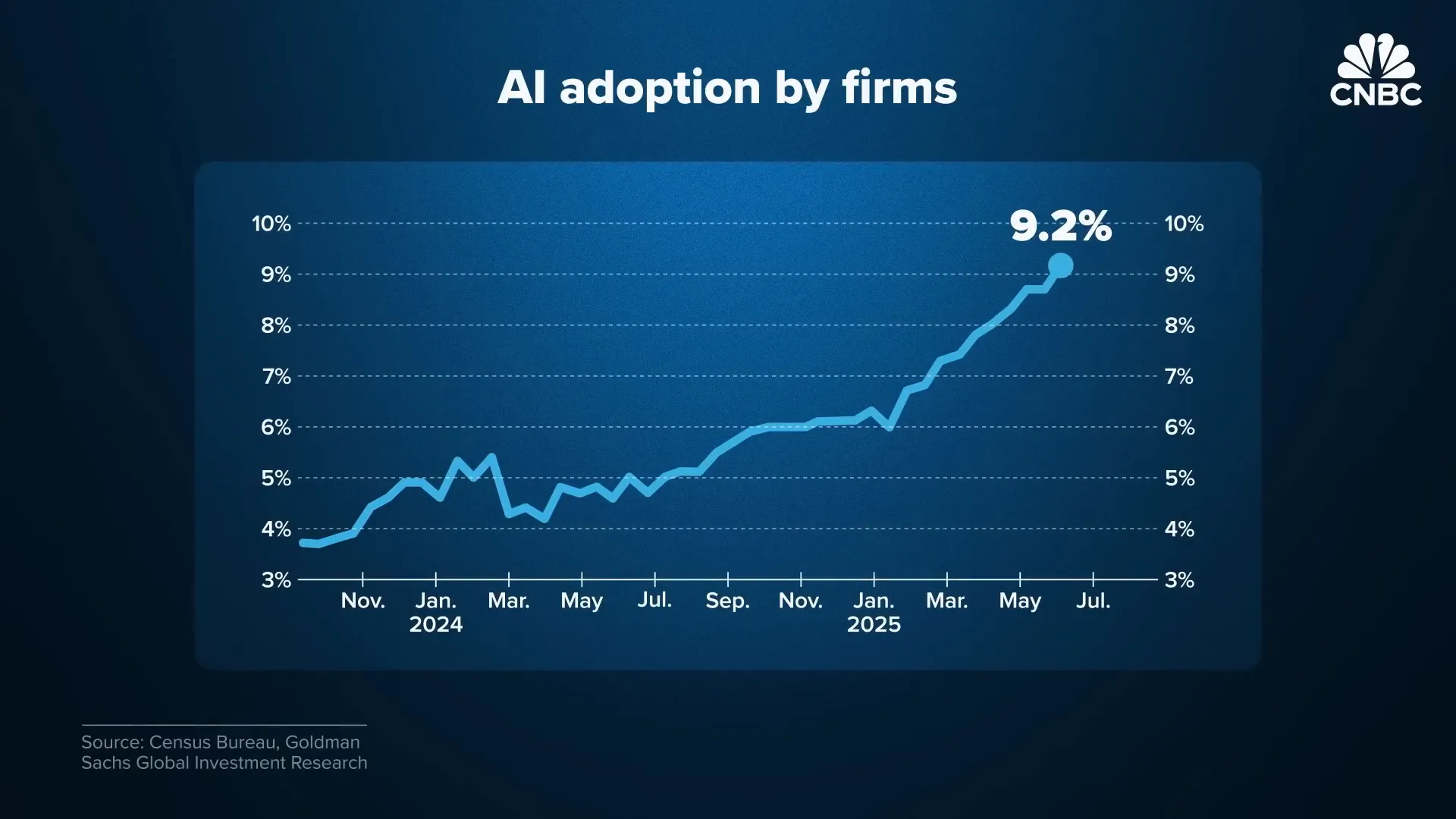

Beyond tech giants, many enterprises have jumped on the AI bandwagon, betting billions on the promise that AI will transform their businesses. The adoption of AI has accelerated rapidly, driven by the belief that it will revolutionize operations—even if the immediate benefits remain unclear, as JPMorgan CEO Jamie Dimon has noted.

For fields like AI in recruiting, these developments raise critical questions. AI tools designed to screen resumes, assess candidates, and optimize hiring workflows rely heavily on reasoning capabilities. If current AI reasoning models cannot generalize well or solve complex new problems, their effectiveness in dynamic, real-world recruiting scenarios may be limited.

In this context, the recent white paper from Apple landed like a cold splash of reality. While some criticized Apple for shifting the narrative as it tries to catch up in AI, the challenges it highlights are hard to ignore. Other researchers have responded, with Anthropic publishing a counter-paper, but the broader message remains: the timeline to true artificial general intelligence (AGI) may be much longer than anticipated.

The Superintelligence Illusion: How Far Are We Really?

The holy grail of AI remains artificial superintelligence—a system that can reason, adapt, and think beyond its training, surpassing human intelligence. Yet, the current evidence suggests that we are still many years away from this milestone, requiring breakthroughs that have yet to materialize.

While AI will continue to impact everyday life and specialized tasks meaningfully, the overarching narrative of imminent superintelligence is facing a reality check. The industry may have been chasing narrow benchmarks and shallow reasoning capabilities rather than the deep, flexible intelligence needed for AGI.

This has profound implications for the future of AI development, investment, and deployment, especially in industries like recruiting where nuanced understanding and adaptability are crucial.

The Race for AGI and Its Industry Consequences

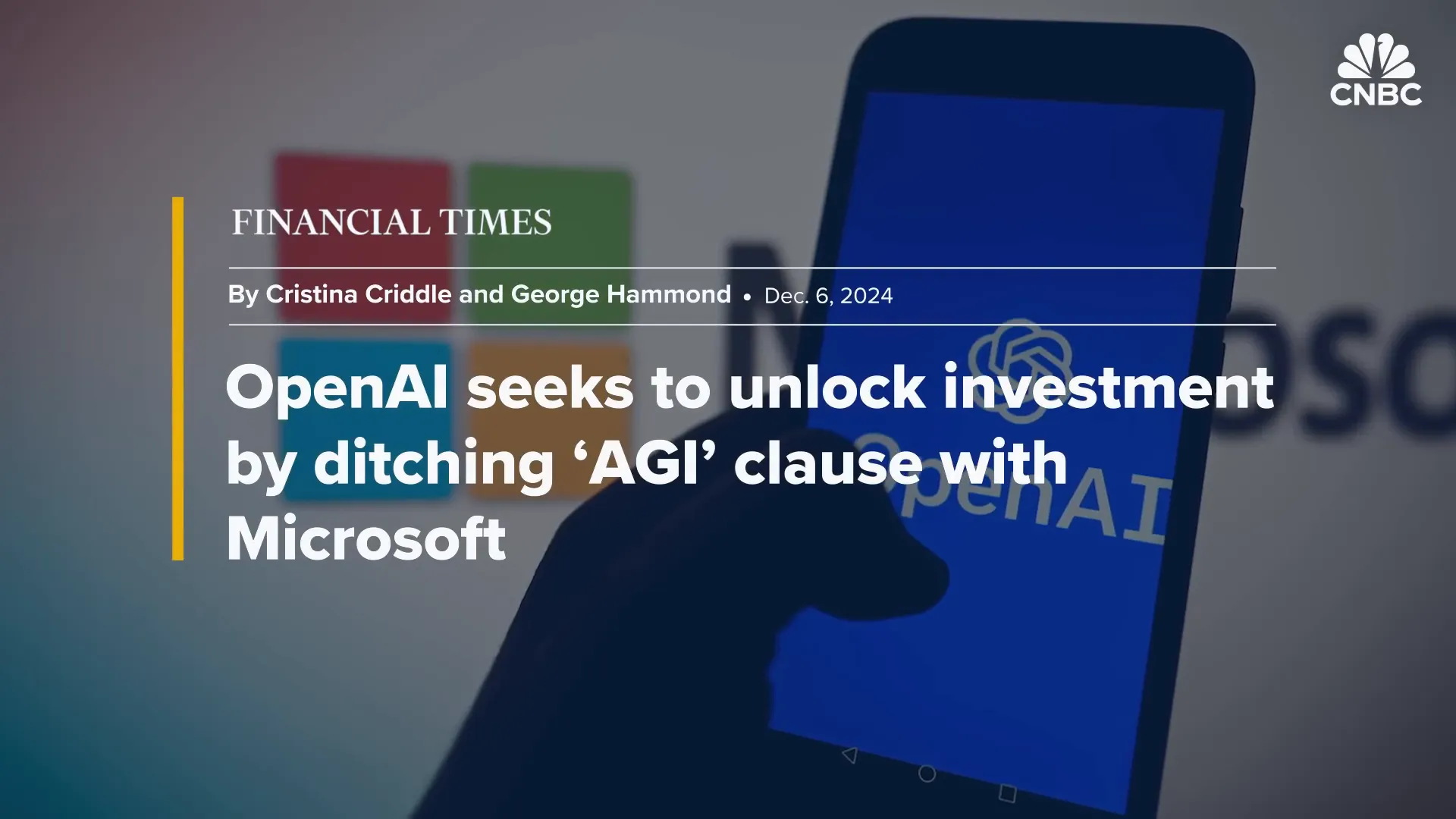

The pursuit of AGI is not just a technical challenge but a strategic one. Major players like OpenAI, Microsoft, SoftBank, and Meta are racing toward this goal, with significant stakes involved. OpenAI’s partnership with Microsoft, for instance, includes a clause that ends their collaboration once AGI is declared, underscoring how the definition of intelligence shapes control over AI’s future.

Reasoning models were expected to be the bridge to AGI. Instead, they may serve as a reminder of the distance still to travel. For businesses and investors, this means tempering expectations and preparing for a more diverse AI landscape—one where specialized, narrow AI coexists with ongoing research toward general intelligence.

Conclusion: Rethinking AI’s Path Forward

The AI boom has been fueled by the promise of smarter, reasoning machines capable of transforming industries, including recruitment. Yet, recent research reveals a multi-billion dollar blind spot: current reasoning models may not be as intelligent or generalizable as once believed. Instead, they often rely on memorized patterns and fail under complex, novel challenges.

This gap between hype and reality calls for a reassessment of AI’s capabilities and timelines. While AI will continue to deliver value in specific applications, the dream of superintelligence remains distant, requiring breakthroughs we have yet to achieve. For enterprises and investors, this means balancing enthusiasm with caution and recognizing that the AI revolution might unfold more gradually and diversely than expected.

Understanding these challenges is essential for anyone involved in AI-driven fields, especially AI in recruiting, where the stakes of intelligent, adaptable systems are high. The future of AI is bright, but it demands patience, innovation, and a clear-eyed view of what current technology can—and cannot—do.