Nvidia Pays Up to Access China Markets — What It Means for AI in Recruiting and Beyond

Nvidia and AMD have reportedly agreed to give the U.S. government a cut of their Chinese chip sales in exchange for export licenses. In this article I’ll walk through the deal, the pushback, the product and competitive shifts at NVIDIA, Microsoft/GitHub, and Anthropic, and why these shifts matter even to everyday HR teams and practitioners thinking about AI in recruiting.

What happened: a short, weird chronology

At the center of the story is a negotiation that reads like a political thriller. Jensen Huang of NVIDIA engaged directly with President Trump over whether the company could export its H20 AI chips, a part designed for the Chinese market, into China. Initially there were promises of large U.S. investments. Then, after a surprise White House block and back-and-forth diplomacy involving Chinese leaders, the reported result was that NVIDIA, and later AMD, agreed to hand over a percentage of revenues from Chinese sales to obtain export licenses.

Reports indicate that a starting demand of 20% was negotiated down to 15%. Some back-of-the-envelope math suggests NVIDIA might have sold roughly $23 billion worth of chips into China this year without tighter export controls, which would translate into around $3.5 billion flowing to the U.S. government annually at a 15% rate. NVIDIA’s official response was careful: the company did not deny the reports, said it follows the rules the U.S. government sets, and said it hopes export controls will allow U.S. tech to compete globally.
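The back-of-the-envelope math above is easy to reproduce. Here is a minimal sketch in Python that uses only the two reported figures (the $23 billion sales estimate and the 15% and 20% rates); the variable and function names are my own, and the inputs are estimates rather than confirmed numbers.

```python
# Both inputs are estimates from the reporting discussed above.
china_sales_usd = 23e9    # estimated annual China chip sales
negotiated_rate = 0.15    # reported revenue share after negotiation
initial_rate = 0.20       # reported starting demand


def government_share(sales: float, rate: float) -> float:
    """Return the portion of sales that would go to the government."""
    return sales * rate


at_15 = government_share(china_sales_usd, negotiated_rate)
at_20 = government_share(china_sales_usd, initial_rate)

print(f"At 15%: ${at_15 / 1e9:.2f}B per year")
print(f"At 20%: ${at_20 / 1e9:.2f}B per year")
print(f"Difference from negotiating down: ${(at_20 - at_15) / 1e9:.2f}B per year")
```

At 15% the share comes to about $3.45 billion, which is where the "around $3.5 billion" figure comes from. The result scales linearly with the sales estimate, so any error in the $23 billion assumption carries straight through.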

Why this is unprecedented

Export control experts point out that no U.S. company has historically agreed to give a portion of revenue to secure export licenses. Critics see this as a dangerous new precedent: a direct financial stake for the government tied to relaxing national security controls. That's why voices across the political spectrum reacted strongly. China experts warned that this looks like turning export licenses into revenue-stream opportunities, while others called it corporatism or even an extortion-style model of sovereign risk.

At the same time, some Wall Street analysts framed the agreement as a predictable, modelable cost that replaces the binary risk of a full ban—making the China AI market less headline-driven and more predictable for investors.

Political, security, and economic reactions

The range of reactions is wide. Some commentators highlighted national security worries: the original rationale for restricting these chips was precisely that China's access to top-tier AI hardware could create a security risk. If a firm can simply pay to get around restrictions, critics argue, that undermines the policy's intent.

Others were focused on the economic precedent and competitive implications. If the U.S. government chooses which companies can sell into China by exacting fees, that may advantage incumbent giants while squeezing smaller enterprises that cannot shoulder such charges. Some observers compared it to a mafia-style shakedown at a sovereign level; others welcomed the predictability investors crave.

NVIDIA’s product story: beyond chips

While headlines fixated on export licenses, NVIDIA keeps working on product innovation. The company has expanded its Cosmos family of world models with Cosmos Reason, a 7-billion-parameter reasoning vision-language model aimed at data curation, robot planning, and video analytics.

Embodied AI—AI that interacts with the physical world through robots or devices—is less flashy in mainstream coverage but potentially as transformative as LLMs. Cosmos Reason is part of that push. The emergence of more capable world models matters for many domains, including automation of tasks that intersect with HR and recruitment operations, such as candidate screening via video interviews, automated assessment of physical tasks in vocational hiring, or even robotic interactions at hiring events.

Microsoft folds GitHub into its core AI team — why that matters

In parallel with the hardware and policy stories, Microsoft announced a major organizational change: Thomas Dohmke, GitHub’s CEO, is stepping down to found a startup, and GitHub will be folded into Microsoft’s core AI engineering team rather than operating as a separate arm. That team is led by Jay Parikh and sits apart from Microsoft’s consumer AI team.

Why is this important? GitHub Copilot has shifted from a strategic developer outreach tool into a full-blown revenue driver. GitHub’s integration into Microsoft's AI center signals that code-generation and AI-assisted development are central strategic assets for the company—and for enterprise AI overall.

For organizations using AI in recruiting workflows that rely on code assessments, automated take-home tasks, or integration with DevOps toolchains, this change is consequential. Tools that evaluate candidate code increasingly route through the same vendor ecosystem that hosts the developer’s environment, and platform-level decisions at GitHub can ripple through hiring processes.

Anthropic’s rapid revenue growth and coding dominance

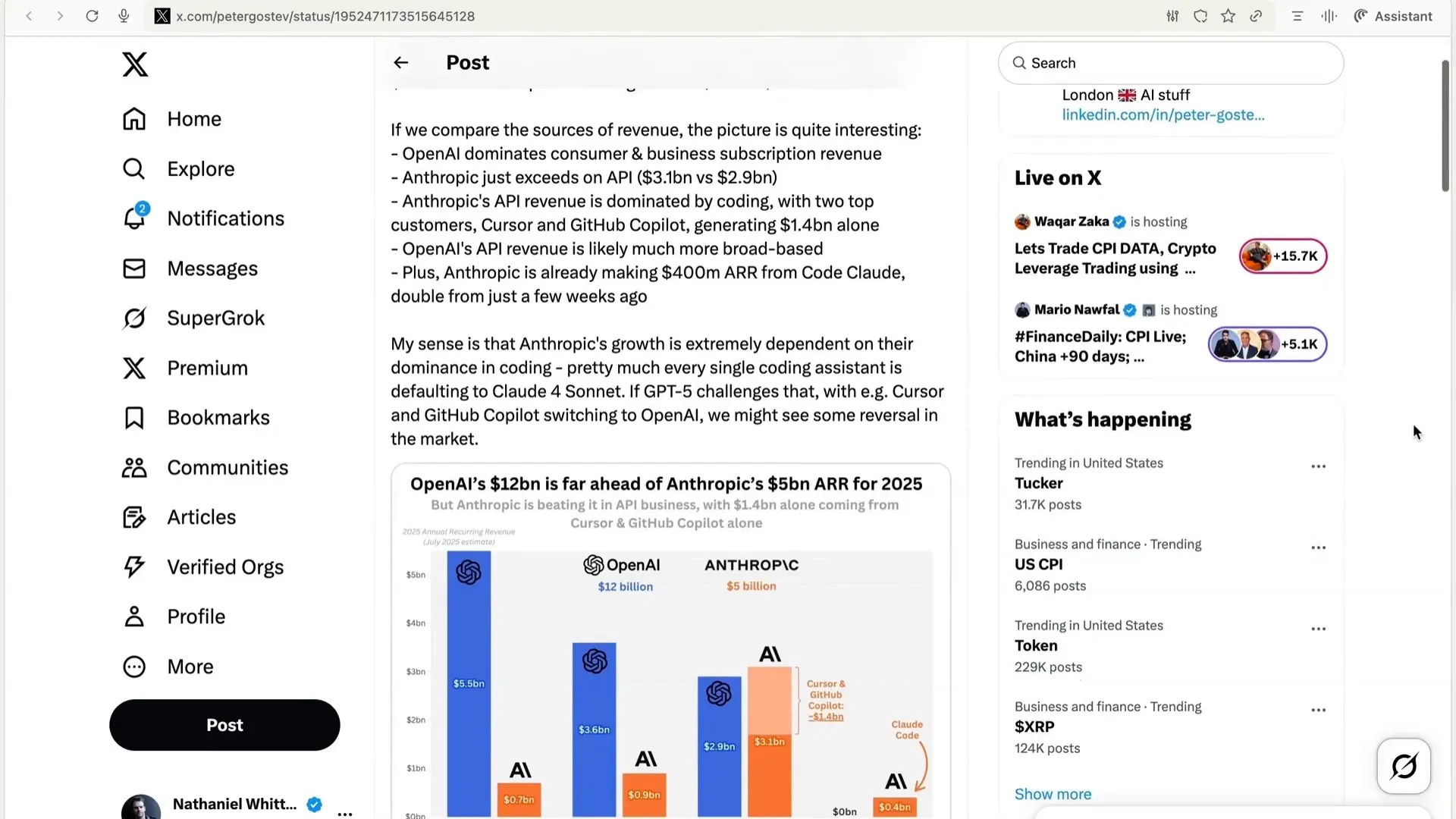

Anthropic recently announced a $5 billion annualized revenue run rate, largely driven by the adoption of its Claude 4 models for coding. Of that $5 billion, $3.1 billion reportedly came from API revenue, and about half of that API revenue came from two customers: Cursor and GitHub Copilot. In short, Anthropic’s current growth is tightly bound to the coding vertical.

This concentration poses both opportunities and risks. If major coding platforms switch their default model providers—for instance, moving from Anthropic’s Claude to OpenAI’s GPT offerings—that could rapidly reshape the competitive landscape and the economics of tools used across developer hiring and evaluation.
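To put the concentration in numbers, here is a rough sketch based on the reported revenue figures. It treats "about half" as exactly 50%, which is an assumption, so the outputs are approximate.

```python
# Reported figures; the 50% share is an assumption standing in for "about half".
total_run_rate_usd = 5.0e9     # annualized revenue run rate
api_revenue_usd = 3.1e9        # portion reported as API revenue
top_two_share_of_api = 0.5     # Cursor and GitHub Copilot combined

top_two_revenue_usd = api_revenue_usd * top_two_share_of_api
top_two_share_of_total = top_two_revenue_usd / total_run_rate_usd

print(f"Revenue from the two largest API customers: ${top_two_revenue_usd / 1e9:.2f}B")
print(f"Share of total run rate: {top_two_share_of_total:.0%}")
```

Under that assumption, roughly $1.55 billion, or about 31% of the total run rate, depends on two customers that could change their default model.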

Pricing, competition, and the tech stack for HR teams

Price reductions and aggressive strategies—like those tied to GPT-5’s competitive pricing—are aimed squarely at Anthropic and its coding foothold. For recruiters and hiring managers, pricing and model choice influence product availability and feature sets in candidate-facing tools. If a coding-assessment provider switches its underlying model, the performance, latency, and cost-per-assessment can change overnight.

That matters to HR teams for a few reasons:

- Operational cost: If a recruitment platform’s underlying model becomes more expensive, that cost can be passed along to employers or candidates, or the platform may throttle usage.

- Assessment quality: Different models have different strengths in code generation, reasoning, and error detection; a switch can alter the fairness and predictive validity of coding tests.

- Vendor lock-in and resilience: Heavy reliance on a single provider creates concentration risk—both a commercial risk and a policy risk if export controls or regulation shift access to certain models or chips.
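The operational-cost point can be made concrete with a small sensitivity check. The sketch below is illustrative only: the model names, per-token prices, token counts, and monthly volume are all placeholders I made up, not real vendor figures, and real platforms may price differently (per seat, per assessment, and so on).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Per-token pricing for one underlying model (hypothetical values)."""
    name: str
    usd_per_million_input_tokens: float
    usd_per_million_output_tokens: float


def cost_per_assessment(pricing: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Model cost in USD for scoring a single assessment."""
    return (
        input_tokens * pricing.usd_per_million_input_tokens
        + output_tokens * pricing.usd_per_million_output_tokens
    ) / 1_000_000


def monthly_cost(pricing: ModelPricing, assessments_per_month: int,
                 input_tokens: int, output_tokens: int) -> float:
    """Total monthly model cost in USD at a given assessment volume."""
    return assessments_per_month * cost_per_assessment(pricing, input_tokens, output_tokens)


# Placeholder numbers for illustration only; not real vendor prices.
MODEL_A = ModelPricing("model_a", 3.00, 15.00)
MODEL_B = ModelPricing("model_b", 1.25, 10.00)
INPUT_TOKENS, OUTPUT_TOKENS, VOLUME = 20_000, 4_000, 5_000

for model in (MODEL_A, MODEL_B):
    per_test = cost_per_assessment(model, INPUT_TOKENS, OUTPUT_TOKENS)
    per_month = monthly_cost(model, VOLUME, INPUT_TOKENS, OUTPUT_TOKENS)
    print(f"{model.name}: ${per_test:.3f} per assessment, ${per_month:,.2f} per month")
```

Swapping in a vendor's actual pricing and your own volume gives a quick read on how exposed your budget is to a model change. It says nothing about assessment quality, which has to be validated separately.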

AI in recruiting and the geopolitics of compute

Here is where the chip-export story intersects directly with hiring: modern AI systems—whether they power code assessment platforms, candidate-sourcing tools, or automated interview scoring—depend on compute, data, and model providers. If access to top-tier chips or cloud instances is affected by export policy or fees, the performance and cost structure of recruitment technologies can shift. That has real consequences for organizations trying to build or buy assessment pipelines that are fair, scalable, and affordable.

Put simply: supply-chain and geopolitical decisions about chips and AI infrastructure can cascade into the everyday software stack used for AI in recruiting.

That means talent teams need to think beyond feature lists and ask more strategic questions about vendor resilience, model provenance, and where the underlying compute comes from. Will the provider be subject to the same sovereign-level decisions? Could a future policy change make a popular assessment tool suddenly more expensive or unavailable in certain markets?

Practical guidance for recruiters and HR leaders

Whether you’re building in-house screening tools or buying off-the-shelf assessment platforms, here are practical steps to reduce risk:

- Ask vendors about model and infrastructure provenance. Which providers power the product? What are the fallback options?

- Check cost sensitivity. If a provider’s pricing changes, what’s the business impact on your volume of assessments or interviews?

- Design for fairness and explainability. Models optimized for coding or interview scoring should be validated for bias and predictive validity across different candidate populations.

- Plan for multimodel strategies. Where feasible, enable fallbacks so you’re not dependent on a single model or cloud provider.

- Monitor geopolitical developments. Export controls, licensing structures, and national policy changes can reshape the vendor landscape quickly.

Each of these steps helps HR teams navigate the emerging reality where policy, chip access, and platform economics intersect with the tools used for AI in recruiting.
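For teams building in-house, the multimodel idea from the list above might look something like the following. This is a minimal sketch, not a production design: the scorer functions are toy stand-ins for real provider calls, and every name in it is hypothetical.

```python
from typing import Callable, Sequence

# A scorer takes a candidate submission and returns a score between 0 and 1.
Scorer = Callable[[str], float]


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


def score_with_fallback(
    submission: str, providers: Sequence[tuple[str, Scorer]]
) -> tuple[str, float]:
    """Try each provider in order and return (provider_name, score) from the first success."""
    errors = []
    for name, scorer in providers:
        try:
            return name, scorer(submission)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Toy stand-ins for real model calls (hypothetical).
def primary_scorer(submission: str) -> float:
    raise TimeoutError("primary provider unavailable")


def backup_scorer(submission: str) -> float:
    return 0.8 if "def " in submission else 0.2


used, score = score_with_fallback(
    "def add(a, b): return a + b",
    [("primary", primary_scorer), ("backup", backup_scorer)],
)
print(f"Scored by {used}: {score}")
```

Returning the provider name alongside the score matters for fairness: if the backup model scores candidates differently from the primary, you need to know which candidates were scored by which model, and each model should be validated on its own.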

Where we go from here

The NVIDIA/AMD deal—if it becomes a playbook—could normalize a new variety of sovereign-commercial bargaining over access to markets and technology. That could make costs more predictable for investors, but it could also entrench incumbents and create risks for smaller firms. For anyone building or buying recruitment technology, the lesson is clear: the AI stack is not just a software decision. It’s a geopolitical and supply-chain decision too.

As AI tools proliferate into hiring—from automated résumé screening to coding assessment and video-based interview analytics—practitioners should treat the underlying compute, model provenance, and vendor concentration as first-order risks. Put another way, the same forces that shaped this chip-export negotiation will shape the tools that increasingly define modern hiring. The practical implications for teams using AI in recruiting are immediate: think resilience, transparency, and fairness as part of your procurement and vendor-management processes.

Final thoughts

We’re in an era where policy, product, and platform strategy all collide. From the reported 15% revenue concession to the U.S. government to the reorganization of GitHub inside Microsoft and Anthropic’s revenue concentration in coding, the storylines converge around one idea: control of compute and models translates into strategic advantage. For HR and talent leaders, that control influences the tools you use day to day for AI in recruiting.

If you found this analysis helpful, keep watching the space, ask vendors hard questions, and design your hiring systems with an eye toward both technological performance and geopolitical resilience. The decisions you make now about model selection, vendor diversity, and assessment validation will determine how robust and fair your hiring practices remain as the AI landscape continues to evolve.