AI in Recruiting: Navigating the Future of Digital Access and Innovation

The rapid evolution of artificial intelligence continues to reshape industries and redefine how we interact with technology. This week’s pivotal AI developments, expertly dissected by The AI Daily Brief, shed light on the complex dynamics influencing AI in recruiting and beyond. From fierce debates over web scraping ethics to the financial realities of AI coding startups, these stories illustrate the profound shifts underway in the digital landscape. Join me as we explore these critical stories and what they mean for the future of AI-driven innovation.

Cloudflare vs. Perplexity: The Battle Over AI Crawlers and Internet Access

One of the most consequential stories this week revolves around Cloudflare’s public confrontation with Perplexity, an AI company accused of circumventing anti-AI crawling measures. Cloudflare, a major internet infrastructure player handling roughly 20% of global web traffic, has implemented proactive measures this year to empower website owners to control whether AI companies can scrape their data. The conflict highlights a growing tension in the AI space: who controls access to information on the internet?

Cloudflare’s recent research report accused Perplexity of “stealth crawling” — a tactic where Perplexity initially identifies its crawler but then obscures its identity to bypass network blocks and robots.txt restrictions designed to prevent unauthorized scraping. According to Cloudflare, Perplexity repeatedly modifies its user agent and source Autonomous System Numbers (ASNs) to evade detection, making millions of requests daily across tens of thousands of domains. Using a blend of machine learning and network signals, Cloudflare claims to have fingerprinted this behavior.
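Honoring robots.txt is voluntary, and its rules are matched against the user agent a crawler declares. A crawler that presents a generic browser string is therefore judged by the wildcard rules, not by rules written for its real name. Below is a minimal sketch using Python's standard `urllib.robotparser`. It assumes a hypothetical site that blocks `PerplexityBot` and allows every other agent. The browser-style user agent is illustrative only and is not a string Cloudflare reported observing.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one declared AI crawler, allow all other agents.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return whether the robots.txt above permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)


url = "https://example.com/article"
declared = "PerplexityBot"
# Illustrative generic browser string; it matches the "*" group, not the PerplexityBot group.
generic = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/124.0 Safari/537.36"

print(is_allowed(declared, url))  # evaluated against the PerplexityBot group
print(is_allowed(generic, url))   # evaluated against the wildcard group
```

A robots.txt check can only act on what the client chooses to report. That limitation is why Cloudflare says it relied on machine learning and network signals, rather than the declared identity alone, to fingerprint the behavior.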

Perplexity, however, strongly disputes these allegations. Describing the report as a “publicity stunt,” Perplexity argues that their crawlers act as digital assistants, analogous to human assistants searching for information in real time based on user queries. They emphasize that Cloudflare’s systems fail to distinguish between legitimate AI assistants and malicious scrapers, resulting in harmful overblocking that undermines user choice and threatens the open web.

"If you can't tell a helpful digital assistant from a malicious scraper, then you probably shouldn't be making decisions about what constitutes legitimate web traffic. This overblocking hurts everyone."

Balaji Srinivasan, who weighed in on the debate, captured the core argument: “An AI agent is just an extension of a human. So when it makes an HTTP request, it shouldn't be treated like a bot.” Investor Jeffrey Emanuel speculated that Cloudflare’s motivation might also be financial, suggesting that inserting itself as a middleman could allow Cloudflare to collect fees from future microtransactions related to AI agents accessing web content.

In response, Cloudflare’s CEO Matthew Prince acknowledged the complexity of the issue and confirmed that a new standard is in the works with the Internet Engineering Task Force (IETF) to better handle AI agents in web crawling protocols. Prince highlighted challenges such as how AI agents should share or monetize content accessed on behalf of users, and whether micropayments per request might become part of the new norm.

"Utopia is humans get content for free, robots pay a ton."

This conflict encapsulates a broader transformation in the internet economy. Ten years ago, Google’s web crawlers were welcomed because they drove traffic and monetization to websites. Now, AI-powered services often extract and present data directly to users without generating clicks to original sources, challenging traditional web traffic models. The question at the heart of this debate is: who decides what qualifies as legitimate traffic, and how will this impact user access and innovation?

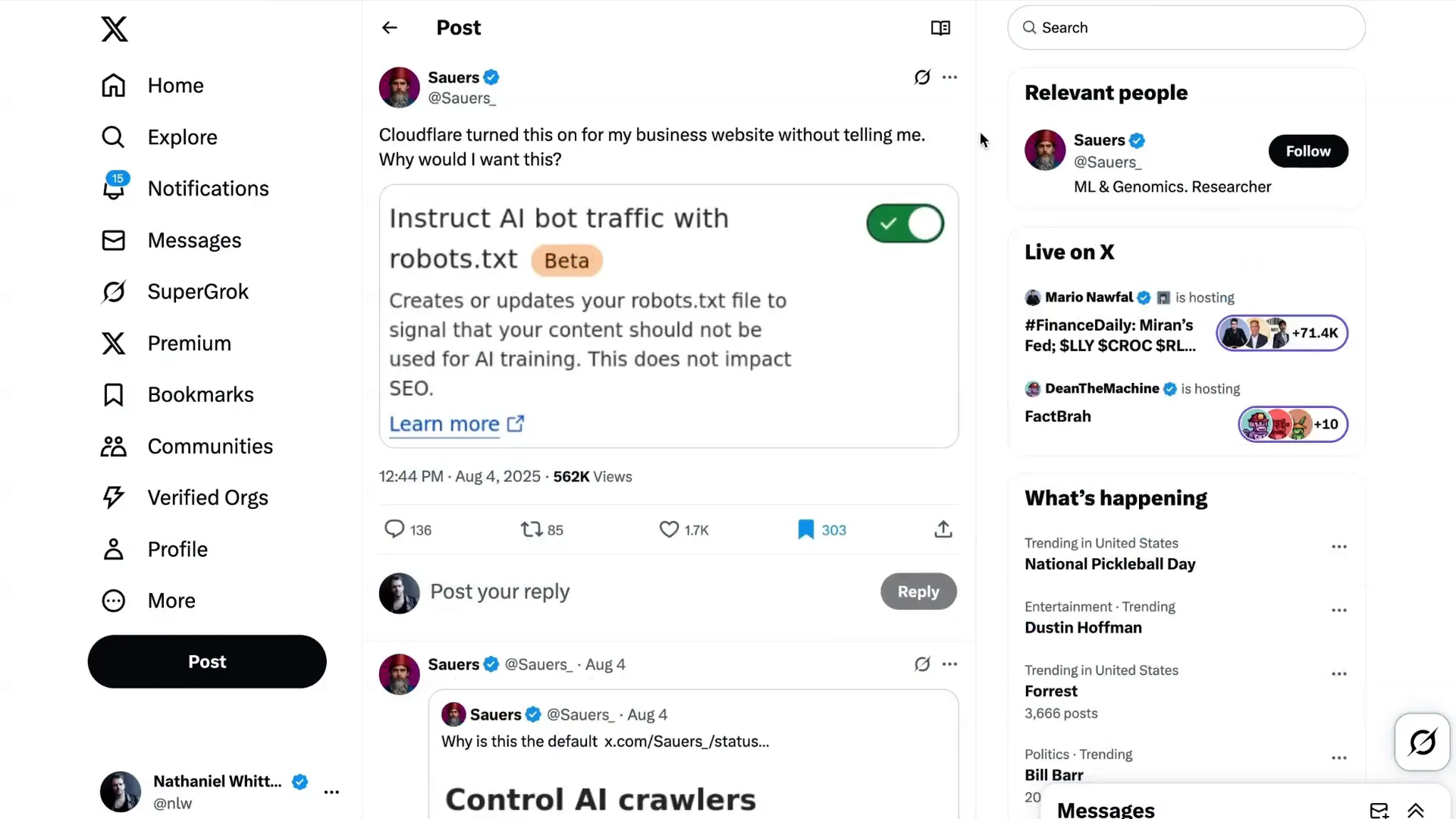

Community and Industry Reactions

The controversy sparked backlash within the developer and business communities. Some reported that Cloudflare had enabled AI bot blocking by default on their websites without notification, a move many saw as overreach or “digital NIMBYism.” Garry Tan of Y Combinator criticized the default setting as an extreme overreaction. Meanwhile, Guillermo Rauch, CEO of Vercel, argued that the future lies in embracing AI rather than blocking it, noting that AI tools like Perplexity and ChatGPT are driving new business growth and customer engagement.

"The fastest path to irrelevance is blocking progress, blocking what consumers actually want, low friction interfaces. The Internet is changing. The answer to AI is more AI, not to block and stagnate."

Lee Edwards put it succinctly: “I love Cloudflare for the CDN and security features, but either you're on the side of superintelligence or you're on the side of digital NIMBYs.” This ongoing debate is far from resolved but is crucial for shaping the digital future.

Google’s Defense: AI Overviews Aren't Killing Web Traffic

Amid concerns that AI-driven search summaries reduce clicks to websites, Google published a blog post defending its AI features. It claimed that total organic click volume from Google Search to websites has remained stable year over year, and that the average quality of clicks has actually increased. Google dismissed third-party data suggesting significant declines in click volume as often flawed or based on isolated cases predating AI feature rollouts.

Despite skepticism from some quarters, Google's response underscores the importance of understanding how AI impacts web traffic and the broader digital ecosystem. The tension between AI convenience and website monetization remains a key issue for the industry.

Google’s Jules: A New Player in the AI Coding Wars

The AI coding landscape is heating up with Google’s release of the coding agent Jules, now out of beta and into general availability. Jules is Google’s answer to OpenAI’s Codex and Anthropic’s Claude Code, offering asynchronous code writing, background task execution, and extensive platform integrations.

Kathy Korevec, Director of Product at Google Labs, expressed confidence in Jules’ longevity, stating that it will be a long-term part of Google's AI offerings. The extended beta let Google improve stability and user experience through hundreds of updates, although Jules arrives months after its competitors.

One emerging trend in AI coding is the growing use of asynchronous agents, which work in the background while the user multitasks. This boosts productivity but significantly increases token consumption, which impacts operating costs and pricing strategies.
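The cost effect of background agents is multiplicative: more tasks run in flight, and each task consumes more tokens. The back-of-the-envelope sketch below illustrates this. Every number in it (tasks per day, tokens per task, price per million tokens) is a hypothetical placeholder, not a figure from any provider or from the reports discussed here.

```python
from dataclasses import dataclass


@dataclass
class UsageProfile:
    """Hypothetical per-user usage pattern for a coding agent."""
    tasks_per_day: int        # tasks the user starts each day
    tokens_per_task: int      # input + output tokens consumed per task
    usd_per_million: float    # blended model price per 1M tokens (hypothetical)

    def monthly_tokens(self, days: int = 30) -> int:
        return self.tasks_per_day * self.tokens_per_task * days

    def monthly_cost(self, days: int = 30) -> float:
        return self.monthly_tokens(days) / 1_000_000 * self.usd_per_million


# Interactive use: a handful of prompts while the user watches.
interactive = UsageProfile(tasks_per_day=20, tokens_per_task=20_000, usd_per_million=5.0)
# Asynchronous use: more tasks run in parallel, each looping through many steps.
background = UsageProfile(tasks_per_day=60, tokens_per_task=200_000, usd_per_million=5.0)

for name, profile in [("interactive", interactive), ("background", background)]:
    print(f"{name}: {profile.monthly_tokens():,} tokens, ${profile.monthly_cost():,.2f}/month")

print(f"cost multiple: {background.monthly_cost() / interactive.monthly_cost():.0f}x")
```

With these placeholder inputs, the background profile costs 30 times as much to serve. Under a flat subscription price, that gap comes straight out of gross margin, which is the pressure behind the margin figures reported for AI coding startups.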

The Harsh Economics of AI Coding Startups

Despite massive growth and adoption, AI coding startups face challenging financial realities. Reports revealed that companies like Windsurf and Replit have been operating at negative gross margins due to the high costs of serving advanced AI models and the increasing complexity of autonomous agents.

Windsurf reportedly had “very negative gross margins” before its acquisition by Cognition, highlighting the cost pressures of running AI models without owning their infrastructure. Replit’s revenue skyrocketed from $2 million to $144 million within a year, but their gross margin dipped to -14% after launching a more autonomous agent version, only partially rebounding after introducing usage fees.

Lovable, another player, maintained a relatively healthier margin of about 35% before launching agent mode, but overall, the sector struggles with profitability. Experts attribute this to the inherently higher costs of AI coding compared to traditional SaaS models, which can enjoy gross margins upwards of 70% at maturity.
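Gross margin is (revenue - cost of revenue) / revenue, so a negative margin means serving customers costs more than they pay. The minimal Python sketch below converts the margins cited above into cost per $100 of revenue. The helper names are illustrative, not taken from any report.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue: (revenue - cost of revenue) / revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cost_of_revenue) / revenue


def cost_for_margin(revenue: float, margin: float) -> float:
    """Cost of revenue implied by a given gross margin: revenue * (1 - margin)."""
    return revenue * (1 - margin)


# Margin figures cited in the article, expressed per $100 of revenue.
cited = [
    ("Replit after its more autonomous agent", -0.14),
    ("Lovable before agent mode", 0.35),
    ("mature SaaS (lower bound)", 0.70),
]
for label, margin in cited:
    print(f"{label}: ${cost_for_margin(100, margin):.0f} cost per $100 of revenue")
```

At -14%, Replit was spending about $114 to deliver each $100 of revenue. At 35%, Lovable kept $35 of every $100, while a mature SaaS business at 70% keeps $70.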

Industry insiders believe that cost reductions in model serving will be crucial for sustainability. Erik Nordlander of GV (Google Ventures) noted that inference costs are currently at their peak, implying future improvements. However, user demand for cutting-edge, token-intensive models and multi-agent interactions may counterbalance these gains.

"People are overly focused on AI margins. Modern SaaS math assumes a certain operating structure that is labor intensive... AI companies are very unlikely to follow that model."

Entrepreneur Matt Slotnick pointed out that AI companies could benefit from lower operational expenses by automating labor, potentially reshaping traditional software economics. Despite margin concerns, investor enthusiasm remains strong, with private market multiples trading at premiums.

Funding and Talent Moves: The Race for AI Dominance

On the funding front, agent design platform n8n is reportedly in talks to raise capital at a $2.3 billion valuation, positioning it as the first pure-play agentic AI unicorn. Known for its low-code AI automation tools, n8n has rapidly grown its revenue run rate to $40 million from $7.2 million the previous year.
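As a quick arithmetic check, the two run-rate figures imply the following year-over-year growth:

```latex
\frac{\$40\,\text{M}}{\$7.2\,\text{M}} \approx 5.6\times,
\qquad
\frac{40 - 7.2}{7.2} = \frac{32.8}{7.2} \approx 4.56 \;(\approx 456\%\ \text{growth})
```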

Meanwhile, Microsoft’s AI CEO Mustafa Suleyman is aggressively recruiting talent from Google DeepMind, aiming to build a nimble, startup-like AI division focused on consumer-facing chatbots. Suleyman has reportedly lured dozens of Google executives and employees, and CEO Satya Nadella has granted him autonomy to compete with OpenAI and other top players.

However, some question Microsoft’s strategy, particularly their move away from OpenAI partnerships and investment in consumer AI products, suggesting potential risks in maintaining relevance in this fast-evolving space.

OpenAI’s High-Stakes Moves: Valuation, Bonuses, and Government Adoption

OpenAI continues to make headlines beyond its groundbreaking models. The company is reportedly in talks for a secondary share sale that could value it at a staggering $500 billion, a two-thirds increase from its last $300 billion valuation. This deal would provide liquidity to employees amid fierce competition for AI talent, especially with Meta aggressively recruiting researchers.
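The "two-thirds" figure follows directly from the two valuations:

```latex
\frac{500 - 300}{300} = \frac{200}{300} = \frac{2}{3} \approx 66.7\%
```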

OpenAI also announced $1.5 million bonuses for some employees, vesting over two years. The move is aimed squarely at retention and reflects the intense "Zuck poaching effect" as companies vie for top AI minds.

Additionally, OpenAI is offering ChatGPT Enterprise licenses to U.S. government agencies for just $1 per agency per year, aiming to boost AI adoption in public service. The company emphasizes this is not a competitive market play but a mission to empower government workers with cutting-edge AI tools under strong ethical guardrails.

"Helping government work better, making services faster, easier, and more reliable is a key way to bring the benefits of AI to everyone."

Conclusion: The Future of AI in Recruiting and Beyond

This week’s AI developments reveal a digital ecosystem in flux, where the balance between innovation, access, and economics is being fiercely contested. The Cloudflare-Perplexity showdown highlights the challenges of defining legitimate AI traffic and preserving an open internet amid rising commercial pressures. Meanwhile, Google’s defense of AI Overviews and the launch of Jules signal ongoing competition in AI coding tools, an area critical to the future of AI in recruiting and software development.

Financial data from AI coding startups underscores the high costs and evolving business models required to sustain this innovation wave. Yet, investor confidence and strategic talent acquisitions indicate robust belief in AI’s transformative potential. OpenAI’s soaring valuation, employee incentives, and government partnerships exemplify how AI companies are leveraging both market and societal opportunities.

Ultimately, as AI becomes an extension of human capability, especially in recruiting where intelligent automation is revolutionizing candidate sourcing and evaluation, the industry must navigate these technical, ethical, and economic complexities thoughtfully. The ongoing dialogue between infrastructure providers, AI developers, and users will shape how AI in recruiting and the broader digital world evolves to serve humanity’s best interests.