How We Build Effective Agents: Insights on AI in Recruiting and Beyond

Agents, meaning autonomous systems that make decisions and take actions, have become a focal point for innovation in artificial intelligence. Barry Zhang of Anthropic shares practical strategies and considerations for building effective agents that can help organizations harness AI in recruiting and other complex domains. Drawing on his experience, including his role as a tech lead at Meta and his current work at Anthropic, Barry outlines three core principles every AI builder should keep in mind: don’t build agents for everything, keep it simple, and think like your agents.

Whether you’re exploring AI in recruiting or developing other agentic systems, understanding these foundational ideas will empower you to create more capable, reliable, and scalable AI solutions. Let’s unpack Barry's approach and explore how these principles can transform the way we build agents today.

From Simple Models to Complex Agents: The Evolution of AI Systems

The journey to building effective agents often begins with simple AI features such as summarization, classification, and extraction. Just a few years ago these felt like magic; today they are basic expectations in many AI products.

However, as the demand for smarter AI solutions grew, developers found that a single model call often couldn’t handle the complexity of real-world tasks. This led to orchestrating multiple model calls in predefined control flows, known as workflows. Workflows let teams trade cost and latency for better performance while keeping the system under explicit control.
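To make the idea of a workflow concrete, here is a minimal sketch assuming a hypothetical `call_model` function (any callable from prompt text to response text) and a deterministic `fake_model` stub used only for demonstration. Every step and branch is fixed in code: the model fills in each step’s output but never chooses what happens next.

```python
from typing import Callable, Dict

# Hypothetical stand-in for an LLM call: takes a prompt, returns text.
ModelFn = Callable[[str], str]


def run_workflow(document: str, call_model: ModelFn) -> Dict[str, str]:
    """A fixed, developer-defined sequence of model calls (a workflow)."""
    # Step 1: extract the key facts from the input.
    facts = call_model(f"Extract the key facts:\n{document}")

    # Step 2: classify the input using the extracted facts.
    label = call_model(f"Classify as 'urgent' or 'routine':\n{facts}").strip().lower()

    # Step 3: a programmatic gate picks the final prompt; the branch lives in code.
    if label == "urgent":
        summary = call_model(f"Write a one-line escalation note:\n{facts}")
    else:
        summary = call_model(f"Write a one-line summary:\n{facts}")

    return {"facts": facts, "label": label, "summary": summary}


def fake_model(prompt: str) -> str:
    """Deterministic stand-in for a model, for illustration only."""
    if prompt.startswith("Extract"):
        return "server down since 9am"
    if prompt.startswith("Classify"):
        return "Urgent"
    last_line = prompt.splitlines()[-1]
    return ("ESCALATE: " if "escalation" in prompt else "SUMMARY: ") + last_line


if __name__ == "__main__":
    print(run_workflow("The server has been down since 9am.", fake_model))
```

Because the path through the steps is known in advance, cost and latency per request are predictable, which is exactly what an agent gives up in exchange for flexibility.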

Today, we are witnessing the rise of true agents—systems that go beyond fixed workflows to make decisions independently, guided by feedback from their environments. These agents operate with a degree of autonomy, deciding their own trajectories rather than following rigid paths. This marks a significant leap forward in AI capabilities.

Looking ahead, the future of agentic systems is still unfolding. Single agents may become more general-purpose and capable, or we might see the emergence of multi-agent collaborations where multiple agents delegate tasks and work together seamlessly. The key trend is clear: as we grant AI systems more agency, they become more useful but also introduce higher costs, increased latency, and greater risks associated with errors.

Don’t Build Agents for Everything: Choosing the Right Use Cases

One of the most important lessons Barry emphasizes is that agents are not a universal solution. They are best suited for scaling complex and valuable tasks—problems that are ambiguous and difficult to map out explicitly.

Why avoid building agents for every use case?

- Cost and Complexity: Agents exploring ambiguous problem spaces consume a lot of tokens, which translates to higher operational costs.

- Control: For tasks with clear decision trees, explicitly coding workflows is more cost-effective and provides greater control.

- Risk Management: High-stakes tasks where errors are difficult to detect require cautious deployment and often limited agent autonomy.

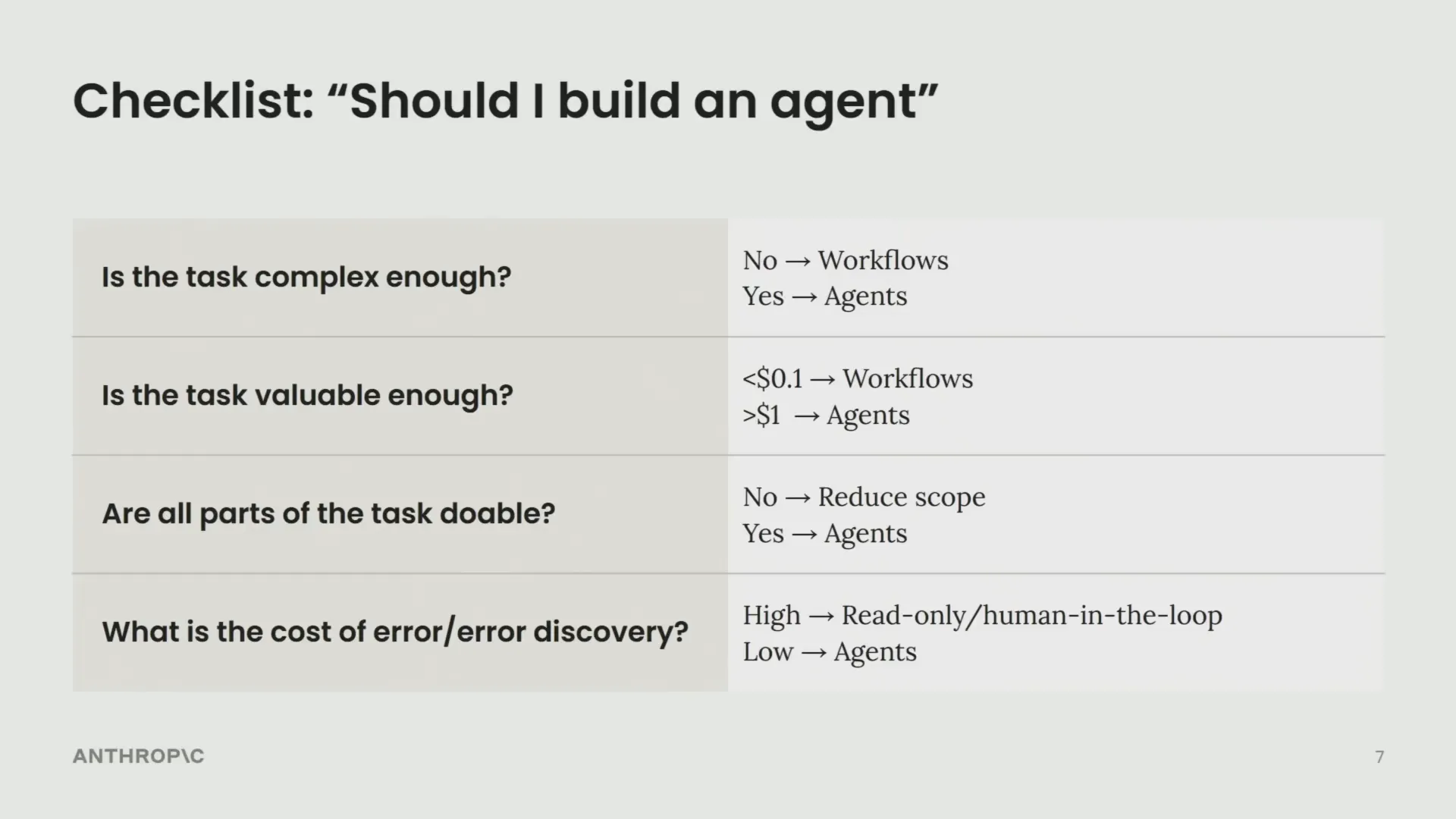

Barry provides a practical checklist to help determine when building an agent makes sense:

- Task Complexity: Does the task involve ambiguity that can’t be easily mapped out? If yes, agents may be appropriate.

- Task Value: Does the value of the task justify the cost of exploration and token usage? High-value tasks like coding or complex problem solving fit well.

- Critical Capabilities: Can the agent reliably perform essential functions without bottlenecks? For example, a coding agent must write, debug, and recover from errors effectively.

- Error Consequences: Are errors manageable and discoverable? If not, consider limiting the agent’s scope or adding human oversight.
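The checklist can be encoded as a small decision helper. This is a minimal sketch: `UseCase`, `recommend`, the dollar figures, and the exact mapping from answers to recommendations are illustrative assumptions, not a rule Barry states.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Answers to the checklist for one candidate use case (all fields hypothetical)."""
    ambiguous: bool               # Task complexity: hard to map out as an explicit decision tree?
    value_per_task_usd: float     # Task value: what one successful run is worth to you
    est_cost_per_task_usd: float  # Estimated token cost of letting an agent explore
    capabilities_ok: bool         # Critical capabilities: core steps work without bottlenecks?
    errors_discoverable: bool     # Error consequences: are mistakes cheap to notice and fix?


def recommend(case: UseCase) -> str:
    """Turn the checklist answers into a rough recommendation (a heuristic, not a rule)."""
    if not case.ambiguous:
        return "workflow"  # a clear decision tree is cheaper and easier to control in code
    if case.value_per_task_usd < case.est_cost_per_task_usd:
        return "workflow"  # exploration would cost more than the task is worth
    if not case.capabilities_ok:
        return "not yet: address capability bottlenecks first"
    if not case.errors_discoverable:
        return "agent with limited scope or human oversight"
    return "agent"


coding = UseCase(True, 5.00, 0.50, True, True)       # illustrative numbers only
screening = UseCase(False, 0.10, 0.50, True, False)
print(recommend(coding), "|", recommend(screening))  # agent | workflow
```

The order of the checks matters: a task that is not ambiguous never reaches the cost question, mirroring the advice that clear decision trees belong in workflows regardless of value.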

For instance, AI in recruiting often involves high volumes of relatively straightforward decisions, such as screening resumes or answering common candidate queries. In such scenarios, workflows might be more efficient and cost-effective than fully autonomous agents.
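Here is a minimal sketch of such a workflow for common candidate queries, assuming a hypothetical set of topics and canned answers (`ROUTES`, `CANNED_ANSWERS`, and `route_candidate_query` are illustrative names, not part of any product). The routing is an explicit decision tree written in code, so it is cheap to run and easy to audit, and anything it cannot classify goes to a person. A single model call could replace the keyword match while the control flow stays fixed.

```python
from typing import Dict, List, Tuple

# Hypothetical canned answers a recruiting team might maintain.
CANNED_ANSWERS: Dict[str, str] = {
    "status": "Thanks for checking in! Your application is still under review.",
    "salary": "The salary range is listed in the job posting.",
    "remote": "This role's work arrangement is described in the job posting.",
}

# An explicit decision tree: keyword rules the team can read, test, and audit.
ROUTES: List[Tuple[Tuple[str, ...], str]] = [
    (("status", "update", "heard back"), "status"),
    (("salary", "compensation", "pay"), "salary"),
    (("remote", "hybrid", "on-site", "onsite"), "remote"),
]


def route_candidate_query(query: str) -> Tuple[str, str]:
    """Return (topic, reply); anything the rules do not cover goes to a recruiter."""
    text = query.lower()
    for keywords, topic in ROUTES:
        if any(keyword in text for keyword in keywords):
            return topic, CANNED_ANSWERS[topic]
    return "human", "Forwarded to a recruiter."


if __name__ == "__main__":
    print(route_candidate_query("Any update on my application?"))
```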

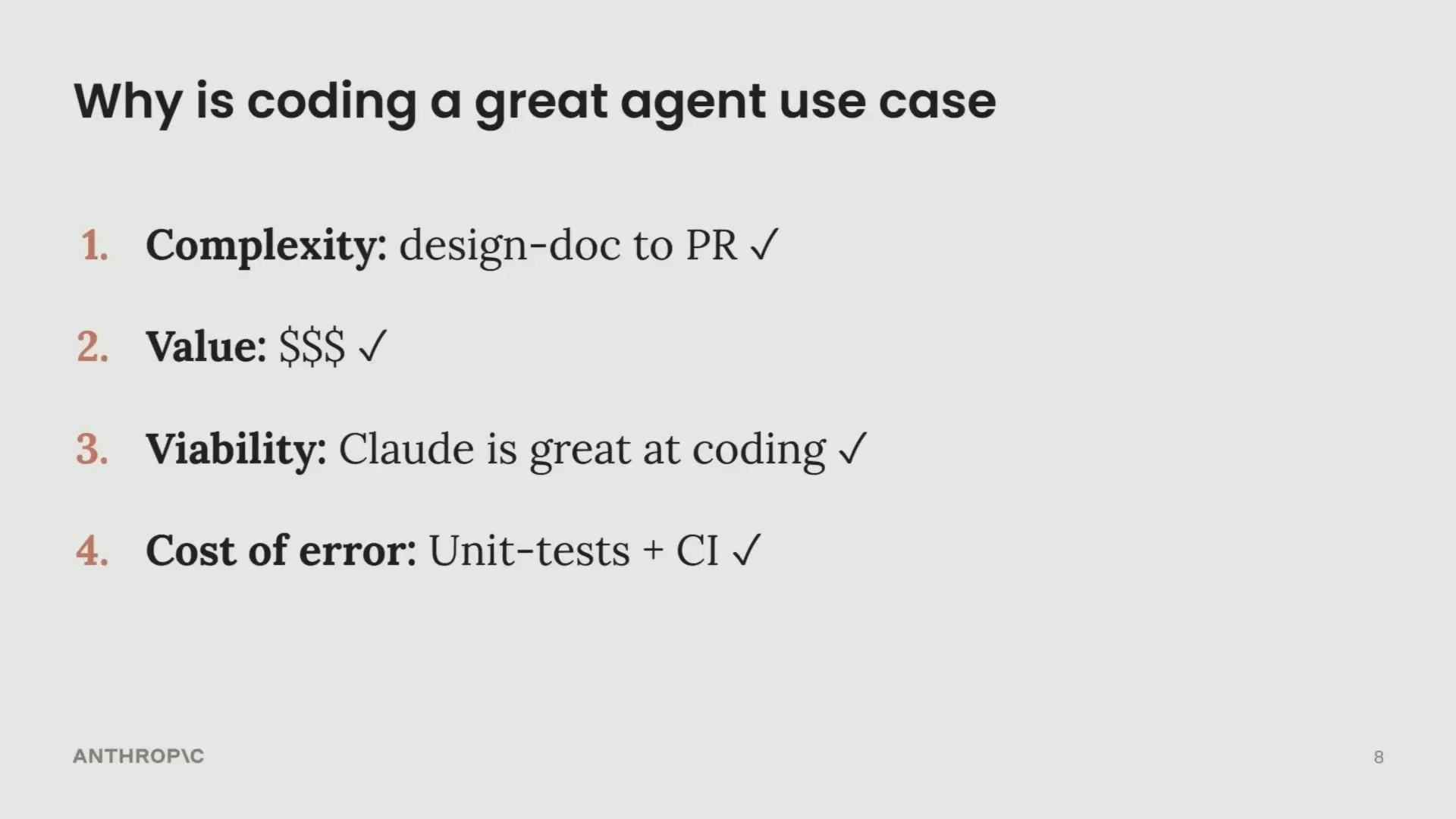

Conversely, in a coding context—a prime example Barry highlights—the task is inherently ambiguous and complex. The value of good code is high, and the output can be verified through unit tests and continuous integration, making it an ideal candidate for agent deployment.

Keep It Simple: The Backbone of Effective Agents

Once you’ve identified a suitable use case for an agent, the next principle is to keep the system as simple as possible, especially during early iterations. Complexity upfront can kill iteration speed and slow down progress.

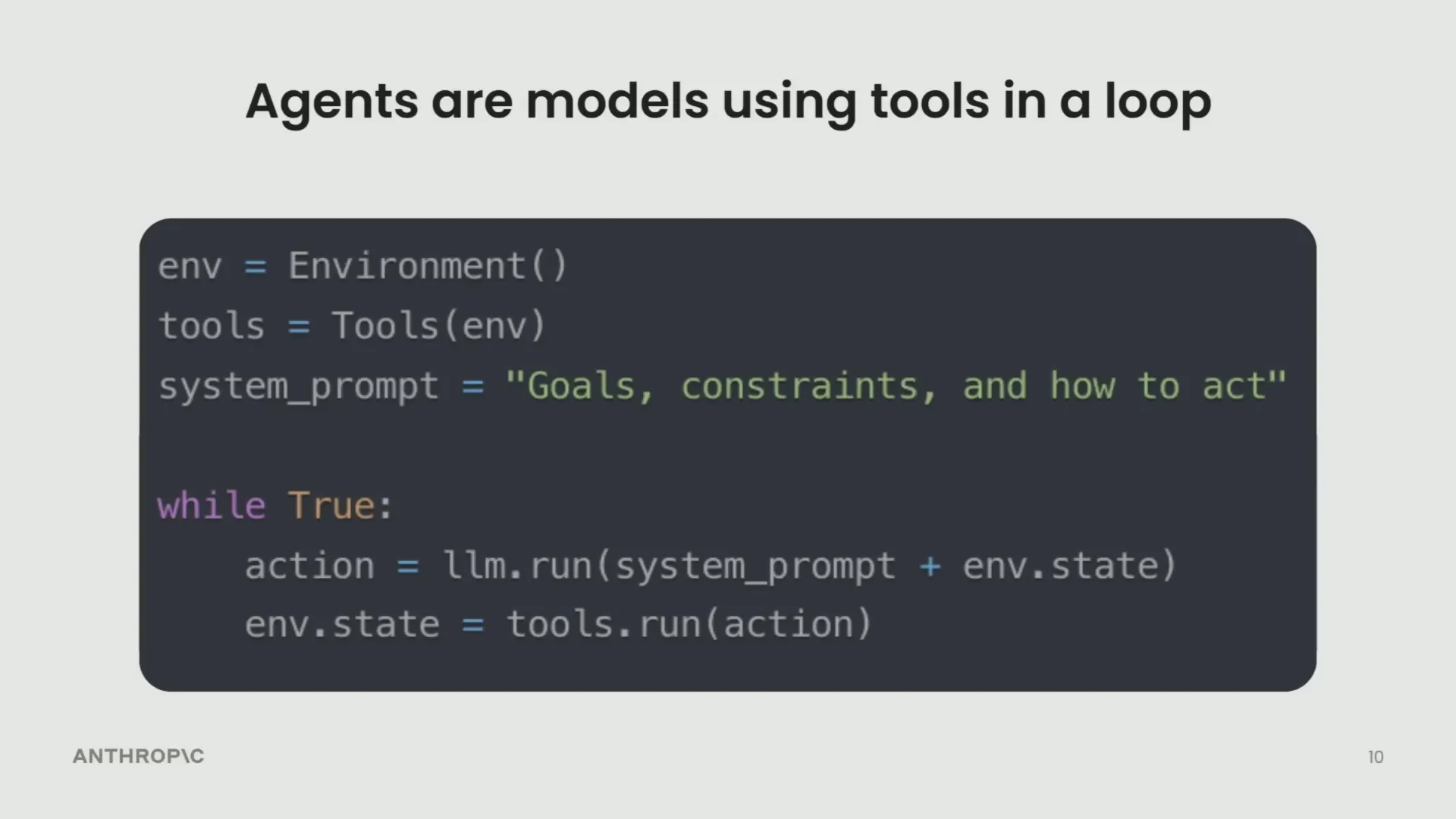

Barry breaks down an agent into three fundamental components:

- The Environment: The system or context in which the agent operates.

- Tools: Interfaces or mechanisms that the agent uses to take actions and receive feedback.

- The System Prompt: Instructions defining the agent’s goals, constraints, and ideal behaviors.

An agent is essentially a model running in a loop: it takes an action through a tool, observes the environment’s response, and decides what to do next, with the system prompt shaping every decision. This loop is the core of agentic behavior.
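The loop can be sketched in a few lines. This is a minimal, self-contained sketch assuming a hypothetical `policy` function in place of a real model call and a toy in-memory file store as the environment; `Action`, `run_agent`, and `toy_policy` are illustrative names, not an Anthropic API. What matters is the shape: the model, not the developer’s code, decides which tool to call next and when to stop.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Action:
    """What the model decides to do next (hypothetical shape)."""
    tool: Optional[str] = None  # tool to call, or None when the agent is finished
    argument: str = ""
    answer: str = ""


Tool = Callable[[str], str]
# Hypothetical model interface: sees the system prompt and everything observed so
# far, and returns the next action. A real version would call an LLM here.
Policy = Callable[[str, List[str]], Action]


def run_agent(system_prompt: str, tools: Dict[str, Tool], policy: Policy,
              task: str, max_steps: int = 10) -> str:
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action = policy(system_prompt, history)            # the model picks the next step
        if action.tool is None:
            return action.answer                           # the model decided it is done
        observation = tools[action.tool](action.argument)  # act on the environment
        history.append(f"{action.tool}({action.argument}) -> {observation}")  # feedback
    return "stopped: step limit reached"


# Toy environment and policy, purely for illustration.
files = {"notes.txt": "meeting moved to 3pm"}
tools: Dict[str, Tool] = {"read_file": lambda path: files.get(path, "file not found")}


def toy_policy(system_prompt: str, history: List[str]) -> Action:
    if len(history) == 1:                                  # nothing observed yet: look first
        return Action(tool="read_file", argument="notes.txt")
    return Action(answer=history[-1].split(" -> ")[-1])    # answer from the last observation


if __name__ == "__main__":
    print(run_agent("Answer using the files.", tools, toy_policy, "When is the meeting?"))
```

The `max_steps` cap is a safeguard added for this sketch; without some limit, a confused agent could loop indefinitely.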

Barry stresses that optimizing these foundational elements first yields the highest return on investment. Once a solid behavior is established, you can then explore optimizations such as:

- Caching agent trajectories to reduce token costs

- Parallelizing tool calls to reduce latency, especially in search applications

- Designing user interfaces that clearly communicate the agent's progress to build trust
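Parallelizing independent tool calls is straightforward when tools are asynchronous. A minimal sketch, assuming a hypothetical async `search` tool whose `asyncio.sleep` stands in for network latency: `asyncio.gather` runs the calls concurrently and returns results in the order the calls were passed, so total latency is roughly that of the slowest call rather than the sum of all of them.

```python
import asyncio
import time
from typing import List


async def search(query: str) -> str:
    """Hypothetical search tool; the sleep simulates network latency."""
    await asyncio.sleep(0.1)
    return f"results for {query!r}"


async def run_tool_calls_in_parallel(queries: List[str]) -> List[str]:
    # gather schedules all calls concurrently and preserves input order in its results
    return list(await asyncio.gather(*(search(q) for q in queries)))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(run_tool_calls_in_parallel(["agents", "workflows", "MCP"]))
    print(results)
    print(f"elapsed: {time.perf_counter() - start:.2f}s")  # roughly 0.1s, not 0.3s
```

This only helps when the calls are independent; if one search depends on another’s result, they still have to run in sequence.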

Interestingly, Barry points out that diverse agent use cases—whether for coding, search, or computer interaction—often share almost the same underlying codebase. The key differences lie in the environment setup, the tools provided, and the system prompt used to guide the agent.

For builders interested in tools, Barry recommends attending workshops like the Model Context Protocol (MCP) session by his colleague Mahesh, which dives into how tools interface with agents effectively.

Think Like Your Agents: Bridging the Human-Agent Perspective Gap

One of the most insightful pieces of advice Barry offers is to adopt the perspective of your agent. Many developers build agents based on their own human intuition, which can lead to confusion when agents behave unexpectedly or make mistakes that seem illogical to humans.

Agents operate within strict limitations: at each step they act only on what is in their context, often just 10,000 to 20,000 tokens, and everything the model knows about the current state of the world has to be conveyed there. Understanding what the agent "sees" and "knows" at each step helps bridge the gap between human expectations and agent behavior.
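The sketch below shows how anything outside that budget simply does not exist for the agent. It is a minimal illustration with hypothetical helpers (`estimate_tokens`, `visible_context`) and a crude assumption of about four characters per token; real systems use a proper tokenizer and often summarize old history rather than dropping it.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Rough heuristic (an assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def visible_context(system_prompt: str, history: List[str], budget: int) -> List[str]:
    """Return what the model 'sees' this step: the system prompt plus the newest
    history entries that still fit in the token budget. Older entries are dropped."""
    used = estimate_tokens(system_prompt)
    kept: List[str] = []
    for message in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [system_prompt] + list(reversed(kept))
```

If a key instruction or observation falls off the front of this window, the agent’s next decision can look illogical to a human who remembers it, which is precisely the perspective gap described above.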

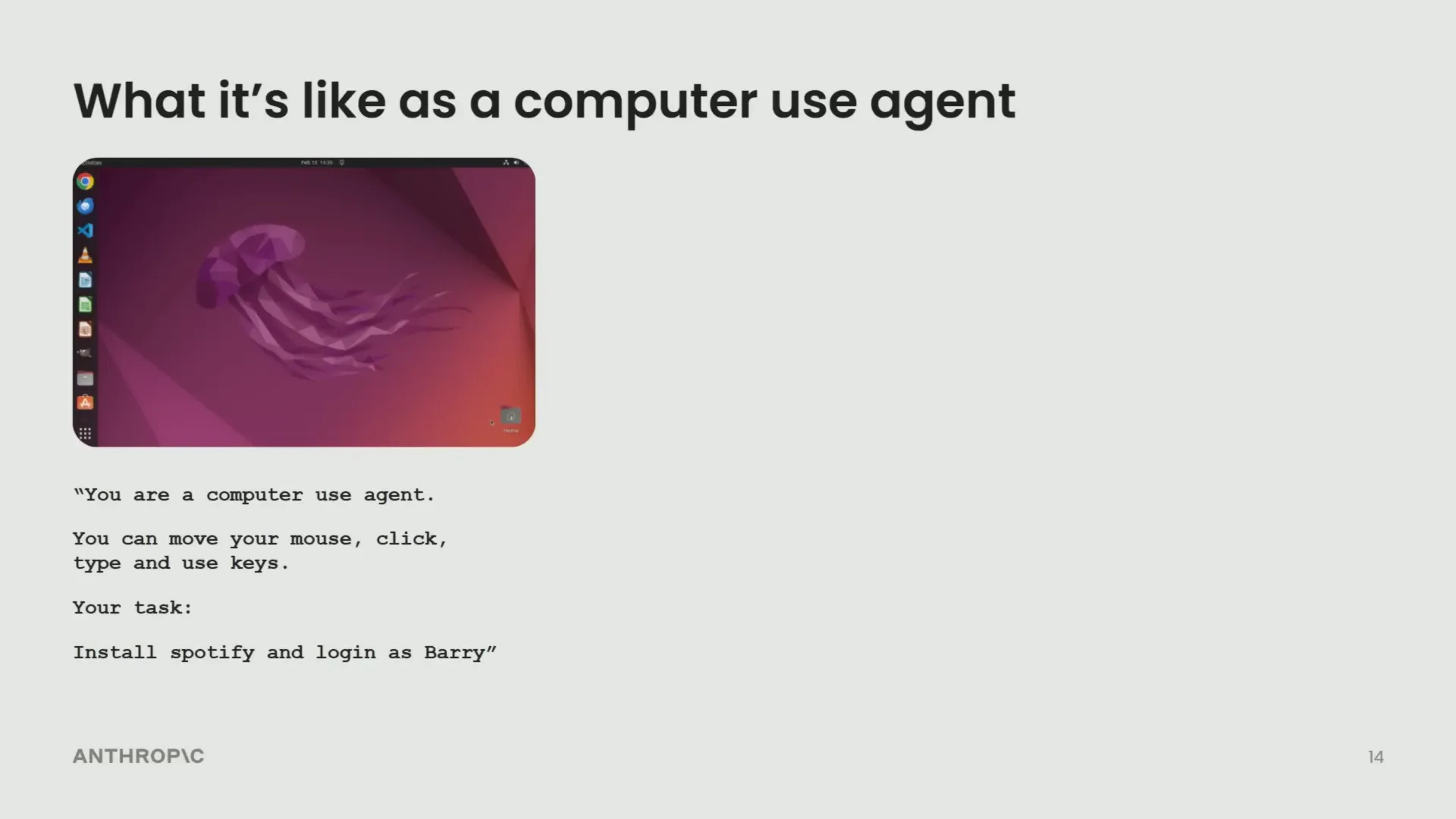

Barry illustrates this with a thought experiment: imagine being a computer-use agent yourself. You receive a static screenshot and a brief, often inadequate description of your task. You can act only through the tools available to you, and while an action executes you are effectively "blind" to what happens until the next screenshot arrives. You must make decisions with incomplete information, as if working in the dark.


This limited feedback loop means agents operate with a degree of uncertainty and must take leaps of faith when acting. Experiencing this firsthand—even hypothetically—can reveal what agents truly need to function effectively, such as:

- Precise knowledge of screen resolution or environment parameters to perform accurate actions

- Recommended actions and guardrails to reduce unnecessary exploration and keep the agent focused
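A system prompt that supplies this missing context might be assembled as in the minimal sketch below. `ScreenEnv` and `build_system_prompt` are hypothetical helpers, the task and rules are illustrative, and the coordinate convention written into the prompt is an assumption that should match whatever your click tool actually expects.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScreenEnv:
    """Environment parameters the agent cannot infer from a screenshot alone."""
    width: int
    height: int
    os_name: str


def build_system_prompt(task: str, env: ScreenEnv,
                        recommended: List[str], avoid: List[str]) -> str:
    lines = [
        f"Task: {task}",
        # Assumed coordinate convention; state whatever your click tool actually uses.
        f"Screen resolution: {env.width}x{env.height} pixels, origin (0, 0) at the top-left.",
        f"Operating system: {env.os_name}",
        "Recommended steps:",
        *[f"- {step}" for step in recommended],
        "Avoid:",
        *[f"- {rule}" for rule in avoid],
    ]
    return "\n".join(lines)


prompt = build_system_prompt(
    "Turn on dark mode.",
    ScreenEnv(1280, 800, "macOS"),
    recommended=["Open System Settings from the Apple menu",
                 "Take a screenshot after each click"],
    avoid=["Installing software", "Closing windows you did not open"],
)

if __name__ == "__main__":
    print(prompt)
```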

Barry encourages builders to perform this exercise with their own agents to identify what contextual information is crucial for their success. Fortunately, modern systems like Claude allow developers to "ask the agent about itself"—testing whether the agent understands instructions, tool capabilities, or decisions made during its trajectory. This introspective approach can uncover ambiguities and areas for improvement.

For example, you can feed the entire agent trajectory into Claude and ask questions like:

"Why did you make this decision here? Is there anything that would help you make better decisions?"

This method complements, but does not replace, a developer’s own understanding of the system, offering a closer look at how agents perceive and reason about their environment.
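Packaging a finished run for this kind of question can be as simple as the minimal sketch below. `build_introspection_request` is a hypothetical helper, and the trajectory format (a list of role/content dictionaries) is an assumption; the returned dictionary follows the shape of the `messages` argument of the Anthropic Messages API.

```python
import json
from typing import Dict, List


def build_introspection_request(system_prompt: str, tool_specs: List[Dict],
                                trajectory: List[Dict[str, str]], step: int) -> Dict:
    """Package a finished agent run so a model can be asked about its own decisions."""
    transcript = "\n".join(
        f"[{i}] {entry['role']}: {entry['content']}" for i, entry in enumerate(trajectory)
    )
    question = (
        "Below is the full trajectory of an agent run.\n\n"
        f"System prompt:\n{system_prompt}\n\n"
        f"Tools:\n{json.dumps(tool_specs, indent=2)}\n\n"
        f"Trajectory:\n{transcript}\n\n"
        f"Why did you make the decision at step [{step}]? "
        "Is there anything that would help you make better decisions?"
    )
    # Same shape as the `messages` argument of the Anthropic Messages API.
    return {"messages": [{"role": "user", "content": question}]}
```

Assuming the Anthropic Python SDK, the result could be sent with `client.messages.create(model=..., max_tokens=..., **request)`, with the model and token limit chosen by you. Building the request as plain data keeps the sketch runnable without network access.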

Personal Musings: The Future of Agentic Systems

After covering practical advice, Barry shares some personal reflections on the future challenges and opportunities in building agents, particularly relevant for AI in recruiting and other enterprise applications.

1. Budget Awareness in Agents

Unlike workflows, where cost and latency are more predictable, agents currently lack robust mechanisms to control these factors. Barry believes that making agents more budget-aware—able to manage constraints related to time, money, and token usage—is critical to enabling broader deployment in production environments.

Open questions include:

- How to define budgets effectively for agent operation?

- What are the best enforcement mechanisms to keep agents within these budgets?
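Barry leaves these as open questions. One possible answer, sketched here purely as an assumption, is to track tokens, estimated spend, and elapsed time per task and check them before every model call. `Budget`, `run_with_budget`, and the per-1K-token price are hypothetical; real prices depend on the model and provider.

```python
import time
from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass
class Budget:
    """Hypothetical per-task budget covering tokens, money, and wall-clock time."""
    max_tokens: int
    max_usd: float
    max_seconds: float
    usd_per_1k_tokens: float  # assumed blended price; substitute your provider's pricing
    tokens_used: int = 0
    started: float = field(default_factory=time.monotonic)

    def charge(self, tokens: int) -> None:
        self.tokens_used += tokens

    def usd_spent(self) -> float:
        return self.tokens_used / 1000 * self.usd_per_1k_tokens

    def exceeded(self) -> Optional[str]:
        """Return which limit has been hit, or None if the agent may continue."""
        if self.tokens_used >= self.max_tokens:
            return "token budget"
        if self.usd_spent() >= self.max_usd:
            return "cost budget"
        if time.monotonic() - self.started >= self.max_seconds:
            return "time budget"
        return None


def run_with_budget(step_token_costs: Iterable[int], budget: Budget) -> str:
    """Stand-in agent loop: each item is the token cost of one model call."""
    completed = 0
    for tokens in step_token_costs:
        reason = budget.exceeded()  # enforcement point: checked before every call
        if reason:
            return f"stopped after {completed} steps: {reason}"
        budget.charge(tokens)
        completed += 1
    return f"finished {completed} steps"


if __name__ == "__main__":
    print(run_with_budget([4000] * 4, Budget(10_000, 100.0, 60.0, 0.01)))
```

Because the check runs before each call, the final call can overshoot the limit (here 12,000 tokens against a 10,000-token budget); a stricter variant would estimate the next call’s cost and refuse it up front.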

2. Self-Evolving Tools

Barry hints at an exciting frontier where agents not only use tools but can also design and improve their own tools—a meta-tool concept. This capability would make agents more adaptable and general-purpose, tailoring their toolsets to specific use cases dynamically.

3. Multi-Agent Collaboration

Barry is convinced that multi-agent systems, where multiple specialized agents collaborate and delegate tasks, will become common in production by the end of 2025. These systems offer benefits such as parallelization and better management of context windows, since sub-agents keep their own work out of the main agent’s context.

However, a significant open question remains: how should these agents communicate? Most systems today rely on rigid, mostly synchronous user-assistant exchanges, but the future likely lies in asynchronous communication, richer roles, and ways for agents to recognize one another.
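The simplest version of delegation can be sketched with `asyncio`, assuming hypothetical `SubTask` and `sub_agent` definitions where each sub-agent would, in practice, run its own agent loop in a fresh context window. The orchestrator fans work out concurrently and keeps only each sub-agent’s short summary, which is how sub-agents protect the main agent’s context. The sketch deliberately sidesteps the open communication question: sub-agents here only report back and never message each other.

```python
import asyncio
from dataclasses import dataclass
from typing import List


@dataclass
class SubTask:
    role: str
    instructions: str


async def sub_agent(task: SubTask) -> str:
    """Hypothetical sub-agent. A real one would run its own agent loop in a fresh
    context window; here it simulates work and returns a short summary."""
    await asyncio.sleep(0.05)  # stands in for the sub-agent's own tool calls
    return f"{task.role}: done ({task.instructions[:20]})"


async def orchestrate(goal: str, subtasks: List[SubTask]) -> str:
    # Delegate concurrently; keep only each sub-agent's summary, not its trajectory,
    # so the orchestrator's own context stays small.
    summaries = await asyncio.gather(*(sub_agent(t) for t in subtasks))
    return f"Goal: {goal}\n" + "\n".join(summaries)


if __name__ == "__main__":
    print(asyncio.run(orchestrate(
        "Ship release notes",
        [SubTask("researcher", "collect merged PRs"), SubTask("writer", "draft notes")],
    )))
```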

Barry invites fellow AI engineers to explore these questions together, emphasizing the collaborative nature of advancing agentic systems.

Bringing It All Together: Key Takeaways for Building Effective Agents

To summarize Barry’s insights, here are the three essential principles for building effective agents:

- Don’t build agents for everything. Use them strategically for complex, valuable tasks that justify their cost and complexity.

- Keep it as simple as possible. Focus first on the core components—environment, tools, and system prompt—and iterate rapidly before optimizing.

- Think like your agents. Understand their limited perspective and context to better align your expectations and improve their design.

By following these guidelines, developers can create agents that are not only more capable but also more trustworthy and cost-effective—paving the way for practical applications like AI in recruiting, coding assistance, and beyond.

Barry’s journey, from building AI products at Meta to his current work at Anthropic, underscores the importance of practicality in AI engineering. His approach blends deep technical knowledge with a clear focus on making AI genuinely useful in the real world.

Conclusion

Building effective agents is a nuanced challenge that demands careful consideration of task complexity, value, and operational constraints. By not overusing agents, keeping systems simple, and empathizing with the agent’s perspective, AI builders can unlock powerful new capabilities that enhance productivity and innovation.

Whether you’re leveraging AI in recruiting to streamline hiring processes or developing agents for other domains, the principles Barry Zhang shares serve as a valuable compass. As the field advances, embracing budget awareness, self-evolving tools, and multi-agent collaboration will be key to pushing the boundaries of what agents can achieve.

Let’s keep building smarter, more effective agents that truly make a difference.