AI in Recruiting: Building Useful General Intelligence with Amazon's NovaAct

In the rapidly evolving world of artificial intelligence, building agents that can think, learn, and act with human-like reliability remains one of the hardest open challenges. Danielle Perszyk, a cognitive scientist at the Amazon AGI San Francisco Lab, offers a perspective on this journey toward what she calls "Useful General Intelligence." Her work with NovaAct, an agentic model and SDK designed to interact with computer interfaces as humans do, is reshaping how we think about AI and its role in augmenting human intelligence. This article covers her vision, the science behind it, and the potential for AI in recruiting and beyond.

Understanding the Challenge: Our Minds and AI Agents Are Both Hallucinating

Danielle opens with a provocative reminder: we are all hallucinating right now. Our brains don't have direct access to reality; they operate by making predictions based on internal world models, processing sensory input, and reconciling discrepancies between the two. Neuroscientists call this process "controlled hallucination," emphasizing that our perception is a sophisticated prediction mechanism rather than a perfect reflection of the outside world.

This analogy extends to AI today. Modern chatbots and language models generate content, brainstorm ideas, and even produce images, but they still "hallucinate" — they can invent plausible but incorrect or unreliable information. Unlike our brains, which have evolved mechanisms to keep hallucinations under control, AI agents are not yet capable of thinking, learning, or acting in a reliable, general-purpose manner.

In this framing, hallucinations in AI are not simply flaws; they are a by-product of the same generative capacity that enables flexibility and creativity. The key is learning how to control them effectively. This understanding sets the foundation for building AI that can complement human intelligence rather than replace it.

Reframing Artificial General Intelligence (AGI): Beyond Smarter Machines

The common narrative around AGI focuses on creating machines that think like humans, often depicted as isolated, all-knowing entities capable of independent thought. Danielle challenges this view by highlighting a fundamental category error: general intelligence does not exist in a vacuum within a single thinking machine.

Instead, intelligence is inherently social and distributed. It emerges through interactions and shared understanding, much like human cognition itself. This perspective shifts the goal from building AI that simply becomes smarter and more autonomous to creating AI that enhances human intelligence and agency.

Danielle stresses that the future of AI lies in augmentation: humans working with AI should be greater than the sum of their parts. This co-evolution with AI agents is not about replacement but collaboration, where agents help us offload mundane tasks and amplify our cognitive capabilities.

Two Historical Perspectives on Intelligence and Technology

To appreciate the vision for useful general intelligence, Danielle revisits two pivotal perspectives in the history of computing and AI:

- The Traditional AI Vision: Originating in 1956, this approach aimed to build machines that think like humans to solve intelligence. Although the original pioneers did not achieve this, their work laid the foundation for AI as we know it today, fueling a feedback loop of technological advances—from powerful computers to the internet and sophisticated algorithms—renewing the quest for AGI.

- Douglas Engelbart’s Vision of Augmentation: Known for inventing the computer mouse and pioneering early graphical user interfaces, Engelbart focused not on replacing human intelligence but on augmenting it. He envisioned computers as tools to make humans smarter by offloading cognitive tasks and distributing our thinking across digital environments.

This techno-social coevolution—where technology reshapes human cognition and vice versa—is a core principle in Danielle’s approach. AI’s purpose, therefore, is not only to become more intelligent but to enhance human intelligence through seamless collaboration.

Why General-Purpose Agents Matter: Simplifying and Amplifying Human Agency

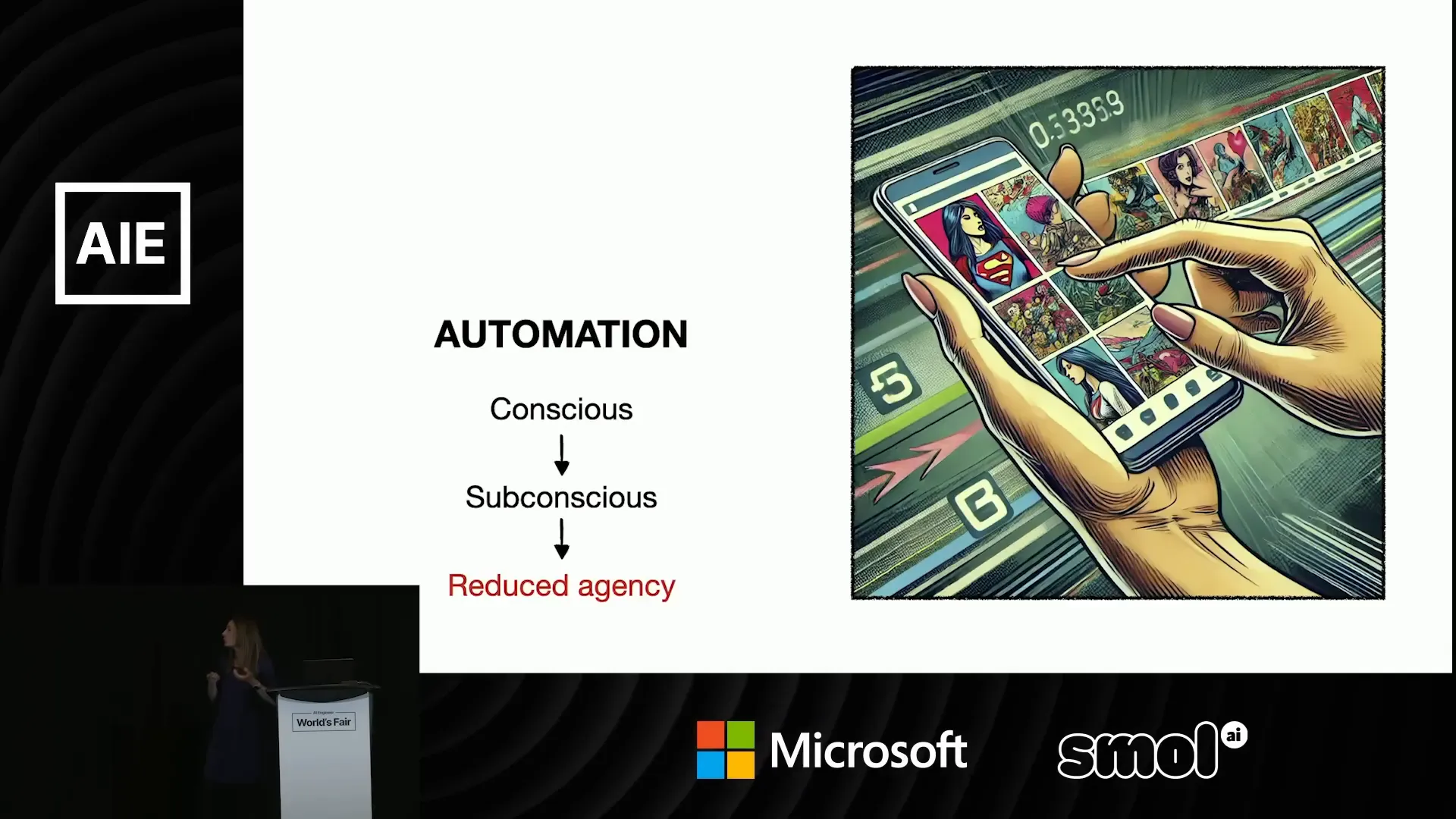

General-purpose AI agents are expected to revolutionize how we work and live by either simplifying our lives or providing leverage to accomplish more. Danielle explains that automation is a natural engine for augmentation. We become experts by automating repetitive tasks, freeing up attention for higher-level thinking and creativity.

However, automation can backfire if not implemented thoughtfully. Endless scrolling, echo chambers, and autocomplete features that stifle original thought are examples of how technology can reduce rather than enhance human agency. The path forward requires precise control and active tailoring of AI systems to human needs, enabling agents to genuinely increase our agency.

At this crossroads, the choice is clear: continue to develop AI that is smarter and more autonomous without regard for human benefit, or build AI that actively un-hobbles humans by making us smarter and more capable.

Introducing NovaAct: The Future of Reliable, Interactive AI Agents

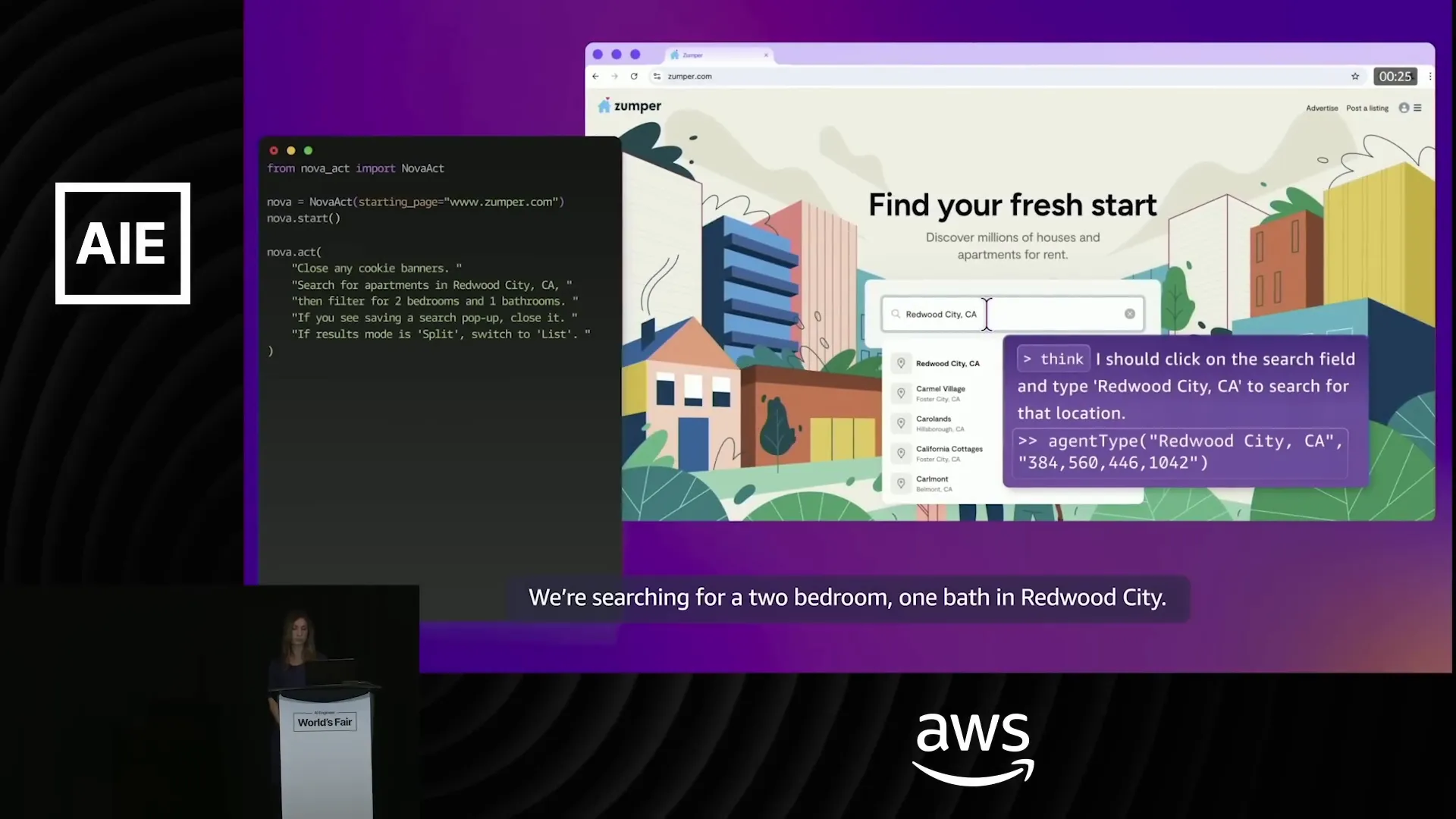

Danielle’s team at Amazon AGI SF Lab is pioneering a new approach to AI agents with NovaAct, a research preview of their general-purpose agent technology. Unlike many current AI systems that function as read-only assistants or code generators, NovaAct is designed to interact with computer user interfaces directly by clicking, typing, and scrolling—just like a human.

This capability is vital because most websites and applications are built for visual interaction rather than API calls. NovaAct leverages Amazon’s foundation model, Nova, fine-tuned for high reliability in UI tasks, allowing it to translate natural language action requests into precise computer interactions.

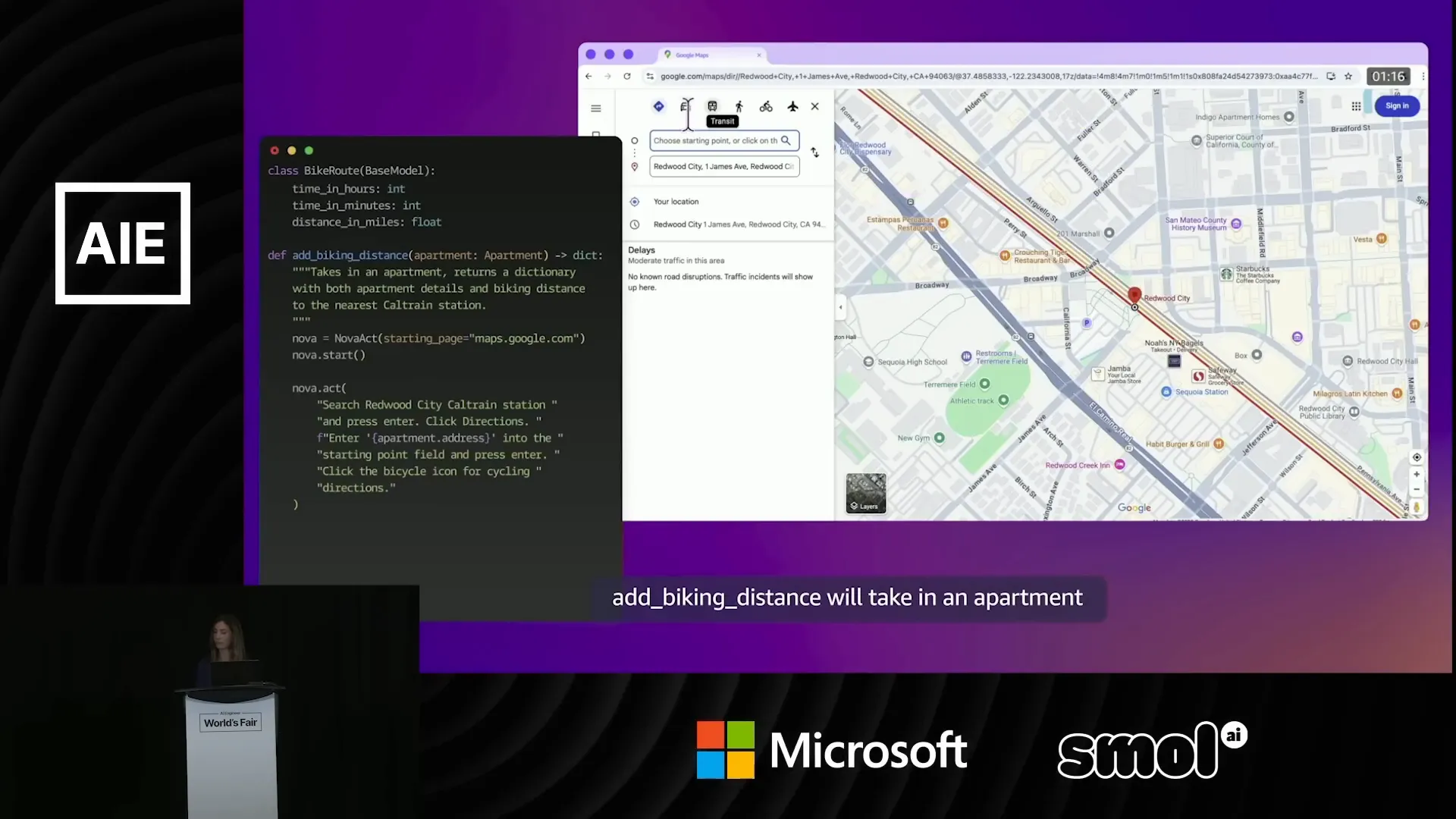

Developers can use NovaAct through a simple SDK that makes it frictionless to build and deploy agents. The atomic unit of interaction is an "act call," which breaks down complex tasks into step-by-step actions, considering outcomes and adapting plans dynamically.
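One way to picture the step-by-step behaviour of an act call is as an observe, decide, act loop. The sketch below is not the NovaAct SDK: `ToyPage`, `choose_step`, and `run_act` are hypothetical names over a toy in-memory page, meant only to show how a request can be broken into small steps that are re-planned after each outcome.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyPage:
    """Hypothetical stand-in for a web page; not a real browser."""
    search_text: str = ""
    results_visible: bool = False
    log: List[str] = field(default_factory=list)

    def apply(self, step: str, query: str) -> None:
        """Apply one atomic step and record it."""
        self.log.append(step)
        if step == "type_query":
            self.search_text = query
        elif step == "click_search" and self.search_text:
            self.results_visible = True


def choose_step(page: ToyPage, query: str) -> Optional[str]:
    """Pick the next atomic step from the current page state, or None when done."""
    if page.results_visible:
        return None
    if page.search_text != query:
        return "type_query"
    return "click_search"


def run_act(page: ToyPage, query: str, max_steps: int = 10) -> List[str]:
    """Observe the page, choose one step, apply it, and repeat until the goal holds."""
    for _ in range(max_steps):
        step = choose_step(page, query)
        if step is None:
            break
        page.apply(step, query)
    return page.log


page = ToyPage()
steps = run_act(page, "2 bed 1 bath Redwood City")
print(steps)
```

The step cap (`max_steps`) is a design choice in this sketch: it keeps the loop bounded even if the page never reaches the goal state.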

Real-World Application: Finding the Dream Apartment

In a demo, Danielle’s teammate Carolyn shows how NovaAct can search for a two-bedroom, one-bath apartment in Redwood City by interacting with rental websites. The agent interprets the task, navigates the UI, and extracts relevant information, demonstrating a level of reliability and adaptability that many current AI agents lack.

Following this, Fjord, another teammate, illustrates how NovaAct integrates with Python to perform more complex workflows, such as calculating biking distances to nearby transit stations for each apartment listing using Google Maps. This integration enables parallel processing and data structuring with just a few lines of code, making it accessible and powerful for developers.
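The fan-out pattern described here can be sketched with Python's standard library alone. This is an illustration under stated assumptions, not the demo's code: the listings and distances are made-up placeholders, and `bike_km_to_transit` is a hypothetical function standing in for the per-listing lookup that the demo performs through an agent on a maps site.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical listings; in the demo these would be extracted by the agent.
LISTINGS = [
    {"address": "101 Example Ave", "rent_usd": 3200},
    {"address": "22 Sample St", "rent_usd": 2950},
    {"address": "7 Placeholder Rd", "rent_usd": 3400},
]

# Placeholder distances standing in for a real maps lookup.
PLACEHOLDER_KM = {
    "101 Example Ave": 2.4,
    "22 Sample St": 0.9,
    "7 Placeholder Rd": 1.6,
}


def bike_km_to_transit(listing: dict) -> dict:
    """Return a copy of the listing with a biking distance attached."""
    return {**listing, "bike_km": PLACEHOLDER_KM[listing["address"]]}


def enrich_listings(listings: list, max_workers: int = 3) -> list:
    """Run one lookup per listing in parallel, then sort by biking distance."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        enriched = list(pool.map(bike_km_to_transit, listings))
    return sorted(enriched, key=lambda row: row["bike_km"])


ranked = enrich_listings(LISTINGS)
for row in ranked:
    print(row["address"], row["bike_km"], row["rent_usd"])
```

Threads suit this kind of work because each lookup spends most of its time waiting on a page rather than computing, and returning plain dictionaries keeps the results easy to sort or load into a table.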

The Complexity of Reliable Computer Use by AI Agents

Interacting with computers might seem trivial to humans, but it poses significant challenges for AI. For example, interpreting icons on a website requires understanding subtle visual cues and contextual information—something humans excel at even when encountering unfamiliar symbols.

Teaching an AI agent every possible icon and interaction is infeasible. Danielle’s team addresses this by enabling agents to explore and learn through reinforcement learning (RL), allowing them to discover novel ways to use computers autonomously.

However, as agents develop unique methods of interaction, it becomes crucial that their perception of the digital environment remains aligned with human understanding. Misaligned agents could cause confusion and reduce trust in AI systems.

Why Current AI Agents Fall Short and How Computer Use Agents Differ

Most existing agents are wrappers around large language models (LLMs) that act as read-only assistants. They can use tools and generate code but lack an embodied environment to ground their interactions. Without a world model that includes visual and interactive context, these agents cannot reliably perform tasks requiring direct manipulation of digital interfaces.

Computer use agents like NovaAct are different; they perceive pixels and interact with user interfaces, providing an early form of embodiment. This grounding is essential for building agents that truly understand and act upon human intentions.

Danielle’s approach focuses on making the smallest units of interaction—atomic actions—reliable and controllable. By combining these atomic actions, agents can generate complex workflows, much like how words combine to create infinite meanings in language.
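The idea of composing small, checkable actions into a workflow can be sketched as follows. Everything here is a hypothetical illustration rather than NovaAct's design: the state is a plain dictionary, the actions (`open_form`, `fill_name`, `submit`) are toy functions, and each one is paired with a check that must hold before the next action runs.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, str]
Action = Callable[[State], State]
Check = Callable[[State], bool]


def open_form(state: State) -> State:
    """Toy atomic action: navigate to the form page."""
    return {**state, "page": "form"}


def fill_name(state: State) -> State:
    """Toy atomic action: type a name into the form."""
    return {**state, "name": "Ada"}


def submit(state: State) -> State:
    """Toy atomic action: submitting only succeeds once a name is present."""
    return {**state, "page": "confirmation"} if state.get("name") else state


def run_workflow(steps: List[Tuple[Action, Check]], state: State) -> State:
    """Run each action in order and stop as soon as a check fails."""
    for action, check in steps:
        state = action(state)
        if not check(state):
            raise RuntimeError(f"{action.__name__} did not reach its expected state")
    return state


signup: List[Tuple[Action, Check]] = [
    (open_form, lambda s: s.get("page") == "form"),
    (fill_name, lambda s: s.get("name") == "Ada"),
    (submit, lambda s: s.get("page") == "confirmation"),
]

final = run_workflow(signup, {"page": "home"})
print(final)
```

Because every step is verified on its own, a failure points at a single action instead of the whole workflow, which is one reading of what "reliable and controllable" can mean at the atomic level.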

The Path Forward: Evolving NovaAct to Enhance Human Intelligence and Agency

Grounding interactions in a shared environment is necessary but not sufficient for building aligned general-purpose agents. To make agents truly reliable and useful, they must understand our higher-level goals and intentions.

Danielle highlights a critical insight from cognitive science and evolutionary biology: general intelligence is social, distributed, and emergent from interactions. It cannot exist solely within isolated individuals or models. This social aspect means that AI agents will need models of human minds to align their behavior effectively.

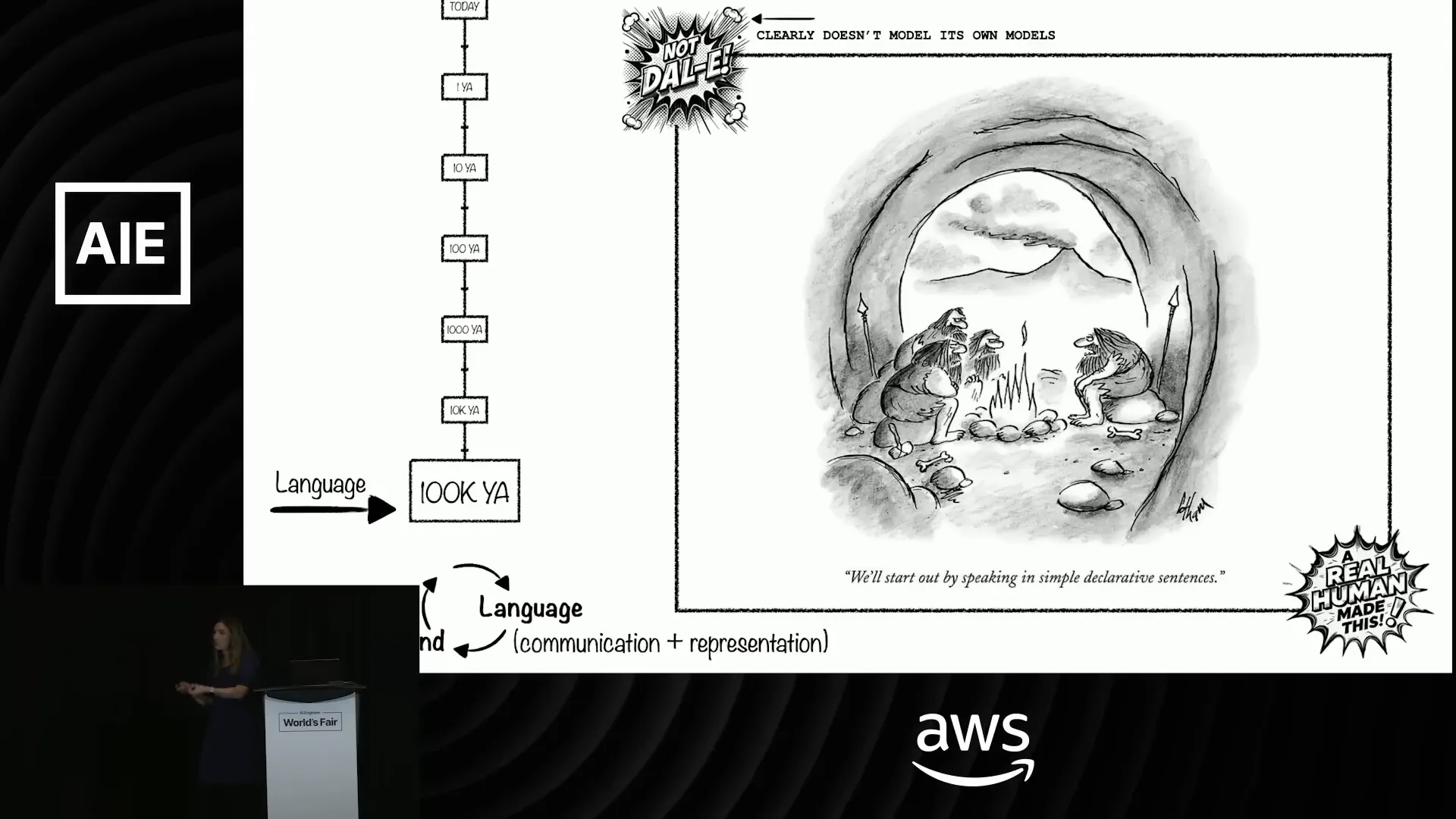

Historically, human intelligence evolved through a feedback loop of social interaction and technological augmentation. Language, in particular, co-evolved with our models of minds, enabling us to represent abstract concepts and communicate effectively.

Language: The Foundation of General Intelligence

Language is unique among communication systems because it inherently models other minds. Unlike programming languages, which are verifiable but lack negotiation of meaning, human language dynamically adjusts and evolves through social interaction.

In Danielle's view, large language models, despite their sophistication, do not truly understand language because they lack awareness that words refer to concepts created by minds. She underscores this by saying, "When we ask what's in a word, the answer is quite literally a mind."

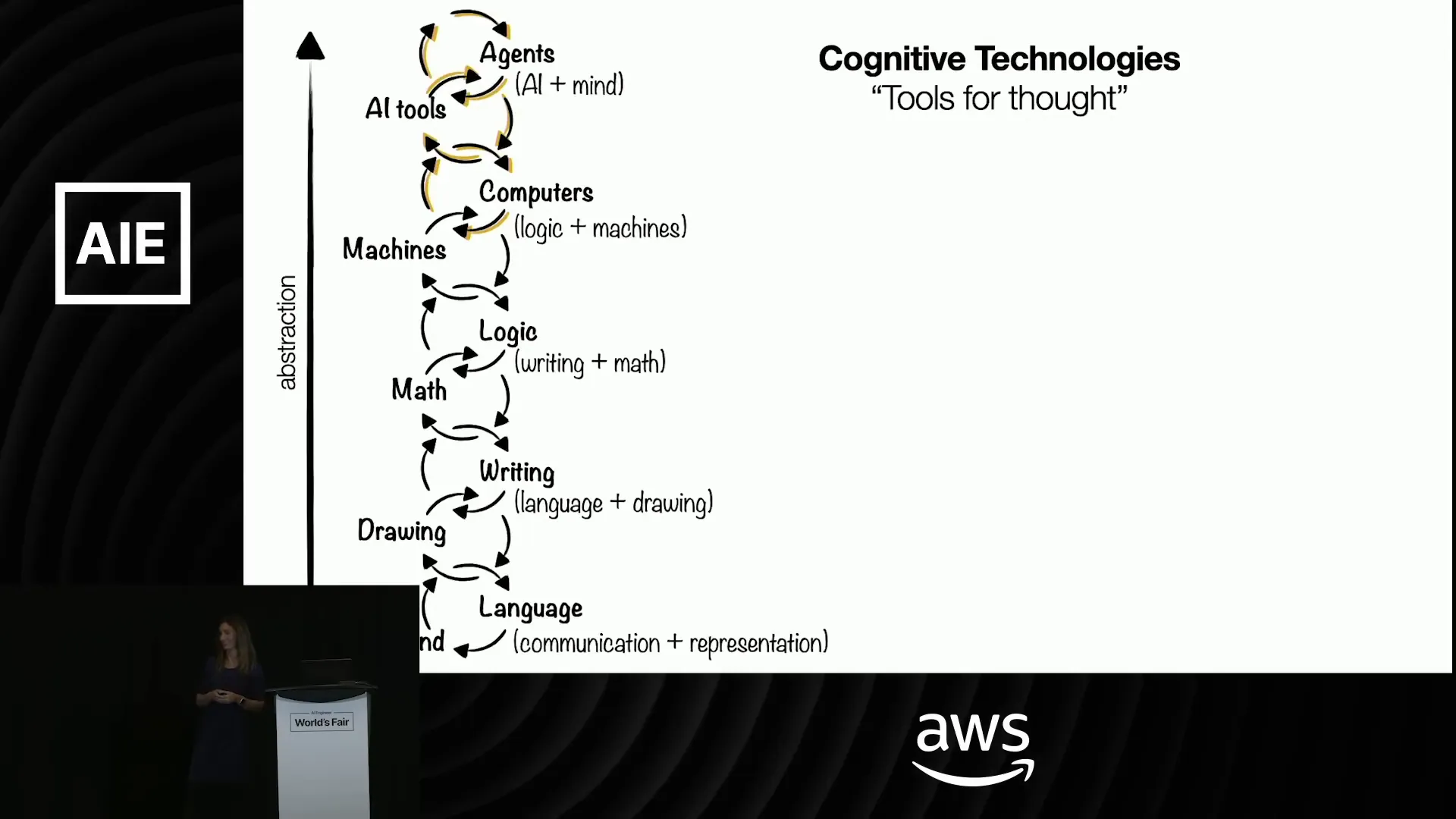

This foundational role of language in cognition has triggered a series of "cognitive technologies"—tools that enable increasingly abstract thought and collective intelligence.

Agents as Cognitive Technologies: Stabilizing Thought and Controlling Hallucinations

Danielle envisions AI agents as the next generation of cognitive technologies that will stabilize human thinking, reorganize our brains, and control hallucinations by directing attention to shared signals and filtering noise.

In this framework, agents become our collective subconscious, learning from us, redistributing skills across communities, and teaching us new knowledge when they discover it. This symbiosis requires building agents that evolve within diverse communities and reflect shared goals and values.

Measuring Success: Optimizing for Human-Centric Metrics

To truly build useful general intelligence, it is not enough to measure AI model capabilities or engagement metrics like time spent on a platform. Instead, we must focus on human-centric outcomes such as creativity, productivity, strategic thinking, and even psychological states like flow.

Optimizing these metrics will ensure that AI agents genuinely enhance human agency and intelligence rather than detract from it.

The Road Ahead: Building Agents with Models of Our Minds

Ultimately, the next step in AI development is to create agents that possess models of human minds. These models will not be explicitly programmed but will emerge from carefully designed interactions and shared environments.

Achieving this requires a common language and interfaces that enable intuitive, reciprocal interactions between humans and agents. Gathering rich human-agent interaction data will be essential for advancing these models.

Most importantly, to motivate widespread adoption and continuous improvement, AI agents must be embedded in useful products that provide tangible benefits to users. As these products become smarter, so too will we collectively become smarter, paving the way for a new era of useful general intelligence.

Conclusion: Embracing Co-Evolution for AI in Recruiting and Beyond

Danielle Perszyk’s vision for useful general intelligence reframes how we approach AI development. By focusing on reliable, embodied agents that interact with computer interfaces as humans do, and by emphasizing the social, distributed nature of intelligence, we can build AI systems that truly augment human potential.

In the context of AI in recruiting, these principles hold transformative promise. AI agents that understand recruiter goals, navigate complex digital tools reliably, and learn from ongoing interactions can streamline candidate sourcing, evaluation, and communication—freeing recruiters to focus on strategic decision-making and human connection.

The journey toward useful general intelligence is a collective one, requiring diverse communities of developers, researchers, and users to co-evolve with AI agents. As we build these agents with a shared language and aligned goals, we unlock the potential for AI to become not just smarter, but truly useful partners in our work and lives.

If you want to explore this frontier, tools like NovaAct offer a glimpse into the future—a future where AI agents and humans grow smarter together, unlocking new heights of creativity, productivity, and agency.