Everyone's Using AI and No One Knows What to Think About It: What That Means for AI in Recruiting

A new study titled "AI Across America" from the Civic Health and Institutions project (researchers at Northeastern, Mass General, Rutgers, Harvard, and the University of Rochester) asked roughly 21,000 adults across all 50 states, in a survey fielded April 10 to June 5, how they use AI, how they think it will affect jobs, and what the government should do. As someone following these trends closely, I want to translate their findings into practical insight, especially for anyone thinking about AI in recruiting.

Why this survey matters

The dataset is notable because it isn't limited to technologists, startup employees, or the usual early-adopter crowd; it captures a broad cross-section of Americans. That makes the results a useful gauge of how far AI has spread into everyday work, not just into Silicon Valley labs.

Big headline: roughly half of U.S. adults report using at least one major AI tool. Adoption is widespread (every state except West Virginia, at about 33%, reported at least 40% usage), and most Americans expect AI to affect their jobs within five years. Those facts change how we should think about AI in recruiting: the tools are already in play, and most people already expect their work to change.

Key findings you need to know

- Scale of adoption: ~50% of U.S. adults report using at least one major AI tool.

- Geography of impact: greatest expectations of workplace disruption are in knowledge-economy hubs (California, New York, Massachusetts) and Sunbelt states (Texas, Georgia, Florida); more agricultural and traditional industrial states expect less disruption.

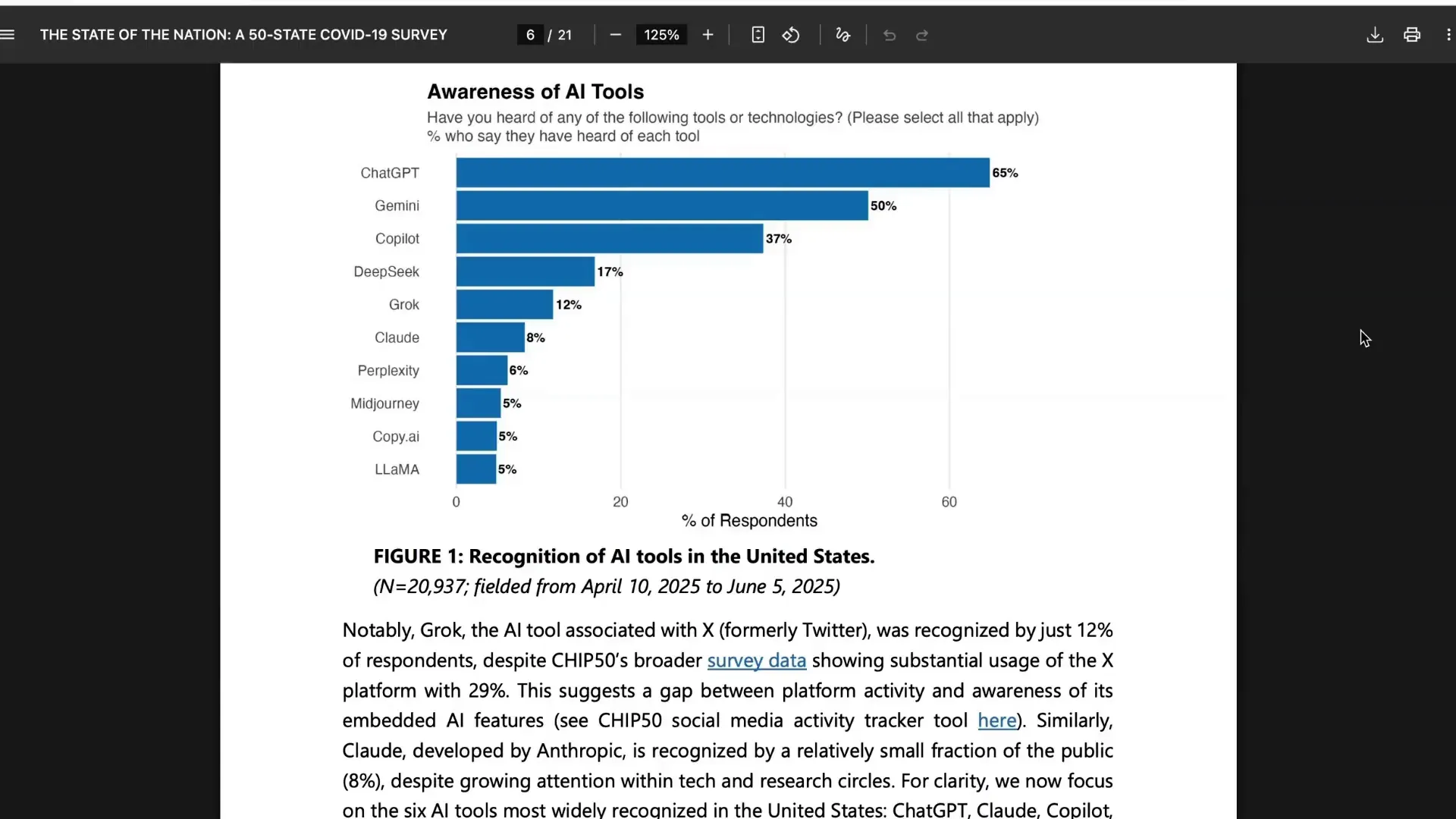

- Awareness of tools: ChatGPT is the most recognized (about two-thirds of respondents), with Google Gemini up to ~50% awareness. A surprising entrant, DeepSeek, reached 17% awareness after heavy media buzz.

- Job impact expectations: 32% expect a major impact on their job within five years, 26% expect a minor impact, and 42% expect no impact — so about six in ten expect some degree of effect.

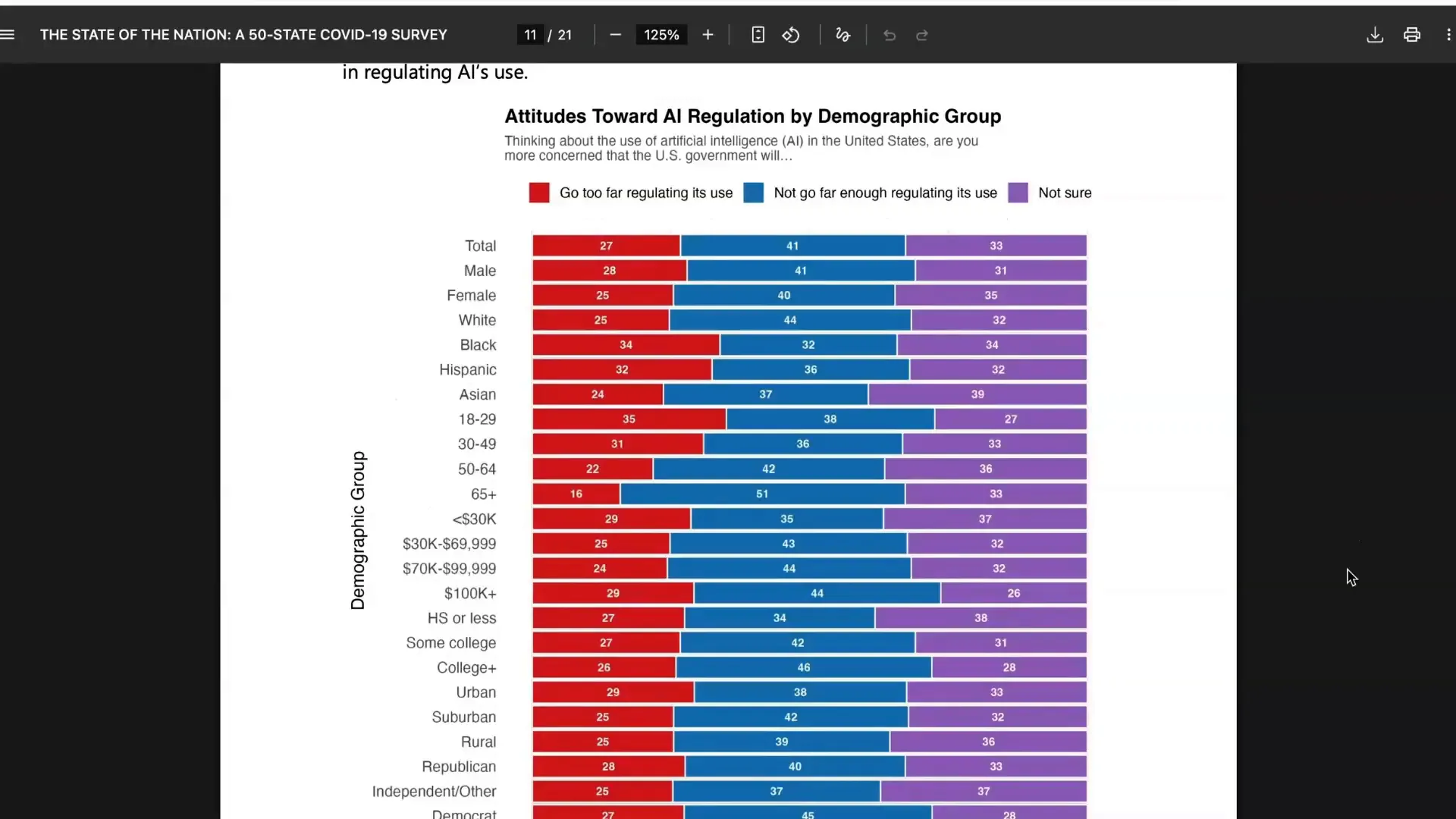

- Regulatory views: 41% worry the government will not go far enough regulating AI, 27% worry it will go too far, and roughly one-third (33%) say they are not sure how government should act.

- Partisan split: surprisingly mild. Republicans and Democrats show roughly similar concerns about over- or under-regulation, indicating the debate hasn't yet hardened along partisan lines.

Tool awareness and usage — what recruiters should note

ChatGPT remains the household name for conversational AI, but Google’s Gemini is closing the gap in recognition (about half of respondents, versus about two-thirds for ChatGPT). For recruiting teams this matters because familiarity shapes expectations on both sides. Candidates who have used ChatGPT may expect automated drafting of job descriptions, AI-powered interview prep, or resume rewrites; hiring teams may already be using AI for sourcing, screening, or communication. For many people, AI is now part of the candidate experience.

Regulation and public uncertainty: a civic window of opportunity

One of the most revealing parts of the survey is that 33% of respondents said they were not sure whether to worry more that government regulation of AI will go too far or that it will not go far enough. Meanwhile, a plurality (41%) worry that regulation will be insufficient. That combination of concern and uncertainty suggests an unusual moment in which policymaking, corporate governance, and public education can meaningfully shape expectations around AI.

For recruiting, this is especially important. AI in recruiting raises a host of governance questions: how do we audit algorithmic screening for bias? What transparency do we owe candidates about automated decisions? How is candidate data stored and used? The public’s openness to learning means that organizations that take a clear, principled approach will likely earn trust.

Not strictly partisan — a notable fact

Many expected strong partisan divides around regulation, but the survey shows only modest differences. Republicans were only slightly more worried than Democrats about government overreach (28% vs. 27%), and Democrats were somewhat more worried about too little regulation (45% vs. 40%). This is good news for employers and HR leaders: thoughtful policy and ethical practices are less likely to be read automatically as a political stance, which makes it easier to build consensus inside organizations.

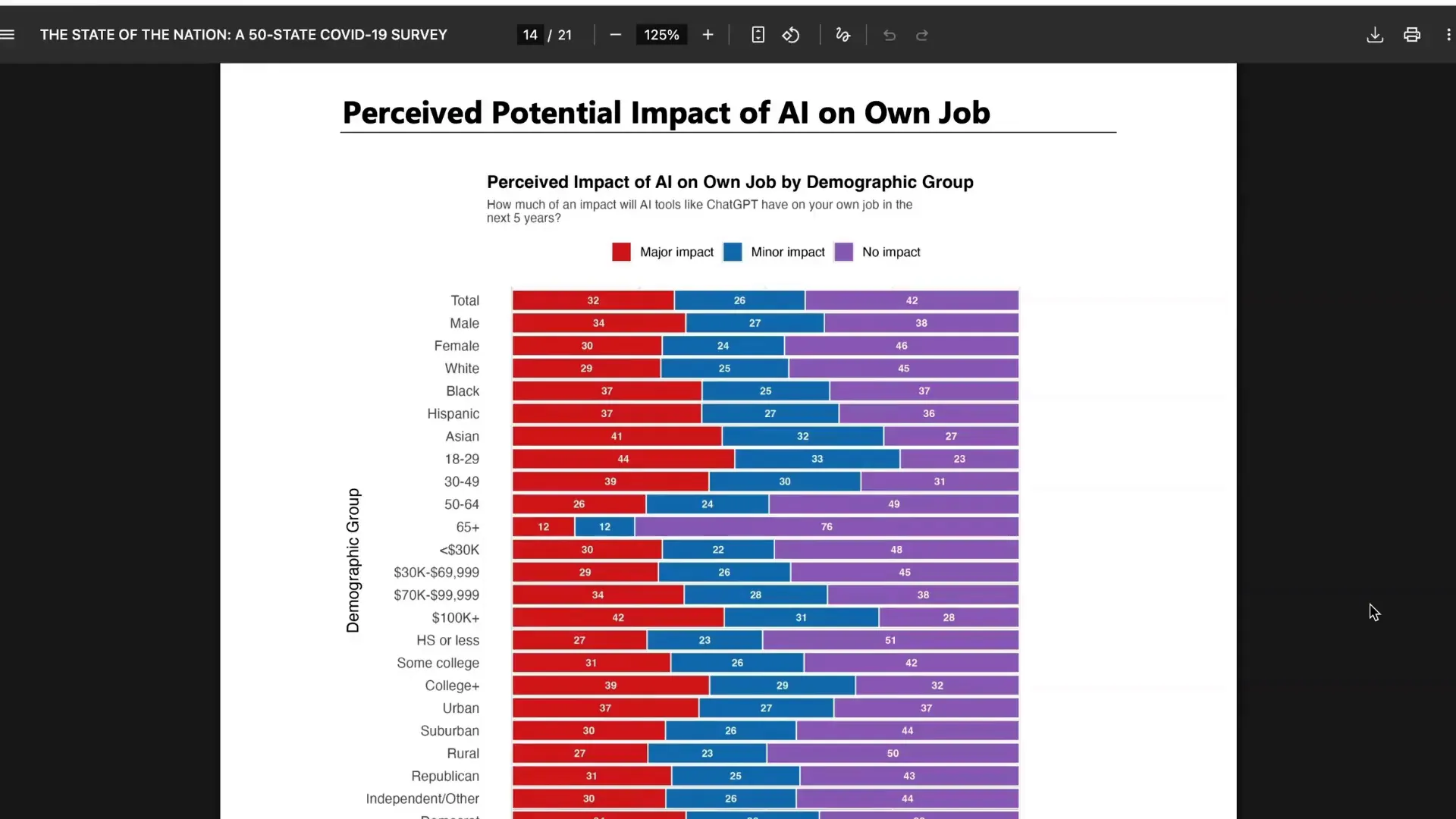

Who expects their job will change — demographic patterns

There’s significant variation across age, race, education, and income:

- Asian Americans: 41% expect a major impact.

- Households earning >$100k: 42% expect a major impact.

- Young adults (18–29): 44% expect a major impact, and 77% expect some impact.

- Older adults (65+): 76% expect AI to have no impact on their job (a figure likely shaped by the large share who are retired).

For recruiting, these patterns provide signals about where skills training and communication should be prioritized. Younger workers expect change and may proactively upskill; higher-income knowledge workers anticipate disruption; other groups may underestimate change and need education or reskilling options.

What this means specifically for AI in recruiting

Now let’s connect the dots. With AI adoption widespread and people broadly expecting job disruption, AI in recruiting moves from hypothetical to practical. Below are the most important implications and recommended actions for hiring teams, HR leaders, and policymakers.

1) Candidate experience is already AI-tinged

Many candidates use AI tools to prepare resumes, craft cover letters, and rehearse interviews. Recruiters must recognize this shift. Screening systems that penalize AI-paraphrased resumes will create false negatives; conversely, systems that naively reward keyword optimization without human review will select for format-savvy applicants rather than best fit.
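To make the keyword-optimization risk concrete, here is a deliberately naive scorer that simply counts job keywords in a resume. It is a hypothetical toy, not a description of how any particular screening product works; the function name `keyword_score`, the keyword list, and both resume snippets are invented for illustration.

```python
import re

KEYWORDS = ["sourcing", "ATS", "screening", "onboarding"]  # hypothetical job keywords


def keyword_score(resume_text: str, keywords: list[str]) -> int:
    """Deliberately naive: count every token that matches a keyword (case-insensitive)."""
    wanted = {k.lower() for k in keywords}
    tokens = re.findall(r"[a-z0-9+#]+", resume_text.lower())
    return sum(1 for token in tokens if token in wanted)


# Invented resume snippets: one describes real accomplishments, one just repeats keywords.
substantive = (
    "Led sourcing for 40 engineering roles, cut time-to-hire by two weeks, "
    "and redesigned the screening process with hiring managers."
)
stuffed = (
    "Recruiter skills: sourcing, ATS, screening, onboarding, "
    "sourcing, ATS, screening, onboarding."
)

print(keyword_score(substantive, KEYWORDS))  # 2
print(keyword_score(stuffed, KEYWORDS))      # 8
```

The keyword-stuffed line outscores a concrete accomplishment four to one, which is the failure mode that human review and job-relevant criteria are meant to catch.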

2) Transparency and consent matter

Given public uncertainty about regulation, being transparent about the use of AI in hiring is a trust-building move. Disclose where AI assists with screening, explain how data is used, and give candidates a path to request human review. These practices align with both legal caution and public expectations.

3) Audit for fairness and data quality

Use continuous auditing: evaluate selection rates across protected groups, test for disparate impact, and validate that features the model relies on are job-relevant. AI in recruiting must be monitored empirically the way other HR metrics are — this is risk management and quality control.
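One common starting point for the selection-rate check is the adverse impact ratio: each group's selection rate divided by the highest group's rate, with ratios below 0.8 flagged under the "four-fifths" rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures. The sketch below assumes you already have applicant and selection counts per group for one screening stage; the group labels and counts are hypothetical and are not from the survey. The four-fifths rule is a screening heuristic rather than a legal safe harbor, so a flag should prompt closer review (including statistical tests, especially with small samples) rather than a final conclusion.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        return self.selected / self.applicants if self.applicants else 0.0


def adverse_impact_ratios(outcomes: dict[str, GroupOutcome]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {group: o.selection_rate for group, o in outcomes.items()}
    top = max(rates.values())
    if top == 0:
        raise ValueError("no one was selected, so impact ratios are undefined")
    return {group: rate / top for group, rate in rates.items()}


def flag_four_fifths(outcomes: dict[str, GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths (0.8) rule of thumb."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical counts for one screening stage (not survey data).
outcomes = {
    "group_a": GroupOutcome(applicants=200, selected=60),  # rate 0.30
    "group_b": GroupOutcome(applicants=150, selected=30),  # rate 0.20
    "group_c": GroupOutcome(applicants=100, selected=27),  # rate 0.27
}

print({g: round(r, 2) for g, r in adverse_impact_ratios(outcomes).items()})
# {'group_a': 1.0, 'group_b': 0.67, 'group_c': 0.9}
print(flag_four_fifths(outcomes))  # ['group_b']
```

Running this per stage (resume screen, assessment, interview) and per model version turns "continuous auditing" into a routine report rather than a one-off review.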

4) Invest in upskilling, not only automation

Since a majority expect some impact on jobs, organizations that help employees transition will reduce disruption and retain institutional knowledge. Upskilling for hiring managers — how to use AI outputs critically, not passively — should be part of any rollout.

5) Create governance that is pragmatic and participatory

Design cross-functional governance: involve HR, legal, ethics, and technical teams. Because public sentiment is fluid, include employee and candidate feedback loops. Document decision-making and maintain a living playbook for procurement, vendor assessment, and incident response.

Practical checklist: deploying AI in recruiting responsibly

- Inventory AI tools currently in use (vendor and ad-hoc tools like ChatGPT used by staff).

- Publish a short candidate-facing disclosure about any AI-driven assessments.

- Run baseline fairness and validity checks on screening models before deployment.

- Train recruiters to interpret AI outputs and override them when necessary.

- Provide a human-review option for candidates affected by automated decisions.

- Log decisions and maintain versioned documentation for audits.
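Several checklist items (recruiter overrides, human review, decision logging) can share a single record. The sketch below assumes a hypothetical `ScreeningDecision` schema appended as JSON Lines to a local file; the field names and the override rule are illustrative choices, not a standard or any vendor's format. It refuses to log an override without a named reviewer and a reason, and it lists candidates who asked for human review but have no reviewer recorded yet.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ScreeningDecision:
    """One hypothetical audit-log record for an AI-assisted screening step."""
    candidate_id: str
    requisition_id: str
    model_version: str                    # version of the screening tool or config used
    ai_recommendation: str                # e.g. "advance" or "reject"
    final_decision: str                   # what the organization actually decided
    reviewer: Optional[str] = None        # recruiter who reviewed, if anyone
    override_reason: Optional[str] = None
    human_review_requested: bool = False  # candidate asked for human review
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        # An override of the AI recommendation must name a reviewer and a reason.
        overridden = self.final_decision != self.ai_recommendation
        if overridden and not (self.reviewer and self.override_reason):
            raise ValueError("override requires a reviewer and an override_reason")


def append_decision(path: str, decision: ScreeningDecision) -> None:
    """Append one record as a JSON line; existing lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(decision)) + "\n")


def pending_human_reviews(path: str) -> list[dict]:
    """Records where a candidate requested review but no reviewer is logged."""
    with open(path, encoding="utf-8") as f:
        records = [json.loads(line) for line in f if line.strip()]
    return [r for r in records if r["human_review_requested"] and r["reviewer"] is None]


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "decisions.jsonl")
    append_decision(log_path, ScreeningDecision(
        "c-001", "req-42", "screener-v1.3", "reject", "reject",
        human_review_requested=True,
    ))
    append_decision(log_path, ScreeningDecision(
        "c-002", "req-42", "screener-v1.3", "reject", "advance",
        reviewer="j.doe", override_reason="Relevant experience missed by parser",
    ))
    pending = [r["candidate_id"] for r in pending_human_reviews(log_path)]

print(pending)  # ['c-001']
```

A production system would more likely write to a database with access controls and retention rules; the point of the sketch is that model version, AI recommendation, final decision, and reviewer are captured together, so an audit can reconstruct what happened and why.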

Policy implications and the road ahead

The combination of high adoption, widespread expectation of workplace impact, and significant regulatory uncertainty creates an urgent policy space. Policymakers should focus on principles that translate into action: transparency, contestability (ways to challenge automated decisions), and minimum standards for data security and bias testing. Those align closely with organizations’ operational needs when implementing AI in recruiting.

Importantly, the survey’s most optimistic signal is the large share of respondents who answered "not sure" about how the government should act. That uncertainty is an opportunity: public education, stakeholder engagement, and pilot programs can shape sensible regulation before adversarial dynamics harden. Employers and HR leaders should participate in those conversations — both to advocate for workable rules and to help set industry norms.

Conclusion — a practical optimism

The "AI Across America" survey shows that AI is mainstream, Americans expect it to matter, and they’re not yet locked into fixed political stances about regulation. For those of us working with AI in recruiting, that combination is both a responsibility and an opportunity.

Responsibility because the tools already touch real opportunities in people’s lives; opportunity because the public is open to discussion and because early adopters who build transparent, fair, and human-centered hiring processes will gain trust and competitive advantage. Be deliberate: disclose, audit, train, and govern. Do those things well, and AI in recruiting can improve hiring speed and quality while protecting candidate rights and organizational reputation.

If you lead hiring operations, consider this survey a call to action: inventory your tools, make a clear policy for human oversight, and join the civic conversation. The technology will keep moving — the best path is to steer it toward fairer, clearer, and more humane hiring practices.